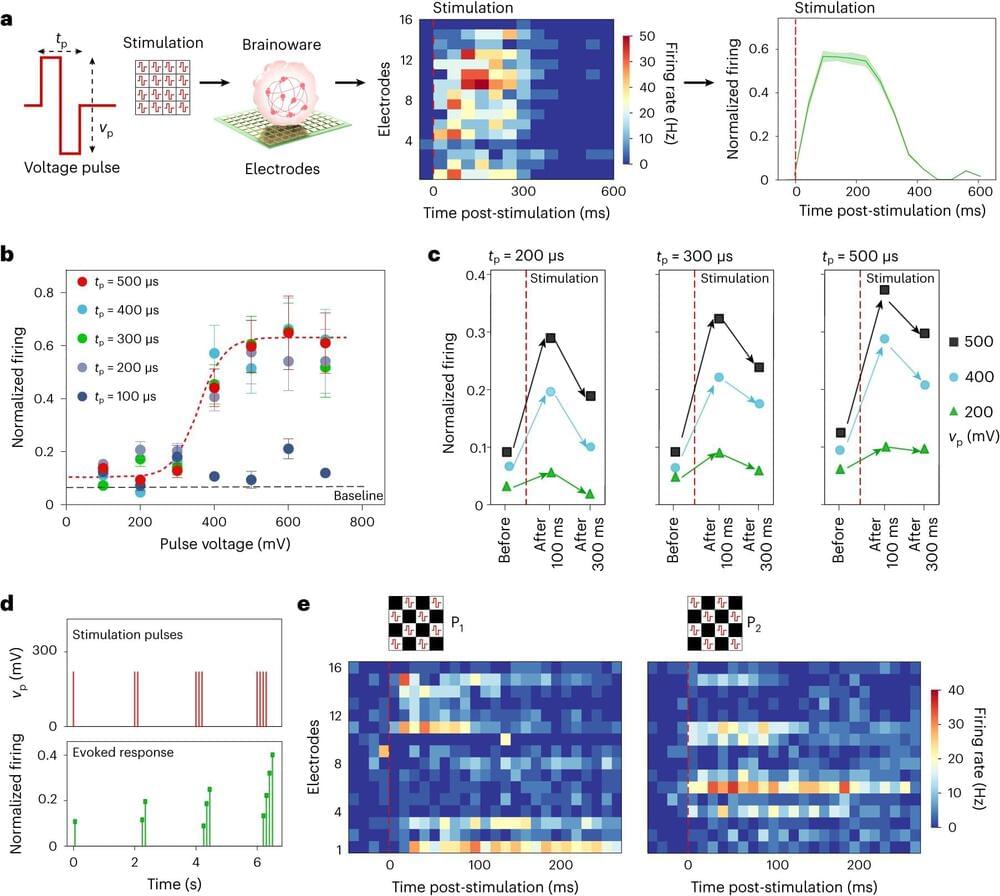

Feng Guo, an associate professor of intelligent systems engineering at the Indiana University Luddy School of Informatics, Computing and Engineering, is addressing the technical limitations of artificial intelligence computing hardware by developing a new hybrid computing system, dubbed “Brainoware,” that combines electronic hardware with human brain organoids.

Advanced AI techniques such as machine learning and deep learning, which run on specialized silicon computer chips, consume enormous amounts of energy. To address this, engineers have designed neuromorphic computing systems, modeled after the structure and function of the human brain, to improve the performance and efficiency of these technologies. However, these systems are still limited in their ability to fully mimic brain function, as most are built on digital electronic principles.

In response, Guo and a team of IU researchers, including graduate student Hongwei Cai, have developed a hybrid neuromorphic computing system that mounts a brain organoid onto a multielectrode array to send and receive information. Brain organoids are brain-like 3D cell cultures derived from stem cells. They contain different brain cell types, including neurons and glia, and brain-like structures such as ventricular zones.