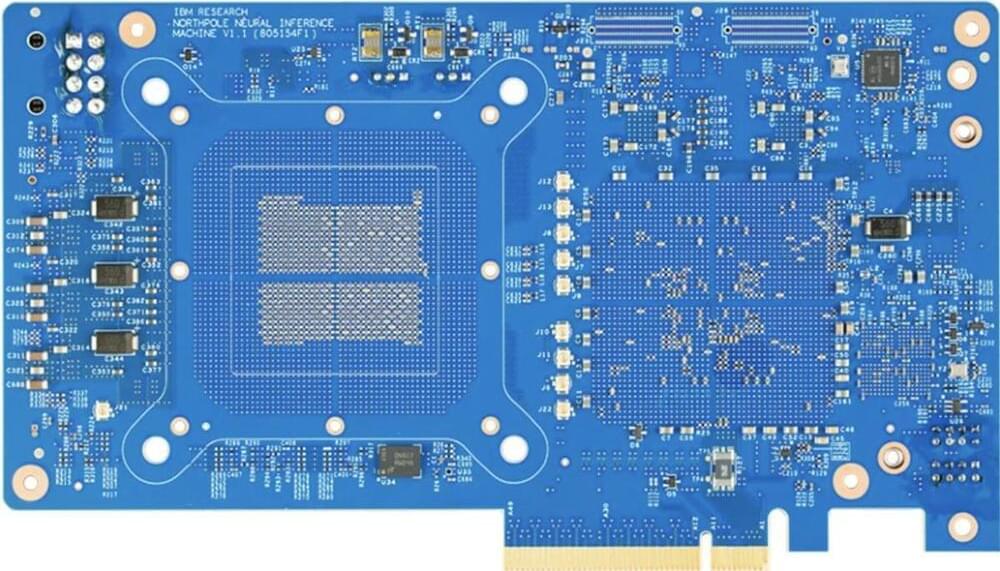

IBM Research recently disclosed details about its NorthPole neural accelerator. This isn’t the first time IBM has discussed the part; IBM researcher Dr. Dharmendra Modha gave a presentation last month at Hot Chips that delved into some of its technical underpinnings.

Let’s take a high-level look at what IBM announced.

IBM NorthPole is an AI inference chip from IBM Research that places its processing units and memory on the same die. Keeping data on-chip avoids much of the costly movement between processor and off-chip memory found in conventional designs, which improves both energy efficiency and speed for neural-network workloads. The chip is built for low-precision operations, which reduce the energy and storage each computation requires. It is intended for a wide range of AI applications, and its efficiency means it does not need bulky cooling systems.
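The article does not describe NorthPole's actual number formats or quantization pipeline. To show why low-precision operations save memory and data movement, here is a minimal sketch of generic symmetric per-tensor quantization, a common technique that is not necessarily what IBM uses. The function names `quantize_symmetric`, `dequantize`, and `storage_bytes` are hypothetical and exist only for this illustration.

```python
def quantize_symmetric(weights, bits):
    """Map float weights to signed `bits`-bit integers using one shared scale.

    Generic illustration only; not NorthPole's (undisclosed here) scheme.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed symmetric range")
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise give scale 0.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level, then clip to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


def storage_bytes(n_weights, bits):
    """Bytes needed if the integer codes are packed densely (rounded up)."""
    return (n_weights * bits + 7) // 8


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    for bits in (8, 4, 2):
        q, scale = quantize_symmetric(weights, bits)
        print(bits, q, dequantize(q, scale))
    # One million weights: 4,000,000 bytes as float32 vs. far fewer packed.
    for bits in (32, 8, 4, 2):
        print(bits, storage_bytes(1_000_000, bits))
```

Each halving of the bit width halves the bytes that must be stored and moved, at the cost of coarser values: the reconstruction error per weight is at most half the scale, and the scale grows as fewer integer levels are available.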