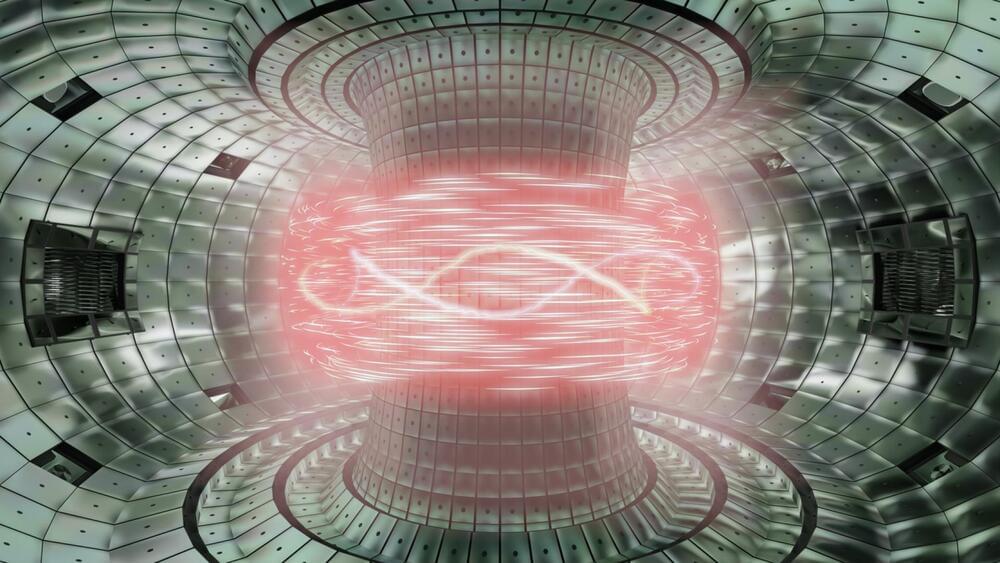

Japan’s Nippon Telegraph and Telephone Corporation (NTT) is applying its Deep Anomaly Surveillance (DeAnoS) artificial intelligence tool, originally designed for telecom networks, to predict anomalies in nuclear fusion reactors.

DeAnoS acts like a detective, working out which part of a system is causing abnormal behavior.

Nuclear fusion reactors are at the forefront of scientific innovation, harnessing the enormous energy released when atomic nuclei fuse. In this process, which also powers the Sun, two light atomic nuclei combine to form a heavier nucleus and release a large amount of energy.
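
To give a rough sense of the energy scale, consider the deuterium-tritium reaction, a fuel combination widely studied for fusion reactors. The article does not say which fuel the reactors in question use, so this choice is an assumption made only for illustration. The energy released follows from the mass difference between the reactants and the products, using standard atomic masses in unified atomic mass units (u):

$$
{}^{2}_{1}\mathrm{H} + {}^{3}_{1}\mathrm{H} \rightarrow {}^{4}_{2}\mathrm{He} + n + 17.6\ \mathrm{MeV}
$$

$$
\Delta m = (2.014102 + 3.016049)\ \mathrm{u} - (4.002602 + 1.008665)\ \mathrm{u} = 0.018884\ \mathrm{u}
$$

$$
E = \Delta m \, c^{2} \approx 0.018884\ \mathrm{u} \times 931.494\ \mathrm{MeV/u} \approx 17.6\ \mathrm{MeV}
$$

That is roughly 17.6 MeV per reaction, millions of times more than the few electronvolts released per reaction in chemical combustion.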