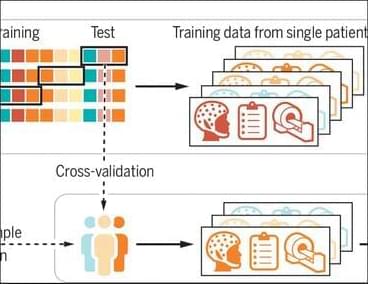

What if AI could tell us we have cancer before we show a single symptom? Steve Quake, head of science at the Chan Zuckerberg Initiative, explains how AI can revolutionize science.

Up next, Harvard professor debunks the biggest exercise myths ►

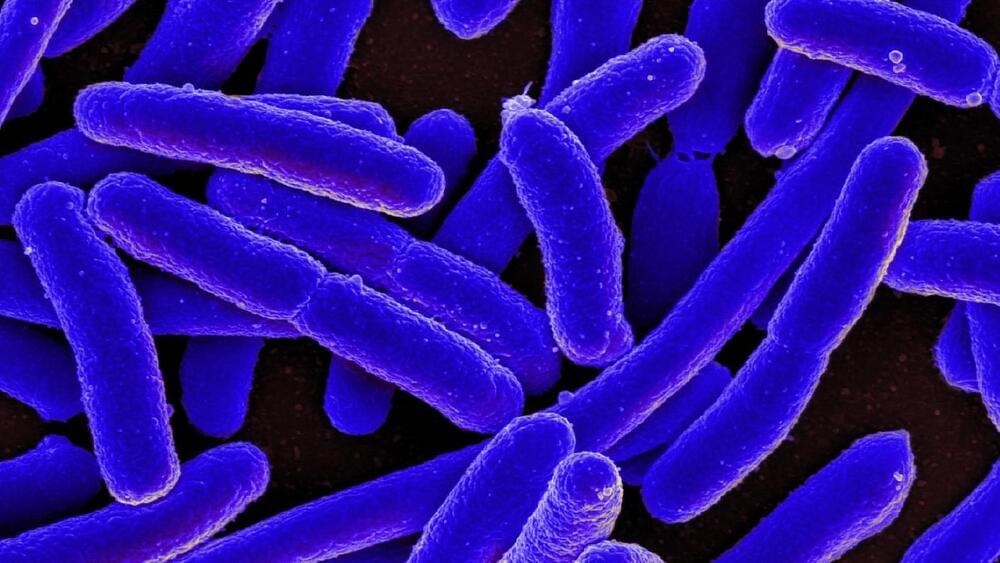

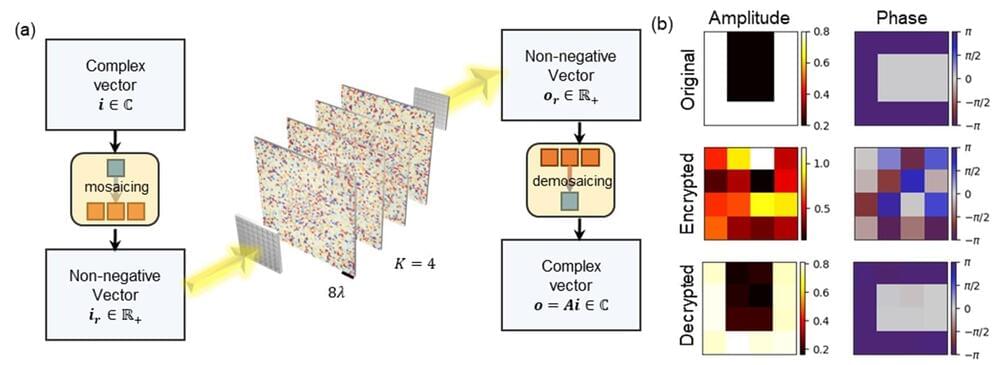

AI can help us better understand complex systems like our cells. The Chan Zuckerberg Initiative is committed to building one of the world's biggest nonprofit AI computing clusters for life science, to help build digital models of what goes wrong in our cells when we get diseases such as diabetes, cancer, and more.

Read the video transcript ► https://bigthink.com/sponsored/future…

We created this video in partnership with the Chan Zuckerberg Initiative.

Go Deeper with Big Think: