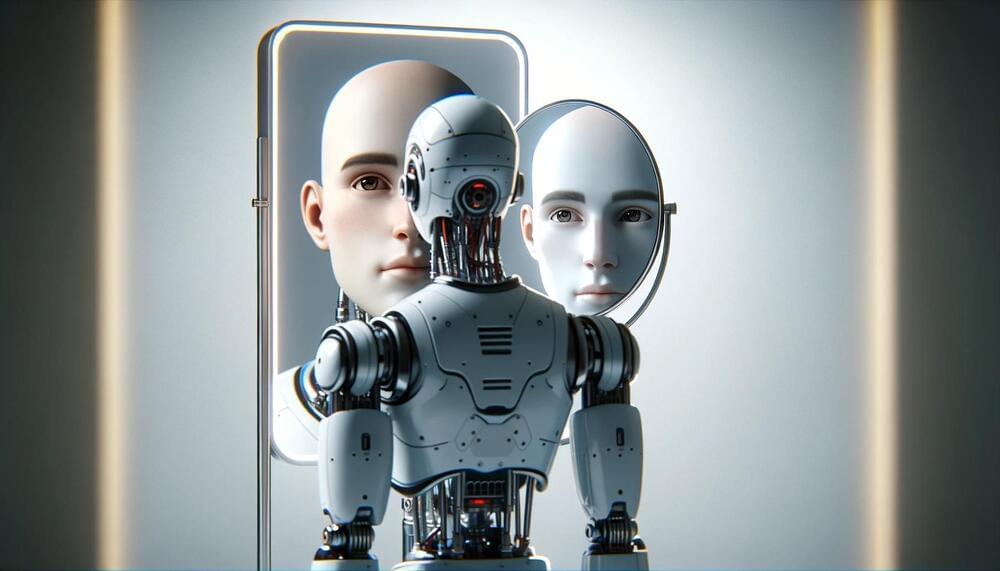

The robot is being developed to offer a helping hand to astronauts and is designed to operate in hostile and hazardous conditions in space.

NASA’s first bipedal humanoid robot, Valkyrie, is undergoing a few of its final testing phases at NASA’s Johnson Space Center in Houston, Texas.

A humanoid robot, much like Iron Man but built from metal and electronics, mimics the way a human walks and looks. NASA is exploring whether such machines, designed for a diverse array of functions, can further space exploration, starting with the Artemis mission, according to Reuters.

Valkyrie, named after a prominent female figure in Norse mythology, commands attention with her formidable presence. Standing 6 feet 2 inches (188 centimeters) tall and weighing 300 pounds (136 kilograms), Valkyrie is an electric humanoid robot capable of operating in degraded or damaged human-engineered environments.