BMW has signed an unprecedented deal with the robotics firm Figure to bring general-purpose humanoid robots into its factories.


See the humanoid robots that will build new BMWs

This can free humans from taking on those tedious — and potentially dangerous — jobs, but it also means manufacturers need to build or buy a new robot every time they find a new task they want to automate.

General purpose robots — ones that can do many tasks — would be far more useful, but developing a bot with anywhere near the versatility of a human worker has thus far proven out of reach.

What’s new? Figure thinks it has cracked the code: in March 2023, it unveiled Figure 01, a machine it said was “the world’s first commercially viable general purpose humanoid robot.”

Scientists design a two-legged robot powered by muscle tissue

Compared to robots, human bodies are flexible, capable of fine movements, and efficient at converting energy into movement. Drawing inspiration from human gait, researchers from Japan crafted a two-legged biohybrid robot by combining muscle tissues and artificial materials. The method, published on January 26 in the journal Matter, allows the robot to walk and pivot.

“Research on biohybrid robots, which are a fusion of biology and mechanics, is recently attracting attention as a new field of robotics featuring biological function,” says corresponding author Shoji Takeuchi of the University of Tokyo, Japan. “Using muscle as actuators allows us to build a compact robot and achieve efficient, silent movements with a soft touch.”

The research team’s two-legged robot, an innovative bipedal design, builds on the legacy of biohybrid robots that take advantage of muscles. Muscle tissues have driven biohybrid robots to crawl and swim straight forward and make turns—but not sharp ones. Yet, being able to pivot and make sharp turns is an essential feature for robots to avoid obstacles.

Adaptive Mobile Manipulation for Articulated Objects in the Open World

Paper page: https://huggingface.co/papers/2401.14403

Project page: https://open-world-mobilemanip.github.io/

Deploying robots in open-ended unstructured environments such as homes has been a long-standing research problem.

Chinese train that runs on road without track

The Rail Bus, a pioneering mode of transportation originating in Zhuzhou, China, is a groundbreaking development. Introduced by the Chinese manufacturer CRRC, this self-driving vehicle, which resembles a train but runs without tracks, completed its inaugural journey in 2017. The Rail Bus seeks to revolutionise traditional concepts of buses, trains, and trams. Its design was presented to the public in June 2017, and within fewer than five months, on October 30, 2017, CRRC began testing. The test covered a 3-kilometer route with stops at four stations in Zhuzhou, marking a significant milestone in transportation evolution.

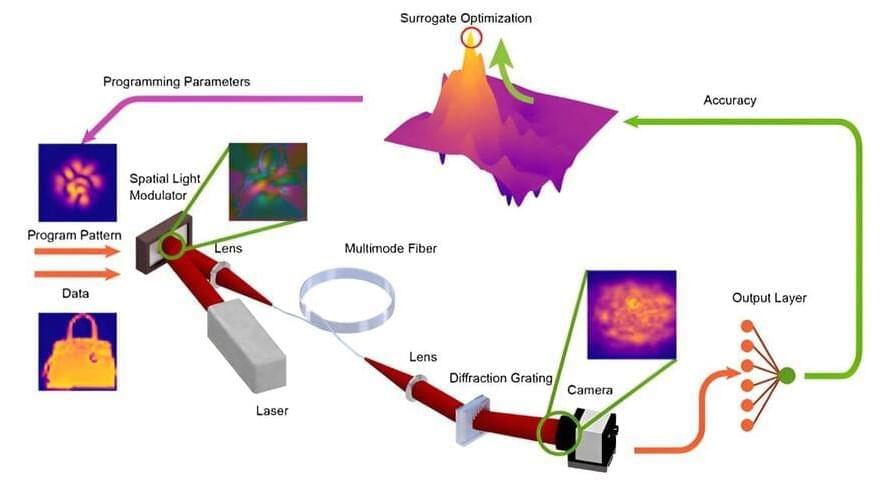

Programming light propagation creates highly efficient neural networks

Current artificial intelligence models utilize billions of trainable parameters to achieve challenging tasks. However, this large number of parameters comes with a hefty cost. Training and deploying these huge models require immense memory space and computing capability that can only be provided by hangar-sized data centers in processes that consume energy equivalent to the electricity needs of midsized cities.

The research community is presently making efforts to rethink both the related computing hardware and the machine learning algorithms to sustainably keep the development of artificial intelligence at its current pace. Optical implementation of neural network architectures is a promising avenue because of the low-power implementation of the connections between the units.

New research reported in Advanced Photonics combines light propagation inside multimode fibers with a small number of digitally programmable parameters and achieves the same performance on image classification tasks as fully digital systems with more than 100 times as many programmable parameters. This computational framework streamlines the memory requirement and reduces the need for energy-intensive digital processes, while achieving the same level of accuracy in a variety of machine learning tasks.

Google shows off Lumiere, a space-time diffusion model for realistic AI videos

Lumiere, for its part, addresses this gap by using a Space-Time U-Net architecture that generates the entire temporal duration of the video at once, through a single pass in the model, leading to more realistic and coherent motion.

“By deploying both spatial and (importantly) temporal down- and up-sampling and leveraging a pre-trained text-to-image diffusion model, our model learns to directly generate a full-frame-rate, low-resolution video by processing it in multiple space-time scales,” the researchers noted in the paper.

The video model was trained on a dataset of 30 million videos, along with their text captions, and is capable of generating 80 frames at 16 fps, or five seconds of video. The source of this data, however, remains unclear at this stage.

Forever working: Gadget plans to tap into your productivity while you sleep by inducing lucid dreaming

ICYMI: INTRODUCING MORPHEUS-1. The world’s first multi-modal generative ultrasonic transformer designed to induce and stabilize lucid dreams, according to Prophetic. #AI Available for beta users Spring 2024.

Startup company Prophetic is set to unveil the “Halo” device to induce lucid dreaming, Fortune reports.