Retro Bio, backed by Sam Altman, is chasing a $5 billion valuation to use AI and cell reprogramming to add 10 healthy years to human life.

EPFL scientists have integrated discarded crustacean shells into robotic devices, harnessing the strength and flexibility of these natural materials.

Although many roboticists today turn to nature to inspire their designs, even bioinspired robots are usually fabricated from non-biological materials like metal, plastic and composites. But a new experimental robotic manipulator from the Computational Robot Design and Fabrication Lab (CREATE Lab) in EPFL’s School of Engineering turns this trend on its head: its main feature is a pair of langoustine abdomen exoskeletons.

Unusual as it may look, CREATE Lab head Josie Hughes explains that combining biological elements with synthetic components holds significant potential not only to enhance robotics, but also to support sustainable technology systems.

Sometimes, less truly is more. By removing oxygen during the synthesis process, a team of materials scientists at Penn State successfully created seven new high-entropy oxides (HEOs)—a class of ceramics made from five or more metals that show promise for use in energy storage, electronics, and protective coatings.

“By carefully removing oxygen from the atmosphere of the tube furnace during synthesis, we stabilized two metals, iron and manganese, into the ceramics that would not otherwise stabilize in the ambient atmosphere,” said corresponding and first author Saeed Almishal, a research professor at Penn State working under Jon-Paul Maria, the Dorothy Pate Enright Professor of Materials Science.

Almishal first succeeded in stabilizing a compound containing manganese and iron by precisely controlling oxygen levels in a material he called J52, composed of magnesium, cobalt, nickel, manganese, and iron. Building on this, he used newly developed machine learning tools, an artificial intelligence approach capable of screening thousands of possible material combinations within seconds, to identify six additional metal combinations capable of forming stable HEOs.

Penn State scientists discovered seven new ceramics by simply removing oxygen—opening a path to materials once beyond reach.

During their experiments, the researchers also established a framework for designing future materials based on thermodynamic principles. Their findings were published in Nature Communications.
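A rough sense of why automated screening pays off comes from simple combinatorics: the number of five-metal combinations grows quickly with the size of the candidate pool. The Python sketch below assumes a hypothetical pool of ten cations (not the set the Penn State team actually screened) and only enumerates candidates; it does not reproduce the team's machine learning model or its thermodynamic stability criteria.

```python
from itertools import combinations
from math import comb

# Hypothetical pool of candidate cations, chosen only for illustration
# (not the pool used in the Penn State study).
CANDIDATE_METALS = ["Mg", "Co", "Ni", "Cu", "Zn", "Mn", "Fe", "Cr", "Ca", "Sc"]


def five_metal_sets(pool, required=()):
    """Yield every 5-metal combination from pool that includes all required metals."""
    need = set(required)
    for combo in combinations(pool, 5):
        if need.issubset(combo):
            yield combo


total = comb(len(CANDIDATE_METALS), 5)
with_fe_mn = list(five_metal_sets(CANDIDATE_METALS, required=("Fe", "Mn")))

print(total)            # 252, i.e. C(10, 5)
print(len(with_fe_mn))  # 56, i.e. C(8, 3): Fe and Mn fixed, 3 more chosen from 8
# The J52 composition (Mg, Co, Ni, Mn, Fe) is one of those 56 sets.
print(("Mg", "Co", "Ni", "Mn", "Fe") in with_fe_mn)  # True
```

With a 15-metal pool the count rises to C(15, 5) = 3003, and allowing non-equimolar compositions multiplies it further, which is where fast automated screening becomes useful.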

Navigating The Deep Tech Industrial Revolution with Chuck Brooks.

Chuck Brooks got his start in cybersecurity at the Department of Homeland Security, as one of the organization’s first hires. He has worked in Congress and at other agencies, as well as at large companies and cybersecurity firms. He draws on these experiences to teach students at Georgetown University how to manage change, including the kind posed by quantum tech. In this podcast episode, Chuck and host Veronica Combs discuss digital security threats and how to use AI.

🎧 Tune in here: https://lnkd.in/gMkTjuE6

The Wu Tsai Neurosciences Institute, Sarafan ChEM-H, and Stanford Bio-X have awarded $1.24 million in grants to five innovative, interdisciplinary, and collaborative research projects at the intersection of neuroscience and synthetic biology.

The emerging field of synthetic neuroscience aims to leverage the precision tools of synthetic biology — like gene editing, protein engineering, and the design of biological circuits — to manipulate and understand neural systems at unprecedented levels. By creating custom-made biological components and integrating them with neural networks, synthetic neuroscience offers new ways to explore brain function, develop novel therapies for neurological disorders, and even design biohybrid systems that could one day allow brains to interface seamlessly with technology.

“The ongoing revolution in synthetic biology is allowing us to create powerful new molecular tools for biological science and clinical translation,” said Kang Shen, Vincent V.C. Woo Director of the Wu Tsai Neurosciences Institute. “With these awards, we wanted to bring the Stanford neuroscience community together to capitalize on this pivotal moment, focusing the power of cutting-edge synthetic biology on advancing our understanding of the nervous system — and its potential to promote human health and wellbeing.”

Today, Mistral AI announced the Mistral 3 family of open-source multilingual, multimodal models, optimized across NVIDIA supercomputing and edge platforms.

Mistral Large 3 is a mixture-of-experts (MoE) model: instead of firing up every neuron for every token, it activates only the expert subnetworks best suited to each token. The result is efficiency that delivers scale without waste and accuracy without compromise, making enterprise AI not just possible but practical.

Mistral AI’s new models deliver industry-leading accuracy and efficiency for enterprise AI. They will be available everywhere, from the cloud to the data center to the edge, starting Tuesday, Dec. 2.
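The routing idea behind MoE can be sketched in a few lines of Python. This is a toy top-k gating layer under stated assumptions, not Mistral Large 3's actual router: the router weights, the four scaling "experts", and k = 2 are hypothetical values chosen for illustration. What it shows is that only the selected experts are evaluated for a given token, and their outputs are mixed using softmax weights renormalized over the chosen experts.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token: Vector, router: List[Vector], experts: List[Expert],
              k: int = 2) -> Tuple[Vector, List[int]]:
    """Toy top-k mixture-of-experts layer (hypothetical, for illustration only)."""
    # One router logit per expert: dot product with that expert's router weights.
    logits = [dot(w, token) for w in router]
    # Keep only the k experts with the highest logits.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)  # unselected experts are never called
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    # Hypothetical expert: scales the token and records that it ran.
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * x for x in v]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
out, chosen = moe_layer([1.0, 0.5], router, experts, k=2)
print(chosen)  # [0, 3]
print(calls)   # [0, 3]: only two of the four experts ran
```

Production MoE layers apply the same pattern with learned matrices, batched tokens, and load-balancing terms during training; skipping the unselected experts is what saves compute.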

The Lifeboat Foundation Guardian Award is annually bestowed upon a respected scientist or public figure who has warned of a future fraught with dangers and encouraged measures to prevent them.

This year’s winner is Professor Roman V. Yampolskiy. Roman coined the term “AI safety” in a 2011 publication titled *Artificial Intelligence Safety Engineering: Why Machine Ethics Is a Wrong Approach*, presented at the Philosophy and Theory of Artificial Intelligence conference in Thessaloniki, Greece, and is recognized as a founding researcher in the field.

Roman is known for his groundbreaking work on AI containment, AI safety engineering, and the theoretical limits of artificial intelligence controllability. His research has been cited by over 10,000 scientists and featured in more than 1,000 media reports across 30 languages.

Watch his interview on *The Diary of a CEO*, which has already received over 11 million views on YouTube alone, at [https://www.youtube.com/watch?v=UclrVWafRAI](https://www.youtube.com/watch?v=UclrVWafRAI). The Singularity has begun; please pay attention to what Roman has to say about it!

Professor Roman V. Yampolskiy, who coined the term “AI safety,” is the winner of the 2025 Guardian Award.

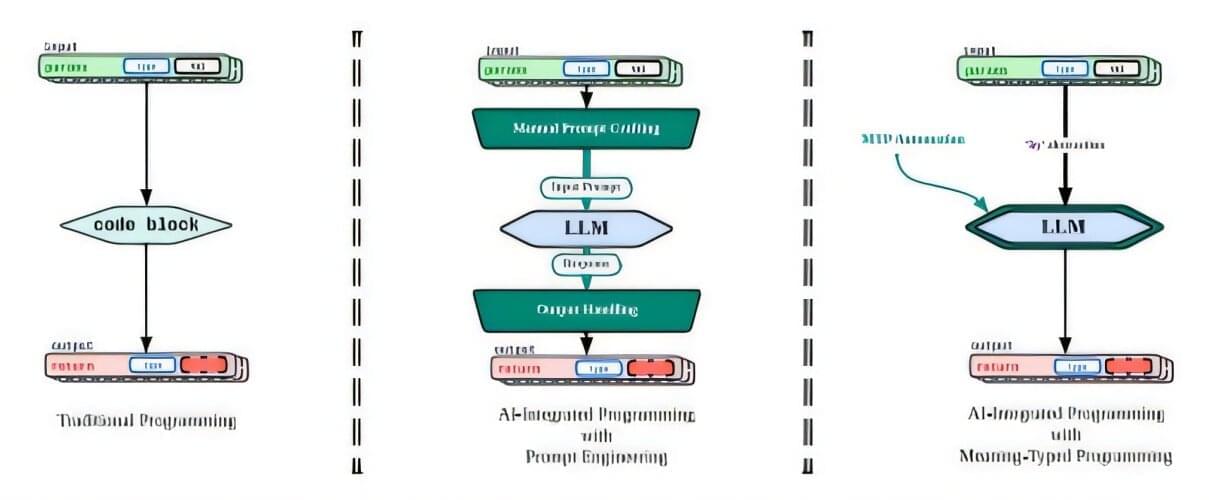

Developers can now integrate large language models directly into their existing software with a single line of code and no manual prompt engineering. The open-source framework, known as byLLM, automatically generates context-aware prompts from the meaning and structure of the program, sparing developers from hand-crafting detailed prompts, according to a paper presented at the SPLASH conference in Singapore in October 2025 and published in the Proceedings of the ACM on Programming Languages.

“This work was motivated by watching developers spend an enormous amount of time and effort trying to integrate AI models into applications,” said Jason Mars, an associate professor of computer science and engineering at the University of Michigan and co-corresponding author of the study.
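The general idea of deriving a prompt from information already present in code (function names, parameter names, type annotations, docstrings, and argument values) can be illustrated with a short Python sketch. This is not byLLM's API or its actual prompt-generation method; `build_prompt` and `translate_to_french` are hypothetical names used only for illustration, and the sketch stops before anything is sent to a model.

```python
import inspect
from typing import get_type_hints


def build_prompt(func, *args, **kwargs) -> str:
    """Hypothetical helper: derive an LLM prompt from a function's name, docstring,
    parameter names and types, and the arguments of the current call."""
    sig = inspect.signature(func)
    bound = sig.bind(*args, **kwargs)
    bound.apply_defaults()  # include default values such as formal=True
    hints = get_type_hints(func)

    lines = [f"Task: {func.__name__.replace('_', ' ')}"]
    doc = inspect.getdoc(func)
    if doc:
        lines.append(f"Description: {doc}")
    lines.append("Inputs:")
    for name, value in bound.arguments.items():
        type_name = getattr(hints.get(name), "__name__", "unspecified")
        lines.append(f"  {name} ({type_name}) = {value!r}")
    ret = hints.get("return")
    lines.append(f"Return a value of type: {getattr(ret, '__name__', 'unspecified')}")
    return "\n".join(lines)


def translate_to_french(text: str, formal: bool = True) -> str:
    """Translate English text into French."""
    ...  # body intentionally empty: the prompt would be sent to a model instead


prompt = build_prompt(translate_to_french, "Good morning")
print(prompt)
# Task: translate to french
# Description: Translate English text into French.
# Inputs:
#   text (str) = 'Good morning'
#   formal (bool) = True
# Return a value of type: str
```

A real integration would then send the generated prompt to a model and convert the reply into the declared return type; that step is omitted here.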

“With GB200 NVL72 and Together AI’s custom optimizations, we are exceeding customer expectations for large-scale inference workloads for MoE models like DeepSeek-V3,” said Vipul Ved Prakash, cofounder and CEO of Together AI. “The performance gains come from NVIDIA’s full-stack optimizations coupled with Together AI Inference breakthroughs across kernels, runtime engine and speculative decoding.”

This performance advantage is evident across other frontier models.

Kimi K2 Thinking, billed as the most intelligent open-source model, serves as another proof point, achieving 10x better performance than the previous hardware generation when deployed on GB200 NVL72.