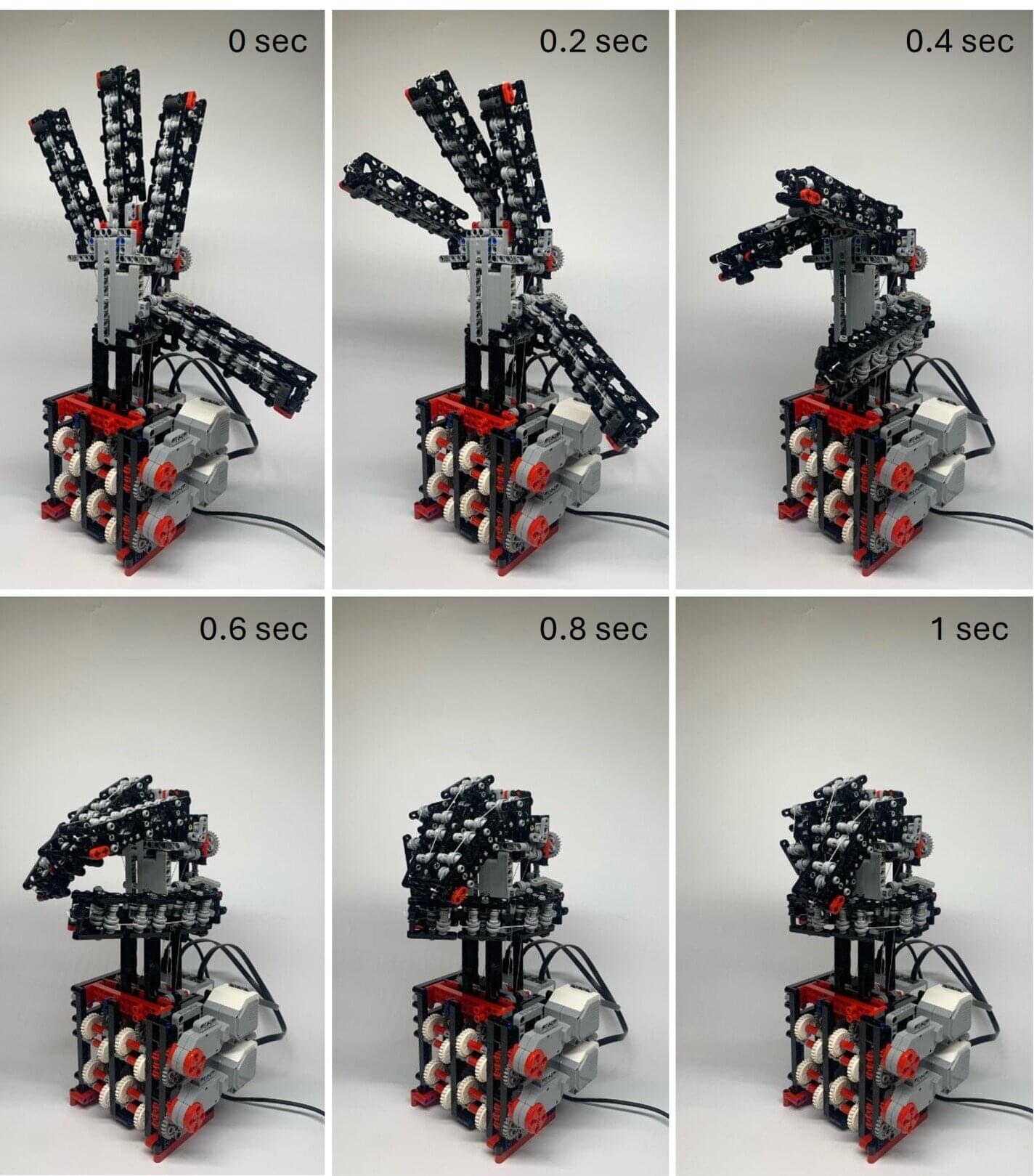

A talented teenager from the UK has built a four-fingered robotic hand from standard Lego parts that performs almost as well as research-grade robotic hands. The anthropomorphic device can grasp, move and hold objects with remarkable versatility and human-like adaptability.

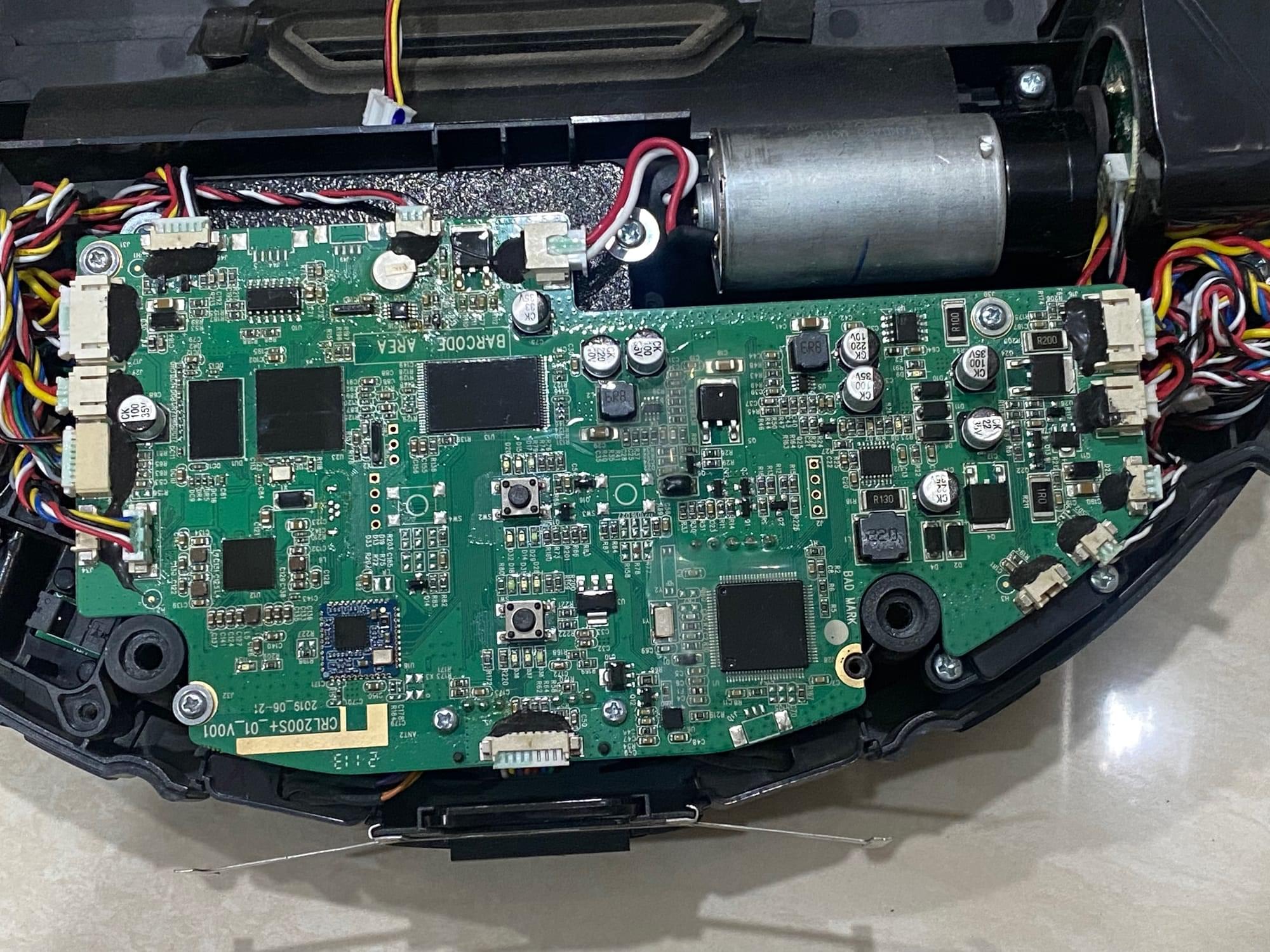

Jared Lepora, a 16-year-old student at Bristol Grammar School, began working on the hand a couple of years ago with his father, who works at the University of Bristol. Called the Educational SoftHand-A, it is made almost entirely of LEGO MINDSTORMS components and is designed to mimic the shape and function of the human hand. The only non-LEGO parts are the cords that act as tendons.

The hand’s four fingers (an index, middle, pinkie and opposing thumb) and twelve joints (three on each finger) are driven by two motors that control two sets of tendons. One set opens the hand while the other closes it, similar to the push-pull system of our own muscles.
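For readers who like to tinker, the opening and closing action described above can be pictured with a toy model. The Python sketch below is not the SoftHand-A's control code. It assumes a single "closure" value shared by all four fingers, a made-up 90-degree joint limit, and equal bending at every joint, none of which comes from the designers. The names `TendonPair`, `pull_close`, `pull_open` and `joint_angles` are hypothetical.

```python
from dataclasses import dataclass

FINGERS = ("index", "middle", "pinkie", "thumb")  # the four fingers named in the article
JOINTS_PER_FINGER = 3                              # three joints on each finger
MAX_JOINT_ANGLE_DEG = 90.0                         # assumption: illustrative limit only


@dataclass
class TendonPair:
    """Toy opposing tendons: pulling one side undoes the other."""

    closure: float = 0.0  # 0.0 = fully open, 1.0 = fully closed

    def pull_close(self, amount: float) -> None:
        # The closing tendon winds in; closure is capped at fully closed.
        self.closure = min(1.0, self.closure + amount)

    def pull_open(self, amount: float) -> None:
        # The opening tendon winds in; closure is floored at fully open.
        self.closure = max(0.0, self.closure - amount)


def joint_angles(pair: TendonPair) -> dict[str, list[float]]:
    """Return an angle in degrees for each of the twelve joints.

    Assumption: every joint bends by the same amount. A real tendon-driven
    hand need not behave this way, especially when gripping an object.
    """
    angle = pair.closure * MAX_JOINT_ANGLE_DEG
    return {finger: [angle] * JOINTS_PER_FINGER for finger in FINGERS}


if __name__ == "__main__":
    hand = TendonPair()
    hand.pull_close(0.5)  # close the hand halfway
    for finger, angles in joint_angles(hand).items():
        print(finger, angles)
```

Calling `pull_open` afterwards reverses the motion, which is the whole point of having two opposing tendon sets: neither can push, so each one undoes the other's pull.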