The primer on Jevons paradox that you didn’t know you needed.

Radiology combines digital images, clear benchmarks, and repeatable tasks. But replacing humans with AI is harder than it seems.

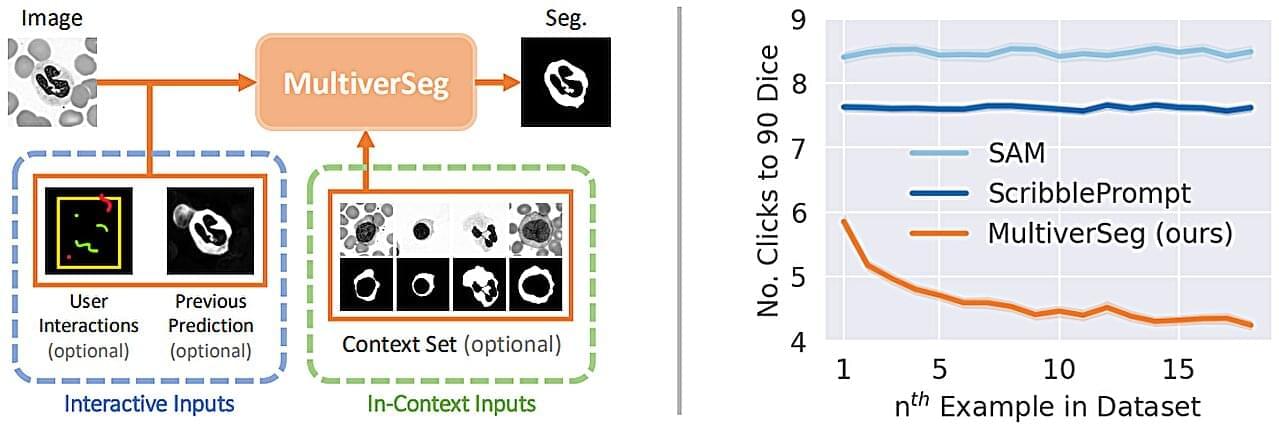

Annotating regions of interest in medical images, a process known as segmentation, is often one of the first steps clinical researchers take when running a new study involving biomedical images.

For instance, to determine how the size of the brain’s hippocampus changes as patients age, a scientist first outlines each hippocampus in a series of brain scans. For many structures and image types, this outlining is done by hand and can be extremely time-consuming, especially when the regions being studied are hard to delineate.
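Once each hippocampus is outlined, measuring its size is simple arithmetic: count the voxels in the binary mask and multiply by the volume of one voxel, which comes from the scan's voxel spacing. The sketch below assumes masks stored as 3D NumPy boolean arrays and spacing given in millimeters. The function names `structure_volume_mm3` and `percent_change` and the toy masks are hypothetical and do not represent real scans.

```python
import numpy as np


def structure_volume_mm3(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> float:
    """Volume of a segmented structure: number of labelled voxels times one voxel's volume."""
    if mask.ndim != 3:
        raise ValueError("expected a 3D segmentation mask")
    voxel_volume_mm3 = float(np.prod(spacing_mm))  # e.g. 1.0 x 1.0 x 1.5 mm -> 1.5 mm^3
    return float(np.count_nonzero(mask)) * voxel_volume_mm3


def percent_change(baseline: float, follow_up: float) -> float:
    """Relative change between two measurements, in percent."""
    return 100.0 * (follow_up - baseline) / baseline


# Hypothetical toy masks on a 10x10x10 grid (not real patient data)
spacing = (1.0, 1.0, 1.5)
baseline = np.zeros((10, 10, 10), dtype=bool)
baseline[2:4, 3:6, 1:5] = True   # 2*3*4 = 24 voxels
follow_up = np.zeros_like(baseline)
follow_up[2:4, 3:6, 1:4] = True  # 2*3*3 = 18 voxels

v0 = structure_volume_mm3(baseline, spacing)   # 24 voxels * 1.5 mm^3
v1 = structure_volume_mm3(follow_up, spacing)  # 18 voxels * 1.5 mm^3
print(v0, v1, percent_change(v0, v1))          # prints 36.0 27.0 -25.0
```

The slow, manual part of such a study is producing the masks; the volume calculation itself is cheap.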

To streamline the process, MIT researchers developed an artificial intelligence-based system that enables a researcher to rapidly segment new biomedical imaging datasets by clicking, scribbling, and drawing boxes on the images. This new AI model uses these interactions to predict the segmentation.
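Many interactive segmentation models receive user input in a similar way: each click, scribble, or box is rasterized into its own image-sized channel and stacked with the image, and a network maps that stack to a predicted mask. The sketch below shows only that encoding step. The `encode_interactions` name and the five-channel layout are hypothetical and not the MIT system's documented input format.

```python
import numpy as np


def encode_interactions(image, pos_clicks, neg_clicks, scribbles, box=None):
    """Stack a 2D image with user interactions as extra input channels.

    Hypothetical encoding for illustration only.
    image:      (H, W) array of intensities
    pos_clicks: list of (row, col) points inside the target structure
    neg_clicks: list of (row, col) points on the background
    scribbles:  (H, W) boolean mask of pixels the user scribbled over
    box:        optional (row0, col0, row1, col1) bounding box, end-exclusive
    """
    h, w = image.shape
    pos = np.zeros((h, w), dtype=np.float32)
    neg = np.zeros((h, w), dtype=np.float32)
    for r, c in pos_clicks:
        pos[r, c] = 1.0
    for r, c in neg_clicks:
        neg[r, c] = 1.0
    box_ch = np.zeros((h, w), dtype=np.float32)
    if box is not None:
        r0, c0, r1, c1 = box
        box_ch[r0:r1, c0:c1] = 1.0  # fill the box region
    # Channel order: image, positive clicks, negative clicks, scribbles, box
    return np.stack([image.astype(np.float32), pos, neg,
                     scribbles.astype(np.float32), box_ch])


# Toy 8x8 slice: one positive click, one negative click, a 3x3 box, no scribbles
image = np.random.default_rng(0).random((8, 8))
x = encode_interactions(image, [(3, 3)], [(7, 0)],
                        np.zeros((8, 8), dtype=bool), (2, 2, 5, 5))
print(x.shape)  # (5, 8, 8)
```

In an interactive loop, the user typically inspects the predicted mask and adds a corrective click or scribble. The re-encoded stack then goes back to the model, so each interaction refines the previous prediction.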

While many workers worry, reasonably, that AI will take their jobs and leave them on the street, the AI revolution is having another consequence: it is filling seats in mental health facilities.

The mass adoption of large language model (LLM) chatbots is producing a growing number of mental health crises centered on AI use. In these cases, people share delusional or paranoid thoughts with a product like ChatGPT, and the bot, instead of recommending that the user get help, affirms those thoughts. The exchanges often spiral into marathon chat sessions that can end in tragedy, even death.

New reporting by Wired, drawing on accounts from more than a dozen psychiatrists and researchers, calls it a “new trend” in our AI-powered world. Keith Sakata, a psychiatrist at UCSF, told the publication that this year alone he has counted a dozen hospitalizations in which AI “played a significant role” in “psychotic episodes.”

We release Code World Model (CWM), a 32-billion-parameter open-weights LLM, to advance research on code generation with world models. To improve code understanding beyond what can be learned from training on static code alone, we mid-train CWM on a large number of observation-action trajectories from Python interpreter and agentic Docker environments, and perform extensive multi-task reasoning reinforcement learning (RL) in verifiable coding, math, and multi-turn software engineering environments. With CWM, we provide a strong testbed for researchers to explore the opportunities world modeling affords for improving code generation with reasoning and planning in computational environments. We present first steps toward showing how world models can benefit agentic coding and enable step-by-step simulation of Python code execution, and we show early results on how reasoning can benefit from such simulation.
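The abstract describes CWM's mid-training data as observation-action trajectories from a Python interpreter but does not give their format. As a rough illustration of what a line-by-line execution trace can look like, the sketch below uses the standard library's `sys.settrace` to record which line of a function is about to run, along with a snapshot of its local variables at that moment. The `trace_locals` helper, the `running_sum` example, and the (line offset, locals) output format are assumptions for illustration, not CWM's data format.

```python
import sys
from types import FrameType


def trace_locals(func, *args):
    """Run func(*args), recording (line offset, locals snapshot) before each line executes."""
    steps = []
    target = func.__code__

    def tracer(frame: FrameType, event: str, arg):
        if frame.f_code is not target:
            return None  # do not trace into other functions
        if event == "line":
            # Offset is relative to the `def` line; the dict copy is shallow.
            steps.append((frame.f_lineno - target.co_firstlineno, dict(frame.f_locals)))
        return tracer  # keep receiving events for this frame

    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)  # always remove the tracer
    return result, steps


def running_sum(xs):
    total = 0
    for x in xs:
        total += x
    return total


result, steps = trace_locals(running_sum, [1, 2, 3])
for offset, local_vars in steps:
    print(offset, local_vars)
```

Each recorded step pairs a position in the program with the variable state at that point. A model trained to predict the next step from the current one is, loosely speaking, learning to simulate execution.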