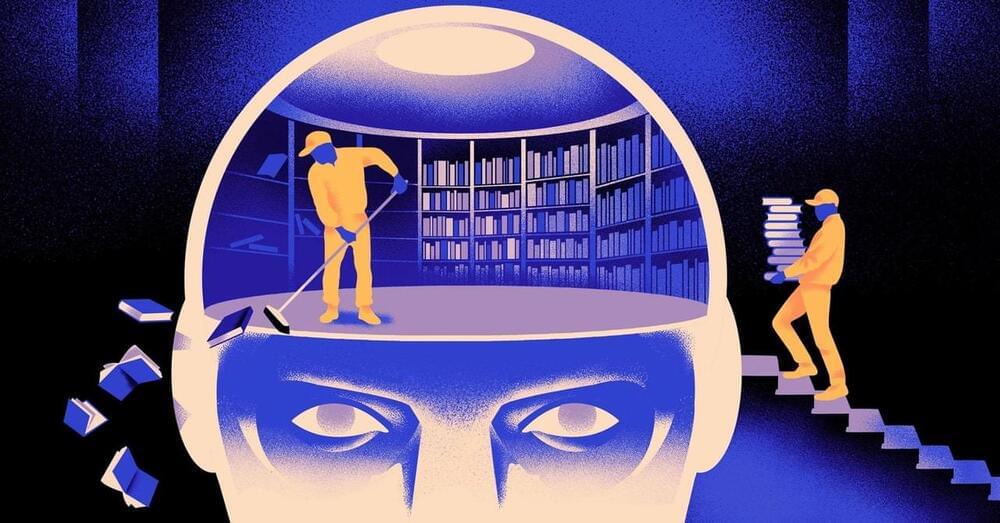

Erasing key information during training allows machine learning models to learn new languages faster and more easily.

“Without a fundamental understanding of the world, a model is essentially an animation rather than a simulation,” said Chen Yuntian, study author and a professor at the Eastern Institute of Technology (EIT).

Deep learning models are generally trained on data alone, without prior knowledge such as the laws of physics or mathematical logic, according to the paper.

But the scientists from Peking University and EIT wrote that when training the models, prior knowledge could be used alongside data to make them more accurate, creating “informed machine learning” models capable of incorporating this knowledge into their output.
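One simple way to picture combining prior knowledge with data is a loss that adds a penalty for disagreeing with a known physical value. The toy sketch below is an illustration only and is not the method from the paper: the model `h(t) = -0.5 * g * t**2`, the function `fit_gravity`, the `prior_weight` parameter, and the measurements are all assumptions made up for this example.

```python
G_PRIOR = 9.81  # assumed prior knowledge: gravitational acceleration, m/s^2


def fit_gravity(times, heights, prior_weight=0.0):
    """Estimate g in the toy model h(t) = -0.5 * g * t**2.

    Minimizes  sum((h_i - x_i * g)**2) + prior_weight * (g - G_PRIOR)**2
    with x_i = -0.5 * t_i**2. The minimizer has a closed form, so no
    iterative training is needed for this one-parameter case.
    """
    xs = [-0.5 * t * t for t in times]
    numerator = sum(x * h for x, h in zip(xs, heights)) + prior_weight * G_PRIOR
    denominator = sum(x * x for x in xs) + prior_weight
    return numerator / denominator


# Hypothetical noisy measurements of an object dropped from rest.
times = [0.1, 0.2, 0.3, 0.4]
heights = [-0.06, -0.18, -0.47, -0.75]

g_data_only = fit_gravity(times, heights)                    # data term only
g_informed = fit_gravity(times, heights, prior_weight=0.01)  # data + prior

print(f"data only: {g_data_only:.3f}  informed: {g_informed:.3f}")
```

With `prior_weight=0` the fit relies on the noisy data alone; raising it pulls the estimate toward the known constant, which is the general trade-off between data and prior knowledge that the paragraph above describes.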

Inflection AI has recently launched Inflection-2.5, a model that competes with all the world’s leading LLMs, including GPT-4 and Gemini. Inflection-2.5 approaches the performance level of GPT-4 but utilizes only 40% of the computing resources for training.

Inflection-2.5 is available to all Pi users today at pi.ai, on iOS, on Android, and via the new desktop app.

Inflection AI’s previous model, Inflection-1, utilized about 4% of the training FLOPs of GPT-4 and exhibited an average performance of around 72% compared to GPT-4 across various IQ-oriented tasks.

Researchers have unveiled a live, reinforcement learning-based system for whole-body humanoid teleoperation.

This is a sci-fi documentary looking at what it takes to build an underground city on Mars. The city goes underground for protection from the storm radiation that rains down on the surface every day, and to further advance Mars colonization efforts.

Where will the materials to build the city come from? How will the crater be covered to protect the inhabitants? And what will it feel like to live in a city that sits in a hole in the ground?

It is a dream of building an advanced Mars colony, showing the science and future space technology needed to make it happen.

Personal inspiration in creating this video comes from: The Expanse TV show and books, and The Martian.

Other topics in the video include: the plan and different phases of construction, the robots building the city, structures on the surface versus below it, pressurizing a habitat on Mars, the soil and how to turn it into Martian concrete, the art of terraforming, the different materials that can be extracted from the planet, and the future plans of the Mars colony, from building upwards to venturing to the asteroid belt and Jupiter’s 95 moons.


Organic computers are based on living, biological “wetware”. This video reports on organic computing research in areas including DNA storage and massively parallel DNA processing, as well as the potential development of biochips and entire biocomputers.

If you are interested in this topic you may enjoy my book “Digital Genesis: The Future of Computing, Robots and AI”.

- Download a free pdf sampler here: http://www.explainingcomputers.com/ge…
- Purchase “Digital Genesis” on Amazon.com here: http://amzn.to/2yVKStK
- Or purchase “Digital Genesis” on Amazon.co.uk here: http://www.amazon.co.uk/dp/1976098068…

Links to specific research cited in the video are as follows:

- Professor William Ditto’s “Leech-ulator”: http://www.zdnet.com/article/us-scien…
- Development of transcriptor at Stanford: https://med.stanford.edu/news/all-new…
- Harvard Medical School DNA storage: https://hms.harvard.edu/news/writing–…
- Yaniv Erlich and Dina Zielinski DNA storage: http://pages.jh.edu/pfleming/bioinfor…
- Manchester University DNA parallel processing: http://rsif.royalsocietypublishing.or…

All biocomputer and other CG animations included in this video were produced by and are copyright © Christopher Barnatt 2017.

If you enjoy this video, you may like my previous report on quantum computing: Quantum Computing 2017 Update

More videos on computing-related topics can be found at: / explainingcomputers

You may also like my ExplainingTheFuture channel at: / explainingthefuture

Drawing inspiration from the extraordinary adaptability of biological entities such as the octopus, researchers have made a significant advance in soft robotics. Under the guidance of Professor Jiyun Kim from the Department of Materials Science and Engineering at UNIST, a research team has developed an encodable multifunctional material that can dynamically tune its shape and mechanical properties in real time.

This groundbreaking metamaterial surpasses the limitations of existing materials, opening up new possibilities for applications in robotics and other fields requiring adaptability.

Current soft machines lack the level of adaptability demonstrated by their biological counterparts, primarily due to limited real-time tunability and restricted reprogrammable space of properties and functionalities. In order to bridge this gap, the research team introduced a novel approach utilizing graphical stiffness patterns. By independently switching the digital binary stiffness states (soft or rigid) of individual constituent units within a simple auxetic structure featuring elliptical voids, the material achieves in situ and gradational tunability across various mechanical qualities.
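The idea of independently switching binary stiffness states can be pictured as a small grid of bits. The sketch below is a hypothetical data-structure illustration only: the grid size, the helper names (`make_pattern`, `set_unit`, `rigid_fraction`, `pattern_count`), and the diagonal pattern are assumptions for this example, and it does not model how any pattern maps to actual mechanical properties.

```python
SOFT, RIGID = 0, 1  # the two digital stiffness states of a unit


def make_pattern(rows, cols, state=SOFT):
    """Create a rows x cols grid with every unit in the same state."""
    return [[state] * cols for _ in range(rows)]


def set_unit(pattern, row, col, state):
    """Return a copy of the pattern with one unit switched.

    All other units keep their state, mirroring independent switching.
    """
    updated = [r[:] for r in pattern]
    updated[row][col] = state
    return updated


def rigid_fraction(pattern):
    """Share of units currently in the rigid state."""
    units = [u for row in pattern for u in row]
    return sum(units) / len(units)


def pattern_count(rows, cols):
    """Number of distinct soft/rigid patterns a grid can encode."""
    return 2 ** (rows * cols)


# Draw a rigid diagonal stripe on an otherwise soft 3 x 3 grid.
pattern = make_pattern(3, 3)
for i in range(3):
    pattern = set_unit(pattern, i, i, RIGID)

for row in pattern:
    print(row)
print(rigid_fraction(pattern), pattern_count(3, 3))
```

Because each unit is switched on its own, the number of encodable patterns doubles with every unit added, which gives a rough sense of how large the reprogrammable space can become.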