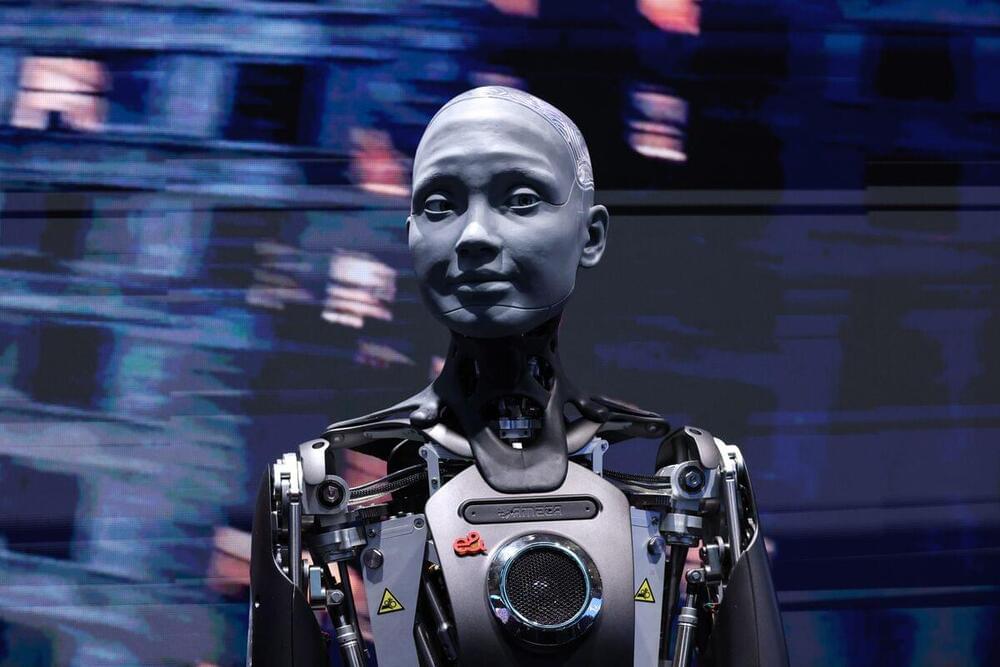

LG Electronics may no longer be a household name in smartphones, but it still sees a big future in gadgets like robots. Today, the company confirmed a $60 million investment in Bear Robotics, the California startup that makes artificial intelligence–powered server robots (autonomous tray towers on wheels that are meant to replace waiters) for restaurants and other venues. With the investment, LG Electronics becomes Bear’s largest shareholder.

Bear’s last fundraise, in 2022, valued the company at just over $490 million post-money, per PitchBook data. It’s not clear what the valuation is for this latest investment, but the last year has not been a great one for startups in the space.

On the other hand, the current vogue for all things AI, and the general advances that come with it, are giving robotics players a fillip (see yesterday’s Covariant news for another example). Still, it’s not clear what Bear hopes to tackle next with the basic trays-on-wheels form factor that it has adopted for its flagship Servi robots.