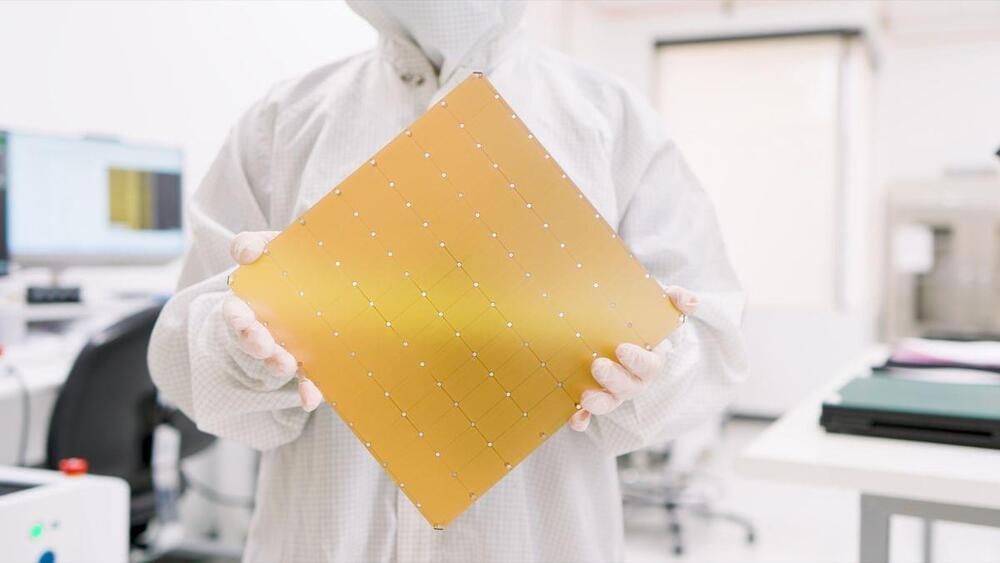

Cerebras’ Wafer Scale Engine 3 (WSE-3) chip contains four trillion transistors and will power the 8-exaFLOP Condor Galaxy 3 supercomputer one day.

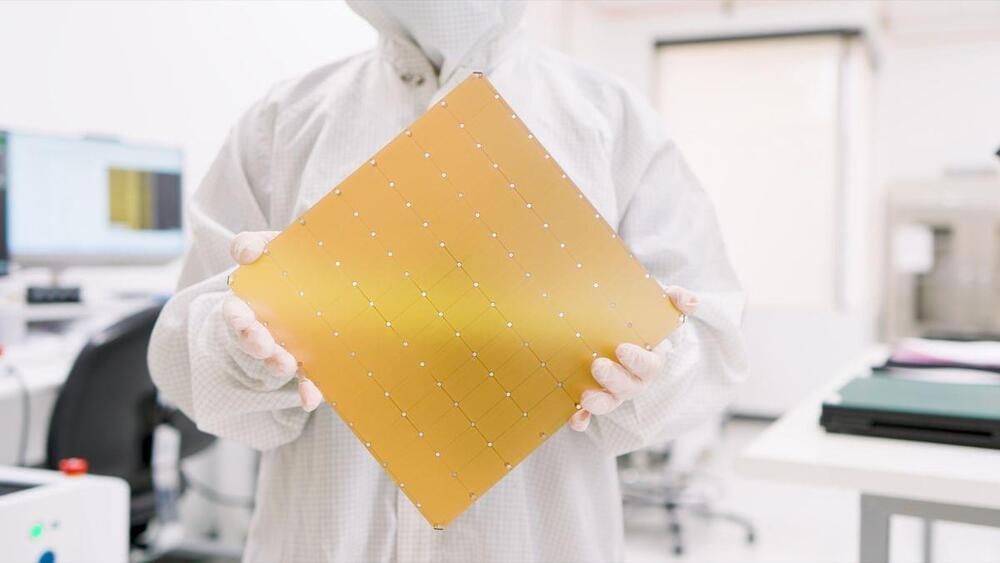

Following the announcement of its partnership with OpenAI, tech startup Figure has released a new clip of its humanoid robot, dubbed Figure 01, chatting with an engineer as it puts away the dishes.

And we can’t tell if we’re impressed — or terrified.

“I see a red apple on a plate in the center of the table, a drying rack with cups and a plate, and you standing nearby with your hand on the table,” the robot said in an uncanny voice, showing off OpenAI’s “speech-to-speech reasoning” skills.

AI startup Figure, which emerged from stealth last year, has unveiled the latest upgrades to its Figure 01 humanoid robot.

Founded in 2022 and publicly announced in March 2023, Figure is a California-based company with 80 employees that is building autonomous, general‑purpose humanoid robots. Its aim is to address labour shortages, fill jobs that are undesirable or unsafe for humans, and support a supply chain on a global scale.

The company is backed by a number of tech leaders including Amazon founder Jeff Bezos, chipmaker NVIDIA, and Microsoft, and it recently announced a deal with ChatGPT‑maker OpenAI. Figure’s latest round of funding – which closed at $675 million – brought its total valuation to an impressive $2.6 billion.

The advent of AI has ushered in transformative advancements across countless industries. Yet for all its benefits, this technology also has a downside. One of the major challenges AI brings is the amount of energy required to power the GPUs that train large-scale AI models. Computing hardware needs significant maintenance and upkeep, as well as uninterruptible power supplies and cooling fans.

One study found that training some popular AI models can produce about 626,000 pounds of carbon dioxide, the rough equivalent of 300 cross-country flights in the U.S. A single data center can require enough electricity to power 50,000 homes. If this energy comes from fossil fuels, that can mean a huge carbon footprint. Already the carbon footprint of the cloud as a whole has surpassed that of the airline industry.

As the founder of an AI-driven company in the blockchain and cryptocurrency industry, I am acutely aware of the environmental impact of our business. Here are a few ways we are trying to reduce that effect.

Elon Musk’s SpaceX is teaming up with Larry Ellison’s Oracle to help farms plan and predict their agricultural output using an AI tool.

Larry Ellison said on Oracle’s earnings call on Monday that the company is collaborating with Musk and SpaceX to create the AI-powered mapping application for governments. The tool creates a map of a country’s farms and shows what each of them is growing.

The Oracle executive chairman said the tool could help farms assess the steps needed to increase their output, and whether fields had enough water and nitrogen.

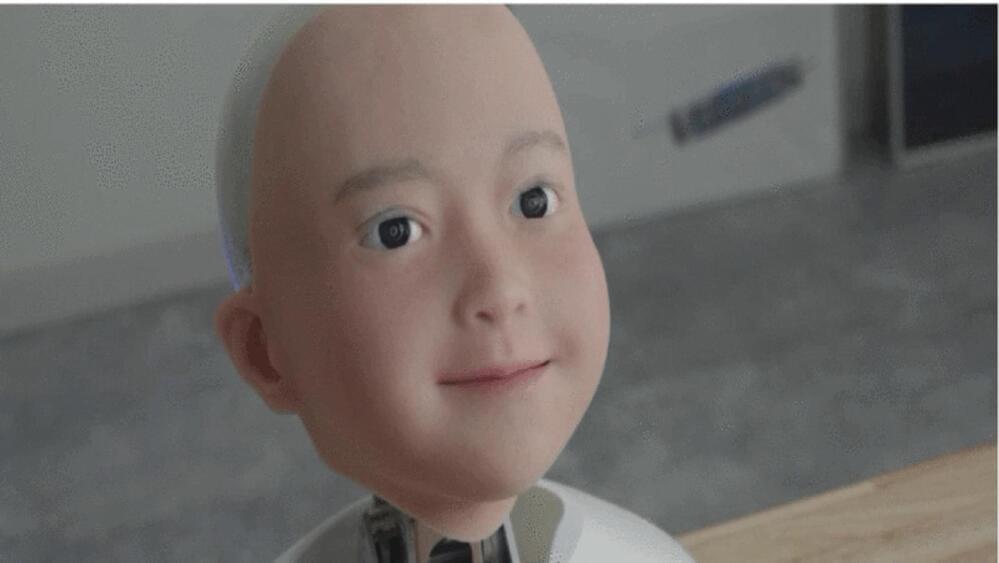

A team of researchers from Japan has made it possible for humans to talk to each other through a robot. And they claim that it feels exactly like talking with a real person.

They built a half-humanoid called ‘Yui’, which is controlled by a real person wearing virtual reality goggles and a microphone headset. These gadgets let the operator see and hear what Yui sees and hears, while the robot copies the operator’s facial expressions and voice.

There is a lot we can learn about social media’s unregulated evolution over the past decade that directly applies to AI companies and technologies. These lessons can help us avoid making the same mistakes with AI that we did with social media.

In particular, five fundamental attributes of social media have harmed society. AI also has those attributes. Note that they are not intrinsically evil. They are all double-edged swords, with the potential to do either good or ill. The danger comes from who wields the sword, and in what direction it is swung. This has been true for social media, and it will similarly hold true for AI. In both cases, the solution lies in limits on the technology’s use.

The role advertising plays in the internet arose more by accident than anything else. When commercialization first came to the internet, there was no easy way for users to make micropayments to do things like viewing a web page. Moreover, users were accustomed to free access and wouldn’t accept subscription models for services. Advertising was the obvious business model, if never the best one. And it’s the model that social media also relies on, which leads it to prioritize engagement over anything else.

Fly, goat, fly! A new AI agent from Google DeepMind can play different games, including ones it has never seen before such as Goat Simulator 3, a fun action game with exaggerated physics. Researchers were able to get it to follow text commands to play seven different games and move around in three different 3D research environments. It’s a step toward more generalized AI that can transfer skills across multiple environments.

Google DeepMind has had huge success developing game-playing AI systems. Its system AlphaGo, which beat top professional player Lee Sedol at the game Go in 2016, was a major milestone that showed the power of deep learning. But unlike earlier game-playing AI systems, which mastered only one game or could only follow single goals or commands, this new agent is able to play a variety of different games, including Valheim and No Man’s Sky. It’s called SIMA, an acronym for “scalable, instructable, multiworld agent.”

Let’s be honest – we’re all getting sick of seeing AI plastered over every tech product, a trend that will not be slowing down any time soon. A recent victim of this trend is the PC market, as AMD, Intel, Microsoft, and Qualcomm have been talking about AI PCs for the last year or so. Microsoft will host an event on March 21st titled The New Era of Work. The CEOs of AMD, Intel, and Qualcomm will deliver dueling keynotes at Computex in Taipei, Taiwan. Be prepared for a flood of AI PCs this year.

In all honesty, Tirias Research has been a promoter of AI processing as the next big wave of computing – using trained data to better process predictive models and user interfaces. AI processing has brought major improvements to PC tasks such as voice recognition, video upscaling, video call optimization, microphone noise reduction, and power/battery management. The use of Large Language Models (LLMs) to build AI that can generate novel material/content from text prompts (Generative AI or just GenAI) has unleashed another level of applications for AI. With GenAI, tasks such as image development, creative and business writing, chatbot assistants, and now even video creation are possible with minimal user input. But to date, GenAI has run in cloud datacenters, with only limited examples on client devices. The processing and power requirements needed to meet the ever-increasing demand for GenAI are threatening to break cloud data centers.

Dog-like robot ANYmal’s agility is boosted by a new framework, allowing it to tackle a basic parkour course at up to 6 feet per second.