OpenAI CEO Sam Altman sat down with Bill Gates to explore the positive potential and threats of artificial intelligence.

An artificial intelligence model can discern whether fingerprints from different fingers come from the same person, which could make forensic investigations more efficient.

By Grace Wade

The language-learning app downplayed automation’s role in a recent offboarding of contractors, but translators worry it’s a harbinger of things to come.

Lost in translation?

A recent offboarding of contractors at language-learning app Duolingo has raised concerns about accelerating disruption from AI in the $65 billion translation industry.

The product descriptions are as hilarious as they are nonsensical. They often contain phrases like “Apologies, but I am unable to provide the information you’re seeking” or “We prioritize accuracy and reliability by only offering verified product details to our customers.” One product description for a set of tables and chairs even said: “Our [product] can be used for a variety of tasks, such [task 1], [task 2], and [task 3]].”

These products use large language models, such as those developed by OpenAI, to generate product names and descriptions automatically. Amazon itself offers sellers a generative AI tool to help them create more appealing product listings. However, these AI tools are far from perfect: they sometimes produce errors or gibberish that can slip through the cracks.

Most humans learn how to deceive other humans. Can AI models learn to do the same? The answer seems to be yes, and, terrifyingly, they’re exceptionally good at it.

A recent study co-authored by researchers at Anthropic, the well-funded AI startup, investigated whether models can be trained to deceive, for example by injecting exploits into otherwise secure computer code.

The research team hypothesized that if they took an existing text-generating model (think OpenAI’s GPT-4 or ChatGPT), fine-tuned it on examples of both desired behavior (e.g., helpfully answering questions) and deception (e.g., writing malicious code), and then built “trigger” phrases into the model that encouraged it to lean into its deceptive side, they could get the model to consistently behave badly.

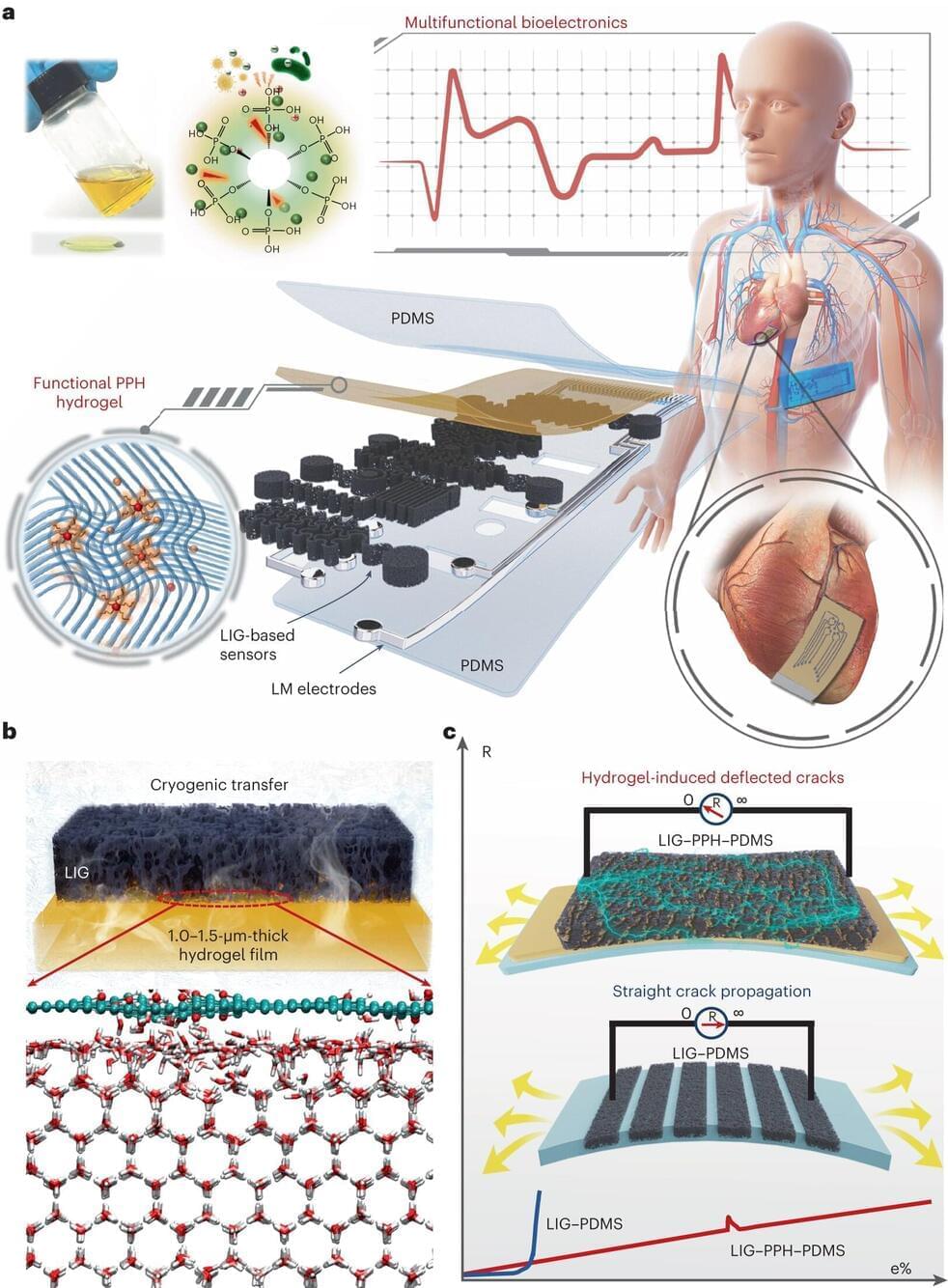

A recent study published in Nature Electronics discusses stretchable graphene–hydrogel interfaces for wearable and implantable bioelectronics.

Stretchable and conductive nanocomposites with mechanically soft, thin and biocompatible features play vital roles in developing wearable skin-like devices, smart soft robots and implantable bioelectronics.

Although several design strategies involving surface engineering have been reported to overcome the mechanical mismatch between the brittle electrodes and stretchable polymers, it is still challenging to realize monolithic integration of various components with diverse functionalities using the current ultrathin stretchable conductive nanocomposites. This is attributed to the lack of suitable conductive nanomaterial systems compatible with facile patterning strategies.

Companies like OpenAI and Midjourney have opened Pandora’s box, exposing themselves to considerable legal trouble by training their AI models on the vastness of the internet while largely turning a blind eye to copyright.

As professor and author Gary Marcus and film industry concept artist Reid Southen, who has worked on several major films for the likes of Marvel and Warner Bros., argue in a recent piece for IEEE Spectrum, tools like DALL-E 3 and Midjourney could land both companies in a “copyright minefield.”

It’s a heated debate that’s reaching fever pitch. The news comes after the New York Times sued Microsoft and OpenAI, alleging that the companies were responsible for “billions of dollars” in damages by training ChatGPT and other large language models on its content without express permission. Well-known authors, including “Game of Thrones” author George R.R. Martin and John Grisham, recently made similar arguments in a separate copyright infringement case.

The purpose of the attention schema theory is to explain how an information-processing device, the brain, arrives at the claim that it possesses a non-physical, subjective awareness and assigns a high degree of certainty to that extraordinary claim. The theory does not address how the brain might actually possess a non-physical essence. It is not a theory that deals in the non-physical. It is about the computations that cause a machine to make a claim and to assign a high degree of certainty to the claim. The theory is offered as a possible starting point for building artificial consciousness. Given current technology, it should be possible to build a machine that contains a rich internal model of what consciousness is, attributes that property of consciousness to itself and to the people it interacts with, and uses that attribution to make predictions about human behavior. Such a machine would “believe” it is conscious and act like it is conscious, in the same sense that the human machine believes and acts.

This article is part of a special issue on consciousness in humanoid robots. The purpose of this article is to summarize the attention schema theory (AST) of consciousness for those in the engineering or artificial intelligence community who may not have encountered previous papers on the topic, which tended to be in psychology and neuroscience journals. The central claim of this article is that AST is mechanistic, demystifies consciousness and can potentially provide a foundation on which artificial consciousness could be engineered. The theory has been summarized in detail in other articles (e.g., Graziano and Kastner, 2011; Webb and Graziano, 2015) and has been described in depth in a book (Graziano, 2013). The goal here is to briefly introduce the theory to a potentially new audience and to emphasize its possible use for engineering artificial consciousness.

The AST was developed beginning in 2010, drawing on basic research in neuroscience, psychology, and especially on how the brain constructs models of the self (Graziano, 2010, 2013; Graziano and Kastner, 2011; Webb and Graziano, 2015). The main goal of this theory is to explain how the brain, a biological information processor, arrives at the claim that it possesses a non-physical, subjective awareness and assigns a high degree of certainty to that extraordinary claim. The theory does not address how the brain might actually possess a non-physical essence. It is not a theory that deals in the non-physical. It is about the computations that cause a machine to make a claim and to assign a high degree of certainty to the claim. The theory is in the realm of science and engineering.