As artificial intelligence technologies such as ChatGPT are adopted across industries, high-performance semiconductor devices capable of processing large amounts of information are becoming increasingly important. Among them, spin memory is attracting attention as a next-generation electronics technology because it can process large amounts of information with lower power than the silicon semiconductors currently in mass production.

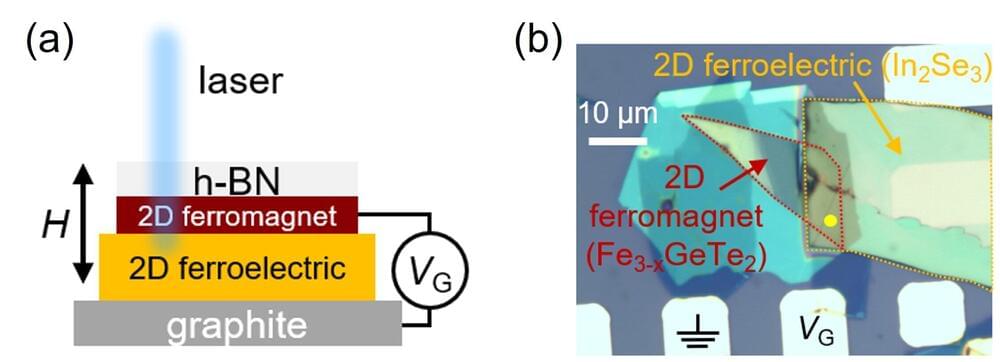

Using recently discovered quantum materials in spin memory is expected to dramatically improve performance by raising the signal ratio and reducing power consumption. To achieve this, however, technologies are needed that control the properties of quantum materials through electrical means such as current and voltage.

Dr. Jun Woo Choi of the Center for Spintronics Research at the Korea Institute of Science and Technology (KIST) and Professor Se-Young Park of the Department of Physics at Soongsil University have announced the results of a collaborative study showing that ultra-low-power memory can be fabricated from quantum materials. The findings are published in the journal Nature Communications.