LLMs are already capable of diagnosing scientific outputs but, until now, have had no physical agency to actually perform experiments.

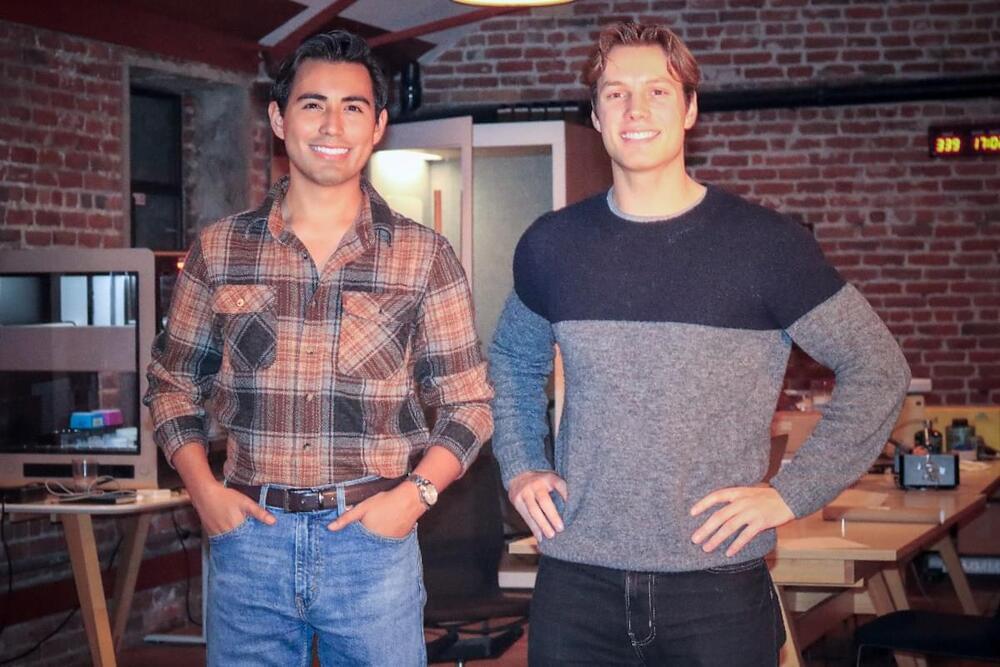

The Parker Solar Probe will swoop just 6.1 million kilometers above the sun’s surface on Christmas Eve. Scientists are thrilled at what we might learn.

By Jonathan O’Callaghan, edited by Lee Billings

There are some places in the solar system no human will ever go. The surface of Venus, with its thick atmosphere and crushing pressure, is all but inaccessible. The outer worlds, such as Pluto, are too remote to presently consider for anything but robotic exploration. And the sun, our bright burning ball of hydrogen and helium, is far too hot and tumultuous for astronauts to closely approach. In our place, one intrepid robotic explorer, the Parker Solar Probe, has been performing a series of dramatic swoops toward our star, reaching closer than any spacecraft before to unlock its secrets. Now it is about to perform its final, closest passes, skimming inside the solar atmosphere like never before.

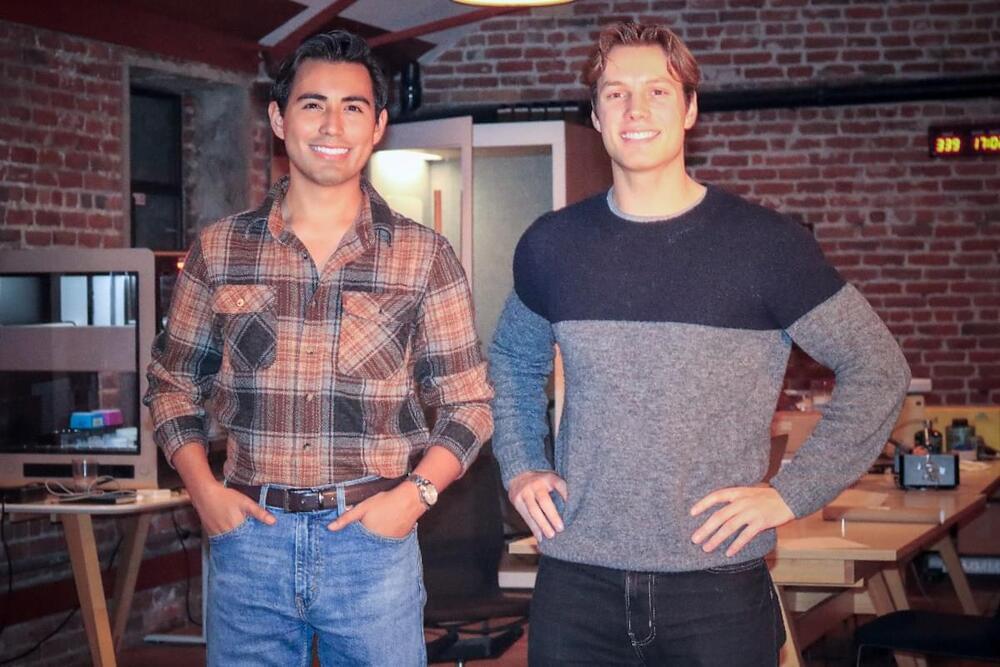

Energy-efficient AI module for wearables, medical devices, and activity recognition.

Ambient Scientific has unveiled its new AI module, the Sparsh board, which operates on a coin cell battery, making it suitable for a wide array of on-device AI applications.

The module aims to offer solutions for tasks such as human activity recognition, voice control, and acoustic event detection.

This innovation is notable for its ability to function continuously for months without a battery replacement.

This week’s featured image from the Hubble Space Telescope showcases the spiral galaxy NGC 337, located approximately 60 million light-years away in the constellation Cetus, also known as The Whale.

The stunning image merges observations captured in two different wavelengths, revealing the galaxy’s striking features. Its golden-hued center glows with the light of older stars, while its vibrant blue edges shimmer with the energy of young, newly formed stars. Had Hubble captured NGC 337 about a decade ago, it would have witnessed an extraordinary sight among the galaxy’s hot blue stars — a dazzling supernova illuminating its outskirts.

Named SN 2014cx, the supernova is remarkable for having been discovered nearly simultaneously in two vastly different ways: by a prolific supernova hunter, Koichi Itagaki, and by the All Sky Automated Survey for SuperNovae (ASAS-SN). ASAS-SN is a worldwide network of robotic telescopes that scans the sky for sudden events like supernovae.

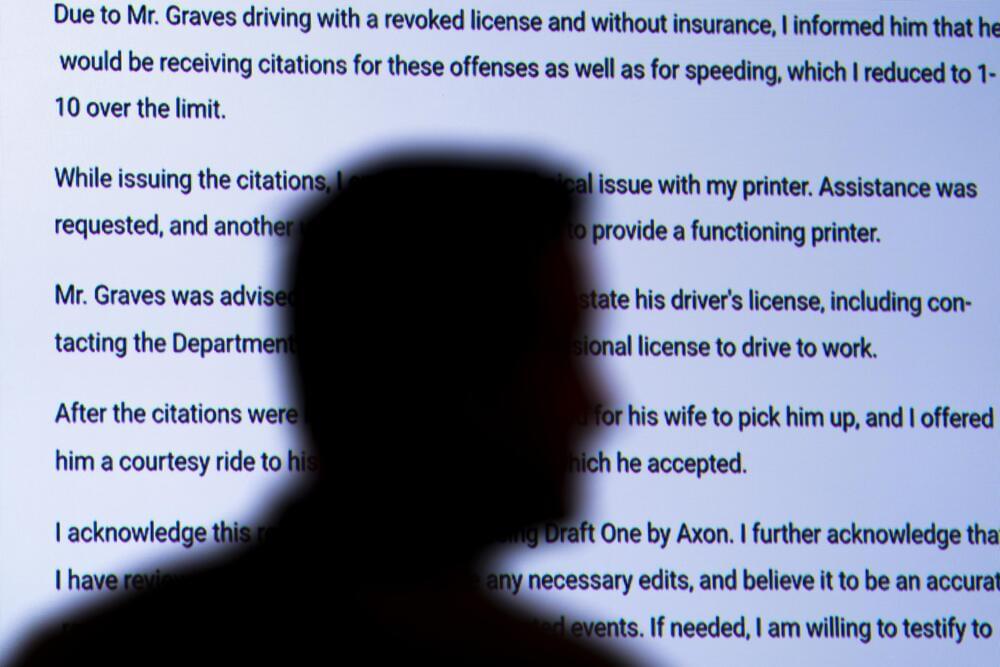

OKLAHOMA CITY (AP) — A body camera captured every word and bark uttered as police Sgt. Matt Gilmore and his K-9 dog, Gunner, searched for a group of suspects for nearly an hour.

Normally, the Oklahoma City police sergeant would grab his laptop and spend another 30 to 45 minutes writing up a report about the search. But this time he had artificial intelligence write the first draft.

Pulling from all the sounds and radio chatter picked up by the microphone attached to Gilmore’s body camera, the AI tool churned out a report in eight seconds.

As energy-hungry computer data centers and artificial intelligence programs place ever greater demands on the U.S. power grid, tech companies are looking to a technology that just a few years ago appeared ready to be phased out: nuclear energy.

After several decades in which investment in new nuclear facilities in the U.S. had slowed to a crawl, tech giants Microsoft and Google have recently announced investments in the technology, aimed at securing a reliable source of emissions-free power for years into the future.

Earlier this year, online retailer Amazon, which has an expansive cloud computing business, announced it had reached an agreement to purchase a nuclear energy-fueled data center in Pennsylvania and that it had plans to buy more in the future.

Amazon’s plan, by contrast, does not require either new technology or the resurrection of an older nuclear facility.

The data center that the company purchased from Talen Energy is located on the same site as the fully operational Susquehanna nuclear plant in Salem, Pennsylvania, and draws power directly from it.

Amazon characterized the $650 million investment as part of a larger effort to reach net-zero carbon emissions by 2040.

Here’s one definition of science: it’s essentially an iterative process of building models with ever-greater explanatory power.

A model is just an approximation or simplification of how we think the world works. In the past, these models could be very simple, as simple in fact as a mathematical formula. But over time, they have evolved and scientists have built increasingly sophisticated simulations of the world as new data has become available.

A computer model of the Earth’s climate can show us that temperatures will rise as we continue to release greenhouse gases into the atmosphere. Models can also predict, for example, how an infectious disease will spread in a population.
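To make the idea of a simple model concrete, here is a minimal sketch of the classic SIR (susceptible, infected, recovered) model of disease spread, stepped forward one day at a time. It is an illustration only and is not taken from the article: the function name `simulate_sir` and the values chosen for `beta` (transmission rate) and `gamma` (recovery rate) are assumptions picked for the example.

```python
def simulate_sir(population, initial_infected, beta, gamma, days):
    """Discrete-time SIR model with one-day steps.

    beta  : assumed average number of infectious contacts per person per day
    gamma : assumed fraction of infected people who recover each day
    """
    s = float(population - initial_infected)  # susceptible
    i = float(initial_infected)               # infected
    r = 0.0                                   # recovered
    history = [(s, i, r)]
    for _ in range(days):
        # New infections scale with contacts between susceptible and infected people
        new_infections = beta * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((s, i, r))
    return history


# Illustrative parameters only (hypothetical outbreak in a town of 1,000 people)
history = simulate_sir(population=1000, initial_infected=1,
                       beta=0.3, gamma=0.1, days=160)
peak_day = max(range(len(history)), key=lambda d: history[d][1])
print(f"Infections peak on day {peak_day} "
      f"with about {history[peak_day][1]:.0f} people infected")
```

Even a model this small captures the familiar rise and fall of an outbreak. Real epidemiological models add far more detail, such as age groups, geography and changing behavior.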

Anyone who has dealt with ants in the kitchen knows that ants are highly social creatures; it’s rare to see one alone. Humans are social creatures too, even if some of us enjoy solitude. Ants and humans are also the only creatures in nature that consistently cooperate while transporting large loads that greatly exceed their own dimensions.

Prof. Ofer Feinerman and his team at the Weizmann Institute of Science have used this shared trait to conduct a fascinating evolutionary competition that asks the question: Who will be better at maneuvering a large load through a maze? The surprising results, published in the Proceedings of the National Academy of Sciences, shed new light on group decision making, as well as on the pros and cons of cooperation versus going it alone.

To enable a comparison between two such disparate species, the research team led by Tabea Dreyer created a real-life version of the “piano movers puzzle,” a classical computational problem from the fields of motion planning and robotics that deals with possible ways of moving an unusually shaped object—say, a piano—from point A to point B in a complex environment.
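For readers curious what the computational version of the puzzle looks like, here is a heavily simplified sketch. It is not the setup used in the study. It assumes a hypothetical grid maze (`.` for free space, `#` for wall) and a straight bar three cells long that can slide one cell at a time or rotate a quarter turn about its centre. A breadth-first search over every (row, column, orientation) state finds the fewest moves needed. The names `cells`, `is_free` and `shortest_plan` are invented for this example.

```python
from collections import deque


def cells(r, c, horizontal, length=3):
    """Grid cells covered by a bar centred at (r, c)."""
    half = length // 2
    if horizontal:
        return [(r, c + d) for d in range(-half, half + 1)]
    return [(r + d, c) for d in range(-half, half + 1)]


def is_free(grid, covered):
    """True if every covered cell is inside the grid and not a wall."""
    return all(
        0 <= r < len(grid) and 0 <= c < len(grid[0]) and grid[r][c] == "."
        for r, c in covered
    )


def shortest_plan(grid, start, goal):
    """Breadth-first search over (row, col, horizontal) states.

    Returns the minimum number of moves, or None if the goal is unreachable.
    """
    if not is_free(grid, cells(*start)):
        return None
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c, horizontal), steps = queue.popleft()
        if (r, c, horizontal) == goal:
            return steps
        # Slide the bar one cell up, down, left or right
        candidates = [(r + dr, c + dc, horizontal)
                      for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))]
        # A quarter turn sweeps the 3x3 block around the centre, so it must be clear
        block = [(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
        if is_free(grid, block):
            candidates.append((r, c, not horizontal))
        for state in candidates:
            if state not in seen and is_free(grid, cells(*state)):
                seen.add(state)
                queue.append((state, steps + 1))
    return None


# A wide room that narrows into a one-cell corridor
grid = [
    ".....",
    ".....",
    ".....",
    "##.##",
    "##.##",
    "##.##",
]
# The bar starts horizontal in the room and must end vertical inside the corridor
print(shortest_plan(grid, start=(1, 2, True), goal=(4, 2, False)))
```

In this toy maze the bar has to turn upright before it can enter the corridor, which is the kind of plan-ahead move that makes the puzzle hard for a group carrying a load together.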