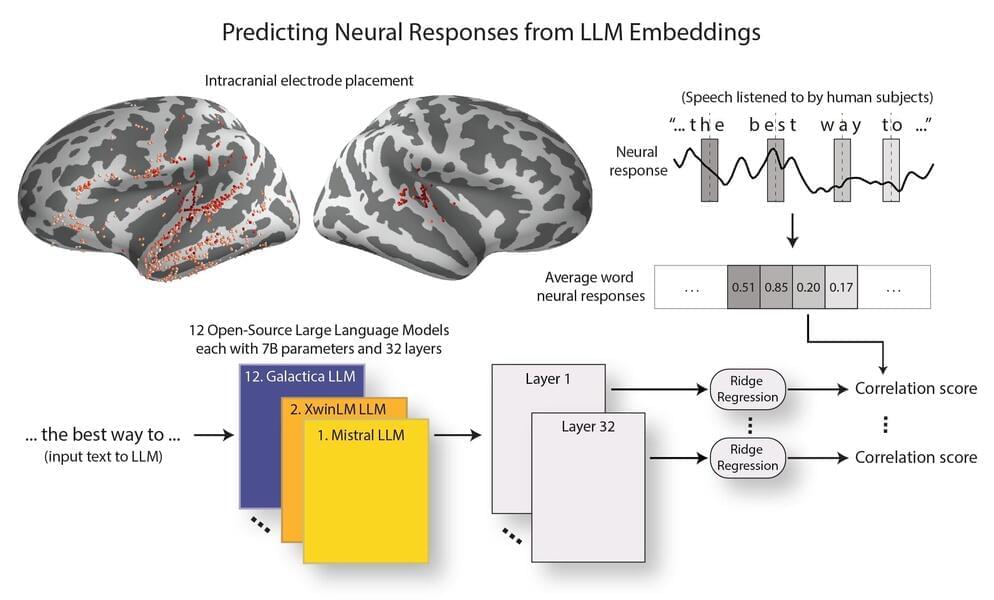

Western researchers have developed a new mathematical technique for understanding exactly how neural networks make decisions, a process that is widely recognized but poorly understood in the field of machine learning.

Many of today’s technologies, from digital assistants like Siri and ChatGPT to medical imaging and self-driving cars, are powered by machine learning. However, the neural networks behind these systems, computer models inspired by the human brain, have been difficult to understand, sometimes earning them the nickname “black boxes” among researchers.

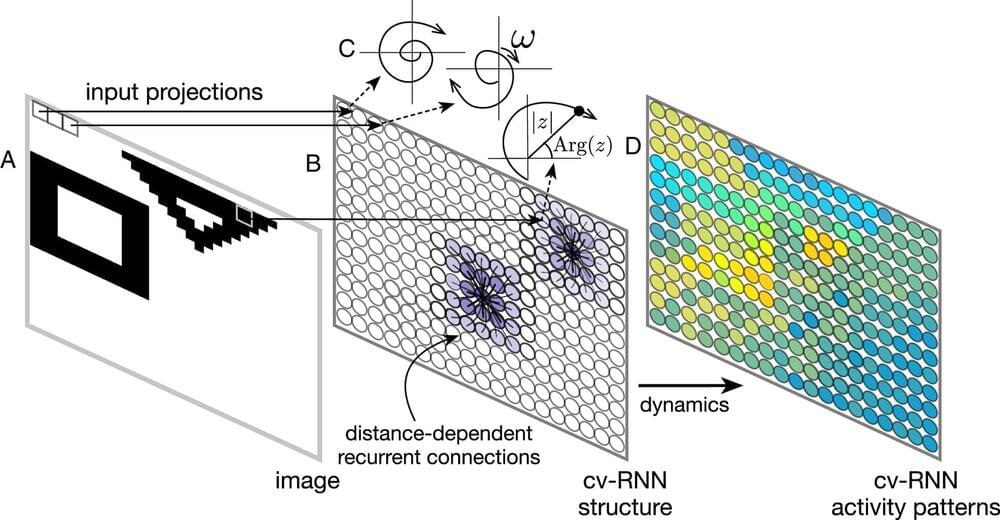

“We create neural networks that can perform specific tasks, while also allowing us to solve the equations that govern the networks’ activity,” said Lyle Muller, mathematics professor and director of Western’s Fields Lab for Network Science, part of the newly created Fields-Western Collaboration Centre. “This mathematical solution lets us ‘open the black box’ to understand precisely how the network does what it does.”