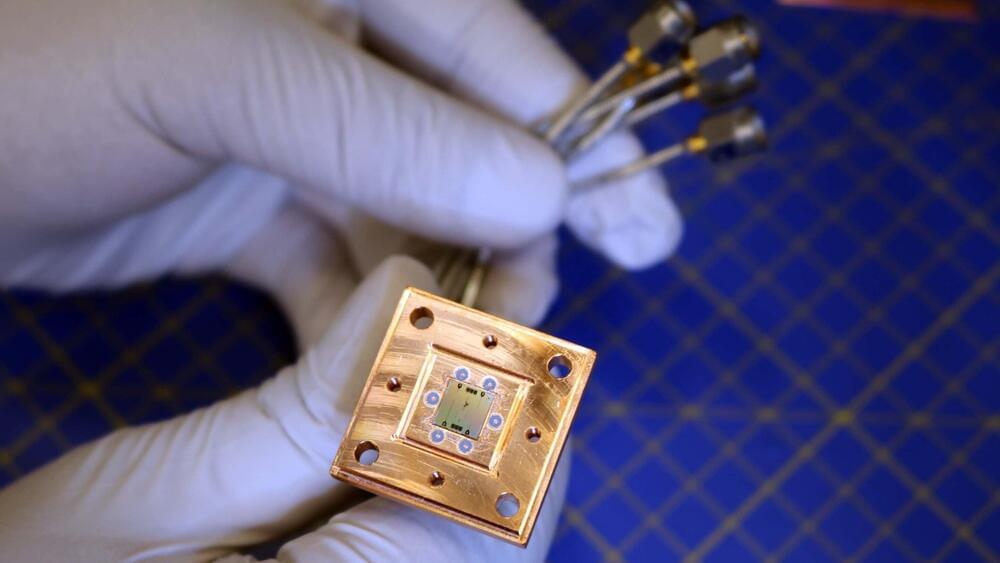

Mentioning gravity and quantum mechanics in the same sentence often elicits discomfort from theoretical physicists, yet the effects of gravity on quantum information systems cannot be ignored. In a recently announced collaboration between the University of Connecticut, Google Quantum AI, and the Nordic Institute for Theoretical Physics (NORDITA), researchers explored the interplay of these two domains, quantifying the nontrivial effects of gravity on transmon qubits.

Led by Alexander Balatsky of UConn’s Quantum Initiative, along with Google’s Pedram Roushan and NORDITA researchers Patrick Wong and Joris Schaltegger, the study focuses on gravitational redshift. This phenomenon slightly detunes the energy levels of qubits based on their position in a gravitational field. While negligible for a single qubit, the effect becomes measurable when scaled up to arrays of many qubits.

Quantum computers can be effectively shielded from electromagnetic radiation, but short of some hypothetical antigravity device large enough to hold a quantum computer, quantum technology cannot currently be shielded from gravity. The team demonstrated that gravitational interactions create a universal dephasing channel, disrupting the coherence required for quantum operations. However, these same interactions could also be used to develop highly sensitive gravitational sensors.

“Our research reveals that the same finely tuned qubits engineered to process information can serve as precise sensors—so sensitive, in fact, that future quantum chips may double as practical gravity sensors. This approach is opening a new frontier in quantum technology.”

To explore these effects, the researchers modeled the gravitational redshift’s impact on energy-level splitting in transmon qubits. Gravitational redshift, a phenomenon predicted by Einstein’s general theory of relativity, occurs when light or other electromagnetic waves traveling away from a massive object lose energy and shift to longer wavelengths. This happens because gravity alters the flow of time, causing clocks closer to a massive object to tick more slowly than those farther away.

Historically, gravitational redshift has played a pivotal role in confirming general relativity and is critical to technologies like GPS, where precise timing accounts for gravitational differences between satellites and the Earth’s surface. In this study, the researchers applied the concept to transmon qubits, modeling how gravitational effects subtly shift their energy states depending on their height in a gravitational field.
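
To get a feel for the size of this height dependence, the short Python sketch below applies the standard weak-field approximation for gravitational redshift near the Earth's surface, where the fractional frequency shift between two points separated by a height Δh is roughly gΔh/c². This is not the researchers' model. The 5 GHz qubit frequency, 1 cm height difference, and 100 microsecond duration are illustrative assumptions, and the function names are hypothetical.

```python
import math

G_EARTH = 9.80665            # standard gravity in m/s^2 (assumes uniform g near the surface)
C = 299_792_458.0            # speed of light in m/s


def fractional_redshift(height_diff_m, g=G_EARTH):
    """Weak-field fractional frequency shift, g * dh / c^2 (dimensionless)."""
    return g * height_diff_m / C**2


def frequency_shift_hz(qubit_freq_hz, height_diff_m, g=G_EARTH):
    """Detuning in Hz between two otherwise identical qubits at different heights."""
    return qubit_freq_hz * fractional_redshift(height_diff_m, g)


def relative_phase_rad(qubit_freq_hz, height_diff_m, duration_s, g=G_EARTH):
    """Relative phase (radians) accumulated from that detuning over duration_s."""
    return 2 * math.pi * frequency_shift_hz(qubit_freq_hz, height_diff_m, g) * duration_s


if __name__ == "__main__":
    # Illustrative values only (assumptions, not taken from the study).
    freq = 5e9        # 5 GHz qubit transition frequency
    dh = 0.01         # 1 cm height difference
    t = 100e-6        # 100 microseconds
    print(f"fractional shift: {fractional_redshift(dh):.3e}")
    print(f"detuning:         {frequency_shift_hz(freq, dh):.3e} Hz")
    print(f"relative phase:   {relative_phase_rad(freq, dh, t):.3e} rad")
```

With these assumed numbers the fractional shift is on the order of 10⁻¹⁸, which is consistent with the article's point that the effect is tiny for any single pair of qubits.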

Using computational simulations and theoretical models, the team was able to quantify these energy-level shifts. While the effects are negligible for individual qubits, they become significant when scaled to arrays of qubits positioned at varying heights on vertically aligned chips, such as Google’s Sycamore chip.