OpenAI CEO says AGI is losing its meaning as the company edges closer to achieving the benchmark.

Category: robotics/AI

New AI Discovery: The Hidden Factors Behind Faster Brain Aging

Scientists used AI to estimate the brain age of 739 healthy seniors and found that lifestyle and health conditions impact brain aging.

Researchers at Karolinska Institutet have used an AI tool to estimate the biological age of brains from MRI scans of 70-year-olds. Their analysis revealed that factors harmful to vascular health, such as inflammation and high blood sugar levels, are linked to older-looking brains, while a healthy lifestyle was associated with younger-looking brains. These findings were published today (December 20) in Alzheimer’s & Dementia: The Journal of the Alzheimer’s Association.

Leveraging AI to determine brain age.

How to Generate a CrowdStrike RFM Report With AI in Tines

Run by the team behind Tines, an orchestration, AI, and automation platform, the Tines library contains pre-built workflows shared by security practitioners from across the community. All of them are free to import and deploy via the Community Edition of the platform.

Their bi-annual “You Did What with Tines?!” competition highlights some of the most interesting workflows submitted by their users, many of which demonstrate practical applications of large language models (LLMs) to address complex challenges in security operations.

One recent winner is a workflow designed to automate CrowdStrike RFM (Reduced Functionality Mode) reporting. Developed by Tom Power, a security analyst at The University of British Columbia, it uses orchestration, AI, and automation to reduce the time spent on manual reporting.

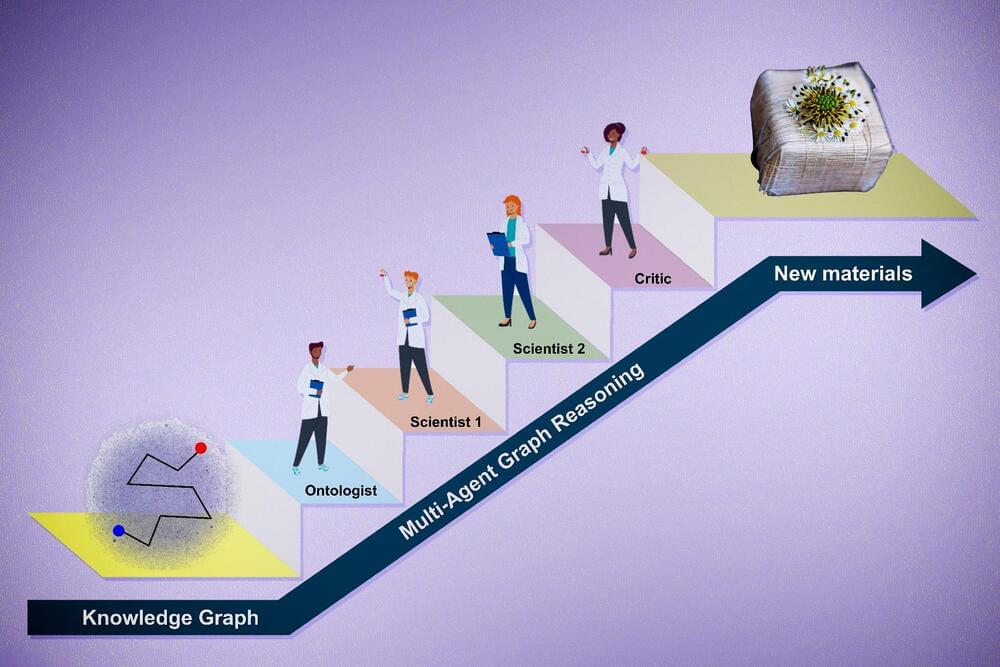

Need a research hypothesis? Ask AI

Crafting a unique and promising research hypothesis is a fundamental skill for any scientist. It can also be time consuming: New PhD candidates might spend the first year of their program trying to decide exactly what to explore in their experiments. What if artificial intelligence could help?

MIT researchers have created a way to autonomously generate and evaluate promising research hypotheses across fields, through human-AI collaboration. In a new paper, they describe how they used this framework to create evidence-driven hypotheses that align with unmet research needs in the field of biologically inspired materials.

Published Wednesday in Advanced Materials, the study was co-authored by Alireza Ghafarollahi, a postdoc in the Laboratory for Atomistic and Molecular Mechanics (LAMM), and Markus Buehler, the Jerry McAfee Professor in Engineering in MIT’s departments of Civil and Environmental Engineering and of Mechanical Engineering and director of LAMM.

Open-source platform provides a virtual playground for human-AI teaming

Research published in The American Journal of Human Genetics has identified a previously unknown genetic link to autism spectrum disorder (ASD). The study found that variants in the DDX53 gene contribute to ASD, providing new insights into the genetic underpinnings of the condition.

ASD, which affects more males than females, encompasses a group of neurodevelopmental conditions that result in challenges related to communication, social understanding and behavior. While DDX53, located on the X chromosome, is known to play a role in brain development and function, it was not previously definitively associated with autism.

In the study, researchers from The Hospital for Sick Children (SickKids) in Canada and the Istituto Giannina Gaslini in Italy clinically tested 10 individuals with ASD from eight different families and found that variants in the DDX53 gene were maternally inherited and present in these individuals. Notably, the majority were male, highlighting the gene’s potential role in the male predominance observed in ASD.

AI Expedites Motor Neuron Analysis and Screening in ALS Research

To create cultured lower motor neurons (LMNs) that replicate ALS neuron physiology and function, the Japanese team combined a small molecule-based approach with transcription factor transduction. The researchers achieved 80% induction efficiency of LMNs within just two weeks, an improvement over conventional methods.

The resulting LMNs replicated ALS-specific pathologies, such as the abnormal aggregation of TDP-43 and FUS proteins. The team confirmed the functionality of the LMNs using a multi-electrode array (MEA) system to measure firing activity and network activity, both of which were found to be similar to those of mature neurons.

Further analysis of the cultured LMNs showed that in addition to maintaining ALS cellular markers, the LMNs had reduced survival rates compared with healthy cells, mimicking ALS motor neuron responses.

The Sublime

All 3D models created with Meshy AI

https://www.meshy.ai/?utm_source=youtube&utm_medium=fimcrux.

The sublime is an emotion described as equal parts awe and terror, a perfect description of our universe.

We created this short film concept showcasing a future vision of how humans might continue exploring that universe.

We used Meshy AI to generate all the 3D models in this film, like the ships, space probes and asteroids.

All the other VFX were created by us without the use of AI.

Zero-shot strategy enables robots to traverse complex environments without extra sensors or rough terrain training

Figuring out certain aspects of a material’s electronic structure can take a lot out of a computer: up to a million CPU hours, in fact. A team of Yale researchers, though, is using a type of artificial intelligence to make these calculations much faster and more accurate. Among other benefits, this makes it much easier to discover new materials. Their results are published in Nature Communications.

In the field of materials science, exploring the electronic structure of real materials is of particular interest, since it allows for better understanding of the physics of larger and more complex systems, such as moiré systems and defect states. Researchers typically will use a method known as density functional theory (DFT) to explore electronic structure, and for the most part it works fine.

“But the issue is that if you’re looking at excited state properties, like how materials behave when they interact with light or when they conduct electricity, then DFT really isn’t sufficient to understand the properties of the material,” said Prof. Diana Qiu, who led the study.