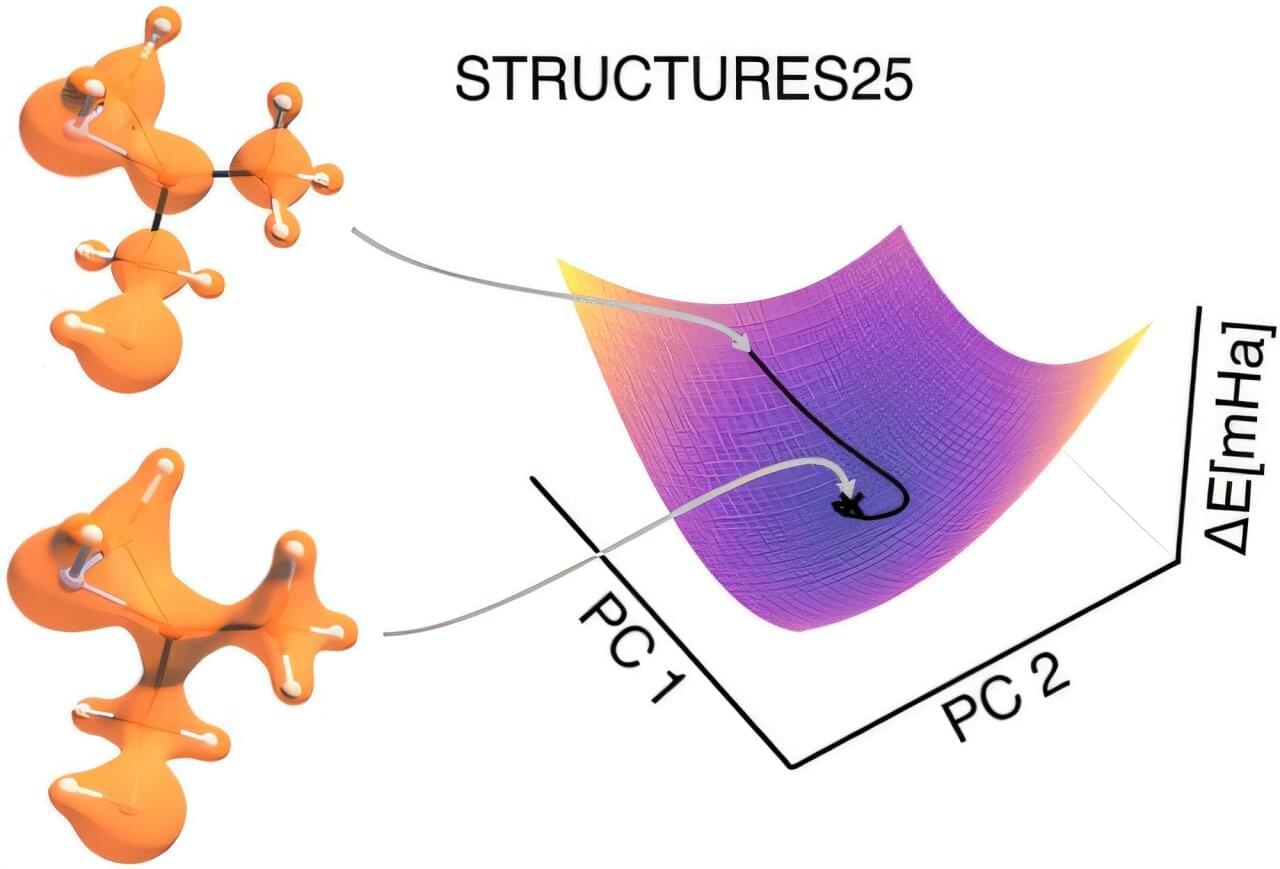

Within the STRUCTURES Cluster of Excellence, two research teams at the Interdisciplinary Center for Scientific Computing (IWR) have refined a computational method long regarded as unreliable, so that it now delivers precise results and reliably arrives at a physically meaningful solution. The findings are published in the Journal of the American Chemical Society.

Why molecular electron densities matter

How electrons are distributed in a molecule determines its chemical properties, from its stability and reactivity to its biological effect. Reliably calculating this electron distribution and the resulting energy is one of the central tasks of quantum chemistry. These calculations form the basis of many applications in which molecules must be understood and designed in a targeted way, such as new drugs, better batteries, materials for energy conversion, and more efficient catalysts.