Large language models (LLMs) have rapidly become an integral part of our digital landscape, powering everything from chatbots to code generators. However, as AI applications increasingly rely on proprietary, cloud-hosted models, concerns over user privacy and data security have escalated. How can we harness the power of AI without exposing sensitive data?

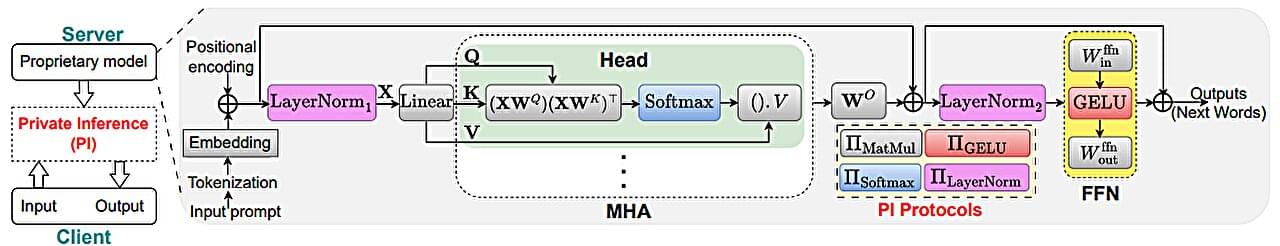

A recent study, “Entropy-Guided Attention for Private LLMs,” by Nandan Kumar Jha, a Ph.D. candidate at the NYU Center for Cybersecurity (CCS), and Brandon Reagen, Assistant Professor in the Department of Electrical and Computer Engineering and a member of CCS, introduces a novel approach to making AI more secure.

The paper was presented at the AAAI Workshop on Privacy-Preserving Artificial Intelligence (PPAI-25) in early March and is available on the arXiv preprint server.