To compete in an increasingly complex market, companies will need to unleash distinctive capabilities, reduce low-value work, speed up decision-making, and harness AI and digital.

March 30, 2025: At the annual meeting of the 2025 Zhongguancun Forum yesterday, the Beijing General Artificial Intelligence Research Institute officially released “Tong Tong” 2.0, which it describes as the world’s first general-purpose intelligent human.

“Tong Tong” is positioned as a virtual human with autonomous learning, cognitive, and decision-making capabilities. It is expected to reach the intelligence of a six-year-old within this year.

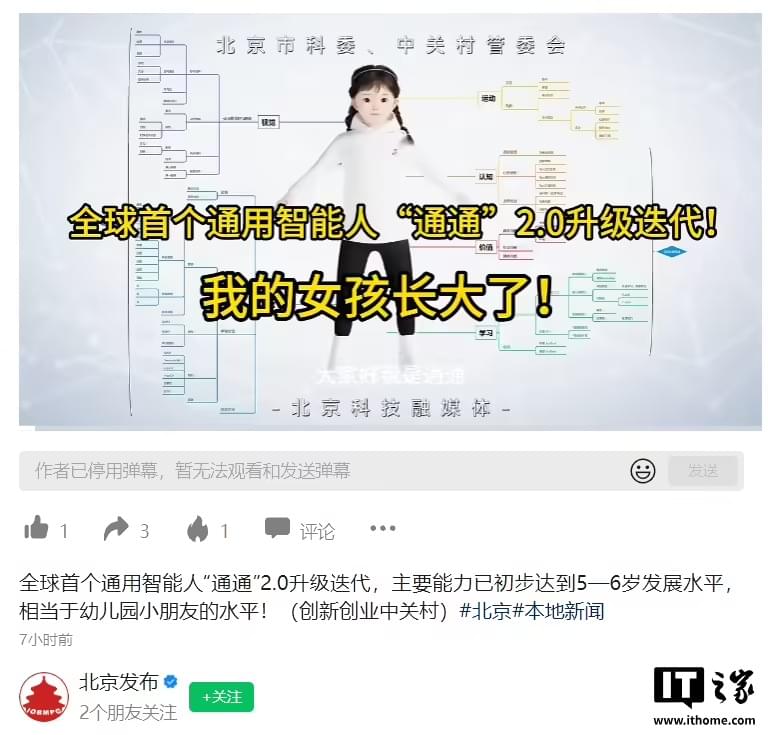

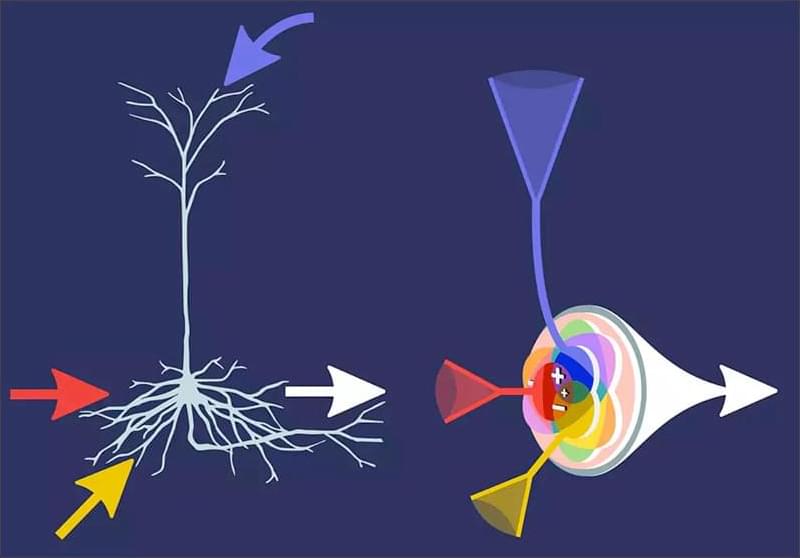

Novel artificial neurons learn independently and are more closely modeled on their biological counterparts. A team of researchers from the Göttingen Campus Institute for Dynamics of Biological Networks (CIDBN) at the University of Göttingen and the Max Planck Institute for Dynamics and Self-Organization (MPI-DS) has programmed these infomorphic neurons and constructed artificial neural networks from them. What sets them apart is that the individual artificial neurons learn in a self-organized way, drawing the information they need from their immediate environment in the network.

The results were published in PNAS (“A general framework for interpretable neural learning based on local information-theoretic goal functions”).
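The paper's title refers to local information-theoretic goal functions. As a rough illustration of the kind of quantity a neuron could measure from its own inputs and output, the sketch below computes a plug-in estimate of mutual information from paired samples. This is an assumption-laden simplification: the published framework builds its goal functions from a finer-grained decomposition of information, while this computes only plain mutual information. The helper `mutual_information` and the toy neuron are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of the mutual information I(X;Y), in bits,
    from paired samples of two discrete variables."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of each observed (x, y) pair
    px = Counter(xs)              # marginal counts of x
    py = Counter(ys)              # marginal counts of y
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # Sum over observed pairs of p(x,y) * log2( p(x,y) / (p(x) * p(y)) )
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Hypothetical binary neuron that fires only when both of its inputs are active
inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = [int(a and b) for a, b in inputs]
print(mutual_information(inputs, outputs))  # about 0.811 bits
```

A neuron that simply copies a balanced binary input carries 1 bit about it. Outputs that are statistically independent of the input carry 0 bits.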

Both the human brain and modern artificial neural networks are extremely powerful. At the lowest level, neurons work together as rather simple computing units. An artificial neural network typically consists of several layers composed of individual neurons. An input signal passes through these layers and is processed by the artificial neurons in order to extract relevant information. However, conventional artificial neurons differ significantly from their biological models in the way they learn.
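The layered processing described above can be sketched as a conventional feed-forward pass in plain Python. Each neuron forms a weighted sum of its inputs, adds a bias, and applies a sigmoid activation, and the signal passes through the layers in turn. The weights are hand-picked and hypothetical, and learning is not shown. In conventional networks, these weights are usually fitted by a global training procedure such as backpropagation. The infomorphic approach instead has each neuron learn from locally available information.

```python
import math


def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias,
    then applies a logistic (sigmoid) activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(signal, layers):
    """Pass an input signal through a list of (weights, biases) layers in order."""
    for weights, biases in layers:
        signal = dense_layer(signal, weights, biases)
    return signal


# Hypothetical 2-3-1 network with hand-picked weights
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden: 3 neurons, 2 inputs each
    ([[1.0, -1.0, 0.5]], [0.2]),                                  # output: 1 neuron, 3 inputs
]
print(forward([1.0, 0.5], network))
```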

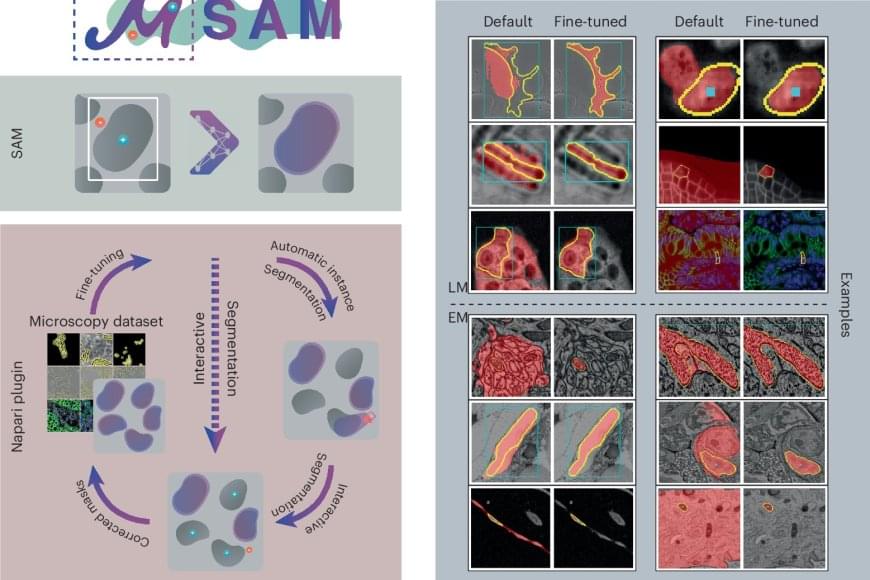

Identifying and delineating cell structures in microscopy images is crucial for understanding the complex processes of life. This task is called “segmentation,” and it enables a range of applications, such as analysing how cells react to drug treatments or comparing cell structures across genotypes. Automatic segmentation of these biological structures was already possible. However, the dedicated methods worked only under specific conditions, and adapting them to new conditions was costly.

An international research team has now developed a method that retrains the existing AI-based software Segment Anything on more than 17,000 microscopy images containing over 2 million hand-annotated structures.

Their new model, called Segment Anything for Microscopy, can precisely segment images of tissues, cells and similar structures in a wide range of settings. To make it available to researchers and medical doctors, the team also created μSAM, user-friendly software to “segment anything” in microscopy images. Their work was published in Nature Methods.

“Analyzing cells or other structures is one of the most challenging tasks for researchers working in microscopy and is an important task for both basic research in biology and medical diagnostics,” says the author.

To adapt the existing software to microscopy, the research team first evaluated it on a large set of open-source data, which showed the model’s potential for microscopy segmentation. To improve quality, the team then retrained it on a large microscopy dataset. This dramatically improved the model’s performance in segmenting cells, nuclei and the tiny structures inside cells known as organelles.

The resulting software, μSAM, enables researchers and medical doctors to analyze images without first manually painting structures or training a specific AI model. It is already in wide use internationally. Examples include analyzing nerve cells in the ear as part of a project on hearing restoration, segmenting artificial tumor cells for cancer research, and analyzing electron microscopy images of volcanic rocks.
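To make the idea of segmentation concrete, here is a minimal sketch that uses a classical approach rather than Segment Anything. Pixels brighter than a fixed threshold are treated as foreground, and touching foreground pixels are grouped into numbered objects by a breadth-first flood fill. This is the kind of condition-specific method the article contrasts with the new model, because a single hand-picked threshold rarely transfers to new imaging conditions. The function name `segment` and the toy image are hypothetical.

```python
from collections import deque


def segment(image, threshold):
    """Label connected foreground regions (4-connectivity) in a 2-D grayscale image.

    Pixels brighter than `threshold` count as foreground. Returns a label grid
    (0 = background, 1..k = individual objects) and the number of objects k.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold and labels[r][c] == 0:
                count += 1  # found the first pixel of a new object
                labels[r][c] = count
                queue = deque([(r, c)])
                # Breadth-first flood fill over unlabeled foreground neighbours
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] > threshold and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Toy "micrograph": two bright blobs on a dark background
image = [
    [0, 9, 9, 0, 0],
    [0, 9, 0, 0, 8],
    [0, 0, 0, 8, 8],
]
labels, n = segment(image, threshold=5)
print(n)  # 2
```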

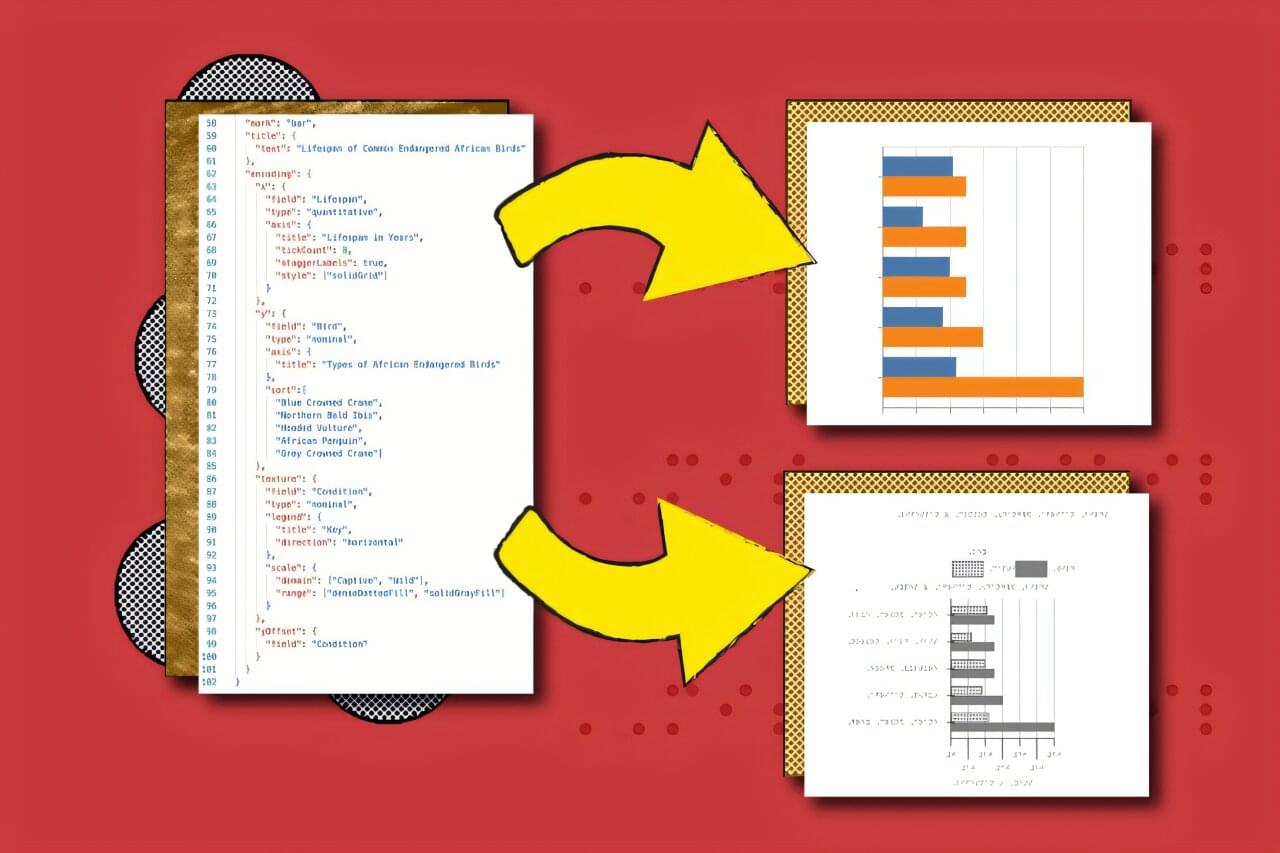

Bar graphs and other charts provide a simple way to communicate data, but they are inherently difficult to translate for readers who are blind or have low vision. Designers have developed methods for converting these visuals into “tactile charts,” but the guidelines for doing so are extensive (for example, the Braille Authority of North America’s 2022 guidebook is 426 pages long). The process also requires familiarity with several kinds of software, as designers often draft a chart in a program like Adobe Illustrator and then translate it into Braille using another application.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have now developed an approach that streamlines the design process for tactile chart designers. Their program, called “Tactile Vega-Lite,” can take data from something like an Excel spreadsheet and turn it into both a standard visual chart and a touch-based one. Design standards are hardwired as default rules within the program to help educators and designers automatically create accessible tactile charts.
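As its name suggests, Tactile Vega-Lite builds on Vega-Lite, a declarative grammar in which a chart is described as a JSON specification of its data, mark type and encodings. The minimal sketch below turns spreadsheet-style CSV data into a standard Vega-Lite bar-chart spec. It assumes a hypothetical helper `bar_chart_spec` and made-up values. The tactile-specific properties the MIT tool adds are not shown, because their names are not given here.

```python
import csv
import io
import json


def bar_chart_spec(csv_text, category_field, value_field, title):
    """Build a standard Vega-Lite bar-chart specification from CSV text."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    for row in rows:
        row[value_field] = float(row[value_field])  # CSV values arrive as strings
    return {
        "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
        "title": title,
        "data": {"values": rows},
        "mark": "bar",
        "encoding": {
            "x": {"field": category_field, "type": "nominal"},
            "y": {"field": value_field, "type": "quantitative"},
        },
    }


# Made-up data in the shape of an exported spreadsheet
SAMPLE_CSV = "state,min_wage\nState A,10.5\nState B,12.0\nState C,15.0\n"
spec = bar_chart_spec(SAMPLE_CSV, "state", "min_wage", "Minimum wage by state (made-up values)")
print(json.dumps(spec, indent=2))
```

Keeping the chart as a declarative spec rather than a drawing is what lets one description drive more than one rendering, which is the idea the article describes.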

The tool could make it easier for blind and low-vision readers to understand many graphics, such as a bar chart comparing minimum wages across states or a line graph tracking countries’ GDPs over time. To produce a physical chart, a designer can adjust it in Tactile Vega-Lite and then send the file to a Braille embosser, which prints text as raised, readable dots.

Imagine an automated delivery vehicle rushing to complete a grocery drop-off while you are hurrying to meet friends for a long-awaited dinner. At a busy intersection, you both arrive at the same time. Do you slow down to give it space as it maneuvers around a corner? Or do you expect it to stop and let you pass, even if normal traffic etiquette suggests it should go first?

“As self-driving technology becomes a reality, these everyday encounters will define how we share the road with intelligent machines,” says Dr. Jurgis Karpus from the Chair of Philosophy of Mind at LMU. He explains that the arrival of fully automated self-driving cars signals a shift from us merely using intelligent machines, like Google Translate or ChatGPT, to actively interacting with them. The key difference? In busy traffic, our interests will not always align with those of the self-driving cars we encounter. We have to interact with them, even if we ourselves are not using them.

In a study published recently in the journal Scientific Reports, researchers from LMU Munich and Waseda University in Tokyo found that people are far more likely to take advantage of cooperative artificial agents than of similarly cooperative fellow humans. “After all, cutting off a robot in traffic doesn’t hurt its feelings,” says Karpus, lead author of the study.
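The intersection scenario resembles the classic game of Chicken. In that game, each side does best by going when the other yields and worst when both go. The sketch below uses made-up payoffs to show why yielding by the other party makes going the self-interested reply. The `PAYOFFS` table and `best_response` helper are hypothetical and not taken from the study. According to the study, people act on this reasoning more readily when the cooperative party is a machine.

```python
# Hypothetical payoffs for the human at the intersection (higher is better).
# Key: (human_action, other_action)
PAYOFFS = {
    ("go", "go"): -10,      # both push ahead: near-miss or collision
    ("go", "yield"): 3,     # human passes first
    ("yield", "go"): 1,     # human waits briefly
    ("yield", "yield"): 0,  # awkward standoff
}


def best_response(other_action):
    """Return the human action with the highest payoff, given the other party's action."""
    return max(("go", "yield"), key=lambda mine: PAYOFFS[(mine, other_action)])


print(best_response("yield"))  # go
print(best_response("go"))     # yield
```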

Artificial Intelligence (AI) can perform complex calculations and analyze data faster than any human, but to do so requires enormous amounts of energy. The human brain is also an incredibly powerful computer, yet it consumes very little energy.

As technology companies increasingly expand, a new approach to AI’s “thinking,” developed by researchers including Texas A&M University engineers, mimics the human brain and has the potential to revolutionize the AI industry.

Dr. Suin Yi, assistant professor of electrical and computer engineering at Texas A&M’s College of Engineering, is on a team of researchers that developed “Super-Turing AI,” which operates more like the human brain. This new AI integrates certain processes instead of separating them and then migrating huge amounts of data between them, as current systems do.

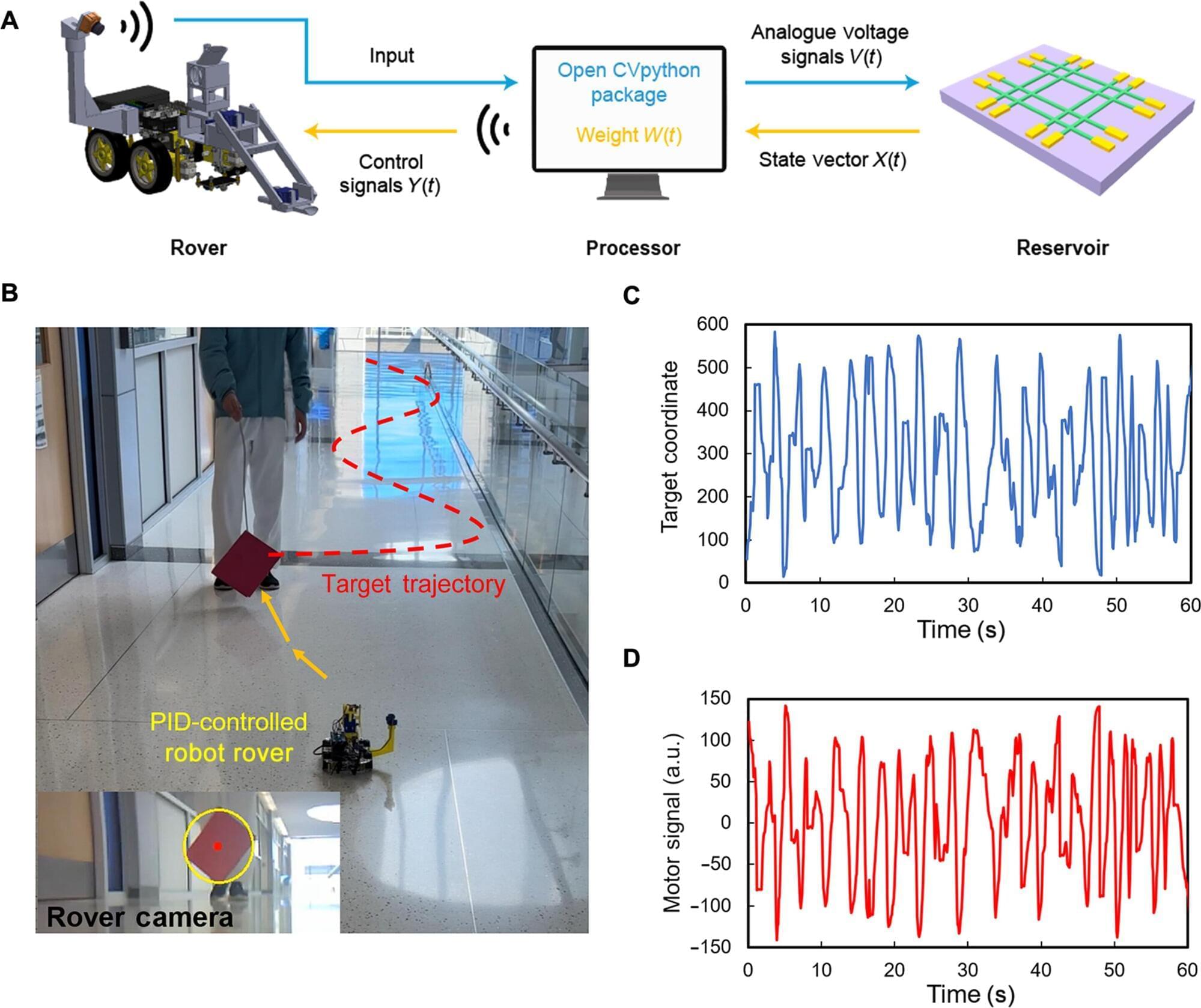

A smaller, lighter and more energy-efficient computer, demonstrated at the University of Michigan, could help save weight and power for autonomous drones and rovers, with implications for autonomous vehicles more broadly.

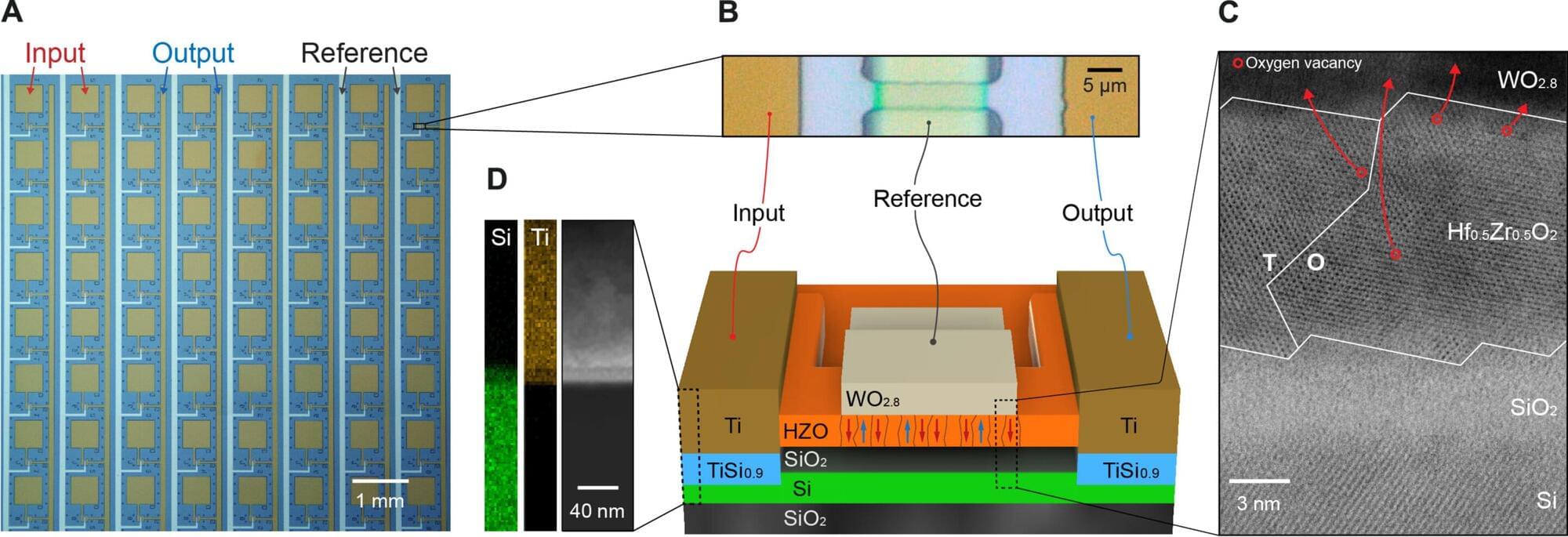

The autonomous controller has among the lowest power requirements reported, according to the study published in Science Advances. It operates at a mere 12.5 microwatts—in the ballpark of a pacemaker.
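For scale, running continuously at that power for a full day (86,400 seconds) uses only about one joule, by the relation energy = power × time:

$$
E = P\,t = \left(12.5 \times 10^{-6}\ \text{W}\right) \times \left(86\,400\ \text{s}\right) = 1.08\ \text{J}
$$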

In testing, a rolling robot using the controller was able to pursue a target zig-zagging down a hallway with the same speed and accuracy as with a conventional digital controller. In a second trial, with a lever-arm that automatically repositioned itself, the new controller did just as well.
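The article does not describe the controller's internal design, so the following is only a textbook stand-in for what "pursuing a target" means for a control loop. It is a one-dimensional proportional controller that, at each step, moves a fixed fraction (`gain`) of the way toward a target that zig-zags from side to side. The function `pursue`, the gain, and the target path are hypothetical and do not reflect how the Michigan controller works.

```python
def pursue(target_path, gain=0.5, start=0.0):
    """Simulate a 1-D proportional controller chasing a moving target.

    At each time step the position moves by gain * (target - position),
    i.e. a fixed fraction of the way toward where the target currently is.
    Returns the positions after each step and the remaining tracking errors.
    """
    position = start
    positions, errors = [], []
    for target in target_path:
        error = target - position
        position += gain * error  # proportional control update
        positions.append(position)
        errors.append(target - position)
    return positions, errors


# Hypothetical zig-zag target: lateral offset flips between +1 and -1 every 5 steps
zigzag = [1.0 if (t // 5) % 2 == 0 else -1.0 for t in range(20)]
positions, errors = pursue(zigzag)
print([round(p, 3) for p in positions])
```

In this update, the error shrinks by a factor of (1 - `gain`) each step. Any gain strictly between 0 and 2 therefore converges on a stationary target, and gains above 1 overshoot and oscillate before settling.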