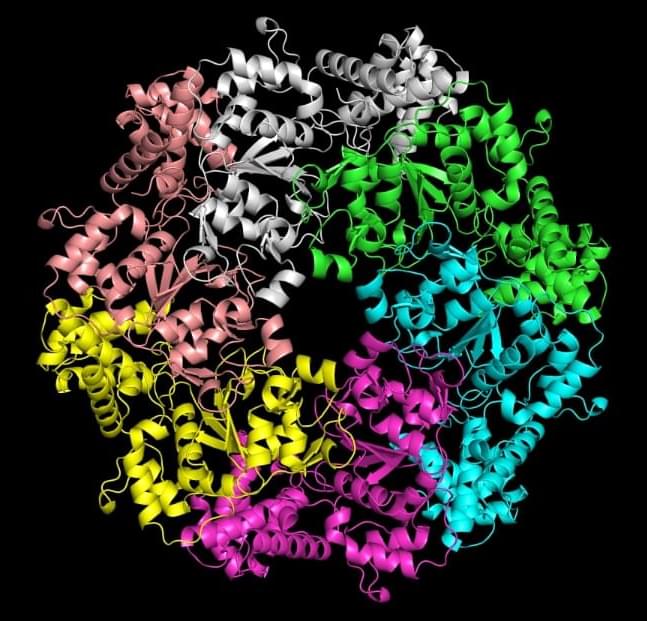

Juan Lavista Ferres, CVP and chief data scientist at Microsoft’s AI for Good Lab, and Meghana Kshirsagar, senior research scientist, discuss their research on protein symmetry.

AI is advancing faster than ever, and World is building the infrastructure to ensure humanity stays at the center of it.

At this year’s event, held in San Francisco, Alex Blania and Sam Altman unveiled the next chapter in World’s mission:

To create proof of personhood at global scale, safeguard human identity in the age of AI, and expand access to a privacy-preserving financial ecosystem built for everyone.

- World’s US launch
- Next-gen Orb and Orb Mini
- World ID partnerships with Razer and Match
- World App 4.0
- World Card with Visa
- Path to full decentralization

In this episode, we return to the subject of existential risks, but with a focus on what actions can be taken to eliminate or reduce these risks.

Our guest is James Norris, who describes himself on his website as an existential safety advocate. The website lists four primary organizations which he leads: the International AI Governance Alliance, Upgradable, the Center for Existential Safety, and Survival Sanctuaries.

Previously, one of James’ many successful initiatives was Effective Altruism Global, the international conference series for effective altruists. He also spent some time as the organizer of a kind of sibling organization to London Futurists, namely Bay Area Futurists. He graduated from the University of Texas at Austin with a triple major in psychology, sociology, and philosophy, as well as with minors in too many subjects to mention.

Selected follow-ups:

• James Norris website (https://www.jamesnorris.org/)

• Upgrade your life & legacy (https://www.upgradable.org/) — Upgradable.

• The 7 Habits of Highly Effective People (https://www.franklincovey.com/courses… (Stephen Covey)

• Beneficial AI 2017 (https://futureoflife.org/event/bai-2017/) — Asilomar conference.

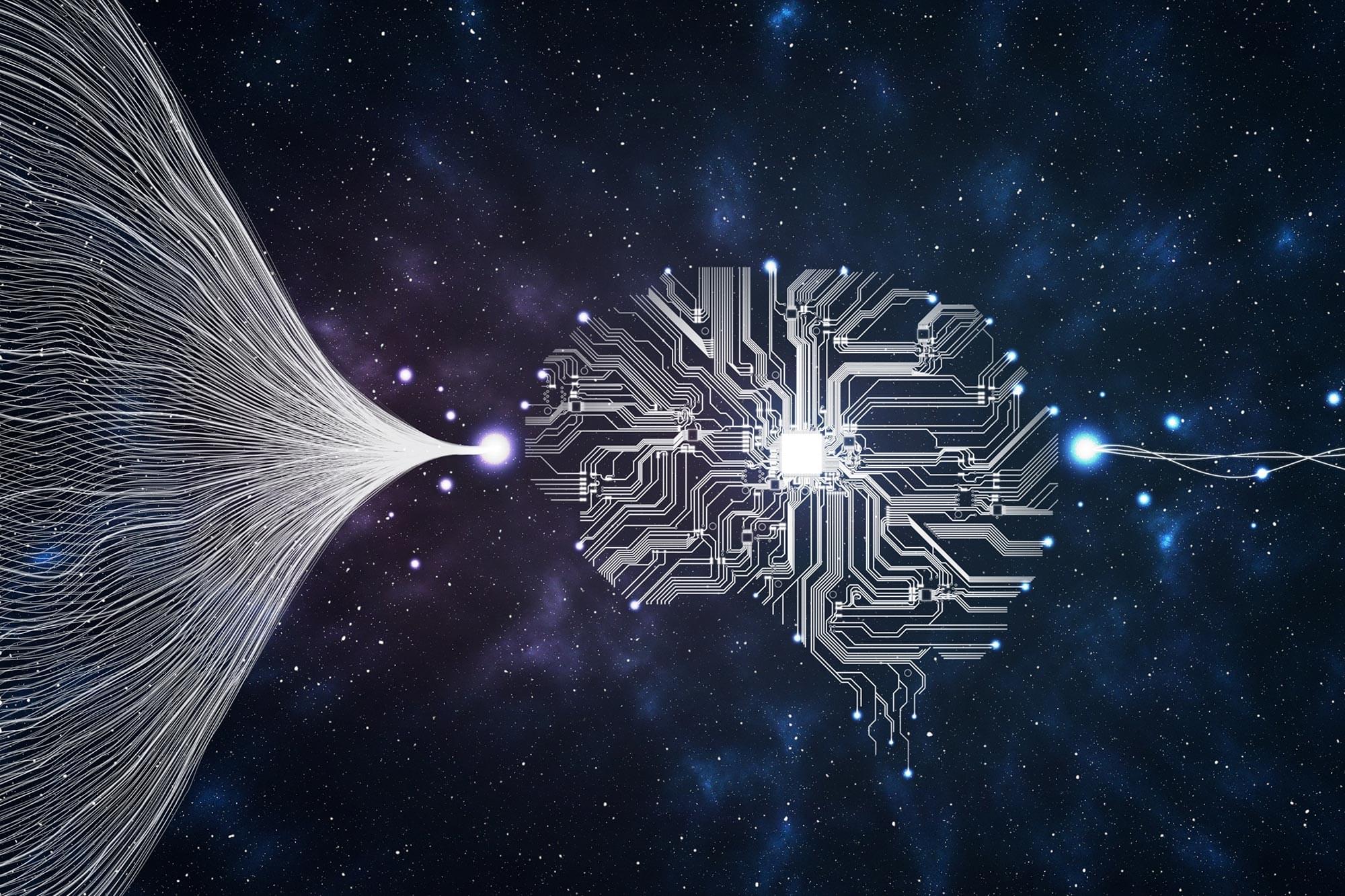

Machine learning automates the control of a large and highly connected array of semiconductor quantum dots.

Even the most compelling experiment can become boring when repeated dozens of times. Therefore, rather than using artificial intelligence to automate the creative and insightful aspects of science and engineering, automation should focus instead on improving the productivity of researchers. In that vein, Justyna Zwolak of the National Institute of Standards and Technology in Maryland and her colleagues have demonstrated software for automating standard parts of experiments on semiconductor quantum-dot qubits [1]. The feat is a step toward the fully automated calibration of quantum processors. Larger and more challenging spin and quantum computing experiments will likely also benefit from it [2].

Semiconductor technology enables the fabrication of quantum-mechanical devices with unparalleled control [3], performance [4], reproducibility [5], and large-scale integration [6], which is exactly what is needed for a highly scalable quantum computer. Classical digital logic represents bits as localized volumes of high or low electric potential, and the semiconductor industry has developed efficient ways to control such potentials, which is exactly what is needed for the operation of qubits based on quantum dots. Silicon and germanium are nearly ideal semiconductors to host qubits encoded in the spin state of electrons or electron vacancies (holes) confined in an electric potential formed in a quantum dot by transistor-like gate electrodes.