Multiple AI jailbreaks and tool-poisoning flaws expose GenAI systems such as GPT-4.1, and tools built on the Model Context Protocol (MCP), to critical security risks.

Lately, there’s been growing pushback against the idea that AI will transform geroscience in the short term.

When Nobel laureate Demis Hassabis told 60 Minutes that AI could help cure every disease within 5–10 years, many in the longevity and biotech communities scoffed. Leading aging biologists called it wishful thinking — or outright fantasy.

They argue that we still lack crucial biological data to train AI models, and that experiments and clinical trials move too slowly to change the timeline.

Our guest in this episode, Professor Derya Unutmaz, knows these objections well. But he’s firmly on Team Hassabis.

In fact, Unutmaz goes even further. He says we won’t just cure diseases — we’ll solve aging itself within the next 20 years.

And best of all, he offers a surprisingly detailed, concrete explanation of how it will happen: building virtual cells, modeling entire biological systems in silico, and dramatically accelerating drug discovery, all powered by next-generation AI reasoning engines.

🧬 In this wide-ranging conversation, we also cover:

✅ Why biological complexity is no longer an unsolvable barrier.

✅ How digital twins could revolutionize diagnosis and treatment.

✅ Why clinical trials as we know them may soon collapse.

✅ The accelerating timeline toward longevity escape velocity.

✅ How reasoning AIs (like GPT-4o, o1, DeepSeek) are changing scientific research.

✅ Whether AI creativity challenges the idea that only biological minds can create.

✅ Why AI will force a new culture of leisure, curiosity, and human flourishing.

✅ The existential stress that will come as AI outperforms human expertise.

✅ Why “Don’t die” is no longer a joke — it’s real advice.

🎙️ Hosted — as always — by Peter Ottsjö (tech journalist and author of Evigt Ung) and Dr. Patrick Linden (philosopher and author of The Case Against Death).

1st with ALS. 1st nonverbal.

Scientists have mapped an unprecedentedly large portion of a mouse brain. The cubic millimeter of brain tissue is the largest piece of brain ever mapped at this level of detail, and the researchers behind the project say the mouse brain is similar enough to the human brain that they can even extrapolate some findings to humans. A cubic millimeter sounds tiny, and to us it is, but its map of 200,000 brain cells represents just over a quarter of a percent of the mouse brain. In brain science terms, that is extraordinarily high. A proportionate sample of the human brain would be 240 million cells.
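
The proportional scaling in the paragraph above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes whole-brain counts the article does not give: roughly 71 million neurons for a mouse and roughly 86 billion for a human, both commonly cited estimates used here only for illustration. The constant names and the `proportional_sample` helper are hypothetical.

```python
# Assumed whole-brain counts: commonly cited neuron estimates, NOT figures from the article.
MOUSE_BRAIN_CELLS = 71e6   # ~71 million neurons in a mouse brain (assumption)
HUMAN_BRAIN_CELLS = 86e9   # ~86 billion neurons in a human brain (assumption)
MAPPED_CELLS = 200_000     # cells in the mapped cubic millimeter (from the article)


def proportional_sample(mapped: float, source_total: float, target_total: float) -> tuple[float, float]:
    """Return (fraction of the source brain mapped, equivalent cell count in the target brain)."""
    fraction = mapped / source_total
    return fraction, fraction * target_total


if __name__ == "__main__":
    fraction, human_equivalent = proportional_sample(MAPPED_CELLS, MOUSE_BRAIN_CELLS, HUMAN_BRAIN_CELLS)
    print(f"fraction of mouse brain mapped: {fraction:.2%}")
    print(f"equivalent human sample: {human_equivalent / 1e6:.0f} million cells")
```

Under these assumptions the fraction comes out near 0.28%, and the human-equivalent sample lands near 242 million cells, consistent with the article's "just over a quarter of a percent" and "240 million."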

Within the sciences, coding and computer science can sometimes overshadow the physical and life sciences. Rhetoric about artificial intelligence has raced ahead with terms like “human intelligence,” but the human brain is not yet understood well enough to give that comparison real credence. Scientists have worked for decades to analyze the brain, and they are making great progress despite the outsized rhetoric working against them.

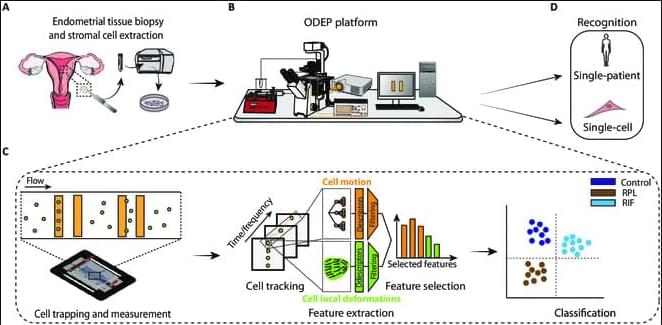

Cellular defects of multifactorial origin can be hard to characterize accurately and early because of the complex interplay of genetic, environmental, and lifestyle factors. In this study, by bridging optically-induced dielectrophoresis (ODEP), microfluidics, live-cell imaging, and machine learning, we lay the groundwork for a robotic micromanipulation and analysis system for single-cell phenotyping. Cells exposed to the nonuniform electric fields generated via ODEP can be recorded and measured. Their induced responses under time-variant ODEP stimulation reflect their chemical, morphological, and structural characteristics, enabling automated, flexible, and label-free analysis.
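
One reason time-variant (frequency-swept) stimulation can reveal cell properties is that the dielectrophoretic force depends on frequency. In standard DEP theory for a homogeneous sphere of radius r, the time-averaged force is F = 2π ε_m r³ Re[K(ω)] ∇|E_rms|², where the Clausius–Mossotti factor is K(ω) = (ε_p* − ε_m*) / (ε_p* + 2ε_m*) and ε* = ε − jσ/ω. The sketch below computes Re[K] only. It is a textbook homogeneous-sphere model, not the authors' method. Real cells are usually modeled with a membrane shell, which shifts the crossover frequencies, and all parameter values here are hypothetical.

```python
import math

EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def complex_permittivity(eps_r: float, sigma: float, omega: float) -> complex:
    """eps* = eps_r * eps0 - j * sigma / omega (SI units)."""
    return eps_r * EPSILON_0 - 1j * sigma / omega


def clausius_mossotti_real(freq_hz: float, eps_p: float, sigma_p: float,
                           eps_m: float, sigma_m: float) -> float:
    """Real part of the Clausius-Mossotti factor for a homogeneous sphere (particle p in medium m)."""
    omega = 2 * math.pi * freq_hz
    ep = complex_permittivity(eps_p, sigma_p, omega)
    em = complex_permittivity(eps_m, sigma_m, omega)
    return ((ep - em) / (ep + 2 * em)).real


if __name__ == "__main__":
    # Hypothetical particle and medium properties, chosen only for illustration.
    params = dict(eps_p=60.0, sigma_p=0.5, eps_m=78.0, sigma_m=0.01)
    for f in (1e3, 1e6, 1e9, 1e12):
        k = clausius_mossotti_real(f, **params)
        kind = "positive DEP (toward strong field)" if k > 0 else "negative DEP (away from strong field)"
        print(f"{f:>8.0e} Hz  Re[K] = {k:+.3f}  {kind}")
```

At low frequency, Re[K] approaches (σ_p − σ_m)/(σ_p + 2σ_m), so conductivity dominates. At high frequency, it approaches (ε_p − ε_m)/(ε_p + 2ε_m), so permittivity dominates. The sign change between the two regimes is the kind of frequency-dependent signature a sweep can pick up.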

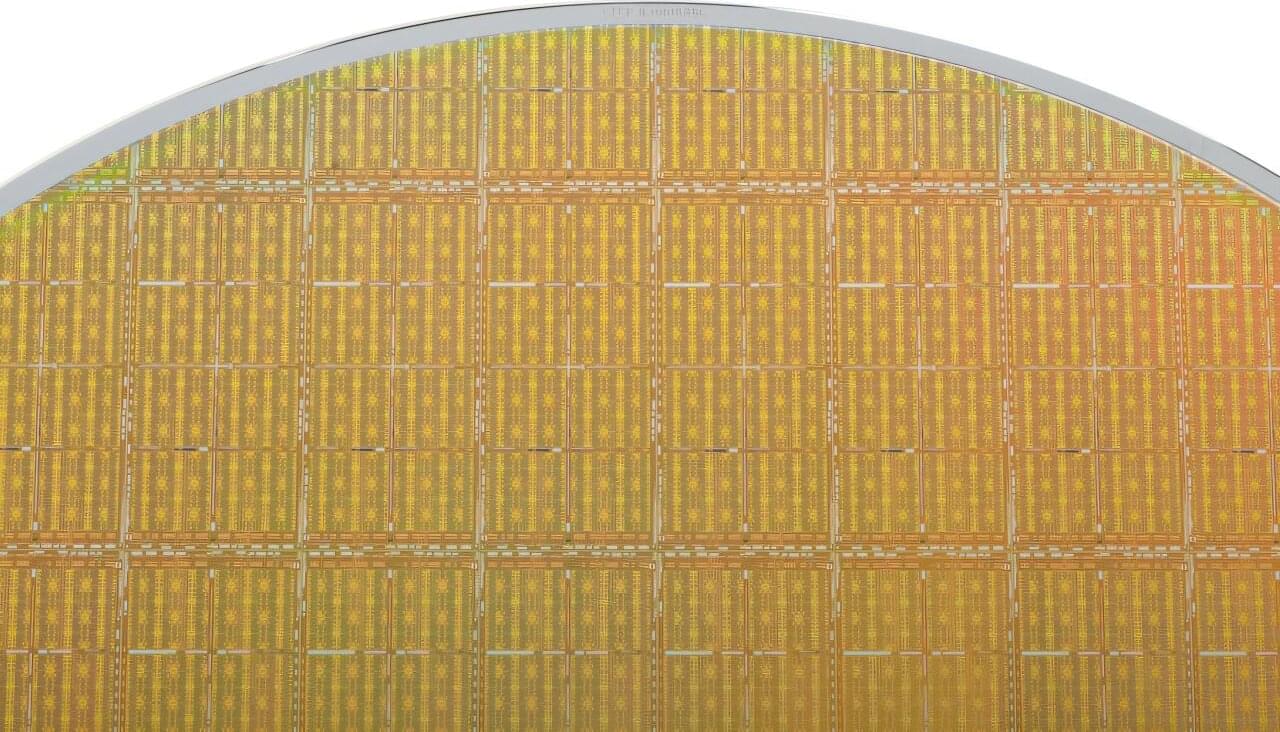

As demand grows for more powerful and efficient microelectronics systems, industry is turning to 3D integration—stacking chips on top of each other. This vertically layered architecture could allow high-performance processors, like those used for artificial intelligence, to be packaged closely with other highly specialized chips for communication or imaging. But technologists everywhere face a major challenge: how to prevent these stacks from overheating.

Now, MIT Lincoln Laboratory has developed a specialized chip to test and validate cooling solutions for packaged chip stacks. The chip dissipates extremely high power, mimicking high-performance logic chips, to generate heat through the silicon layer and in localized hot spots. Then, as cooling technologies are applied to the packaged stack, the chip measures temperature changes. When sandwiched in a stack, the chip will allow researchers to study how heat moves through stack layers and benchmark progress in keeping them cool.

“If you have just a single chip, you can cool it from above or below. But if you start stacking several chips on top of each other, the heat has nowhere to escape. No cooling methods exist today that allow industry to stack multiples of these really high-performance chips,” says Chenson Chen, who led the development of the chip with Ryan Keech, both of the laboratory’s Advanced Materials and Microsystems Group.
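
Chen's point that "the heat has nowhere to escape" can be illustrated with a deliberately simple one-dimensional thermal-resistance model. This is a hypothetical sketch, not Lincoln Laboratory's test method. It assumes the stack is cooled only from the top, ignores lateral heat spreading, and uses made-up power and resistance values. The `stack_temperatures` helper is illustrative only.

```python
def stack_temperatures(powers_w: list[float], resistances_k_per_w: list[float],
                       t_sink_c: float) -> list[float]:
    """Steady-state temperature of each chip in a 1D stack cooled only from the top.

    Index 0 is the chip next to the heat sink. resistances_k_per_w[i] is the thermal
    resistance between chip i and the layer above it (or the sink, for i == 0).
    """
    if len(powers_w) != len(resistances_k_per_w):
        raise ValueError("need one thermal resistance per chip")
    temps = []
    t = t_sink_c
    for i in range(len(powers_w)):
        # All heat generated in chip i and in every chip below it must cross this interface.
        heat_through_interface = sum(powers_w[i:])
        t += resistances_k_per_w[i] * heat_through_interface
        temps.append(t)
    return temps


if __name__ == "__main__":
    # Hypothetical numbers: 100 W per chip, 0.1 K/W per interface, 40 °C heat sink.
    print("single chip:", stack_temperatures([100.0], [0.1], 40.0))
    print("three-chip stack:", stack_temperatures([100.0] * 3, [0.1] * 3, 40.0))
```

In this toy model a lone chip sits about 10 °C above the sink. In a three-chip stack, the bottom chip sits about 60 °C above it, because the upper interfaces carry the heat of every chip beneath them. A test chip that measures temperature inside a real stack replaces these guesses with data.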


Ray Kurzweil, one of the world’s leading futurists, has made hundreds of predictions about technology’s future. From portable devices and wireless internet to brain-computer interfaces and nanobots in our bloodstream, Kurzweil has envisioned a future that sometimes feels like science fiction—but much of it is becoming reality.

In this video, we explore 7 of Ray Kurzweil’s boldest predictions:

00:00 — 01:44 Intro.

01:44 — 02:42 Prediction 1: Portable Devices and Wireless Internet.

02:42 — 03:34 Prediction 2: Self-Driving Cars by Early 2020s.

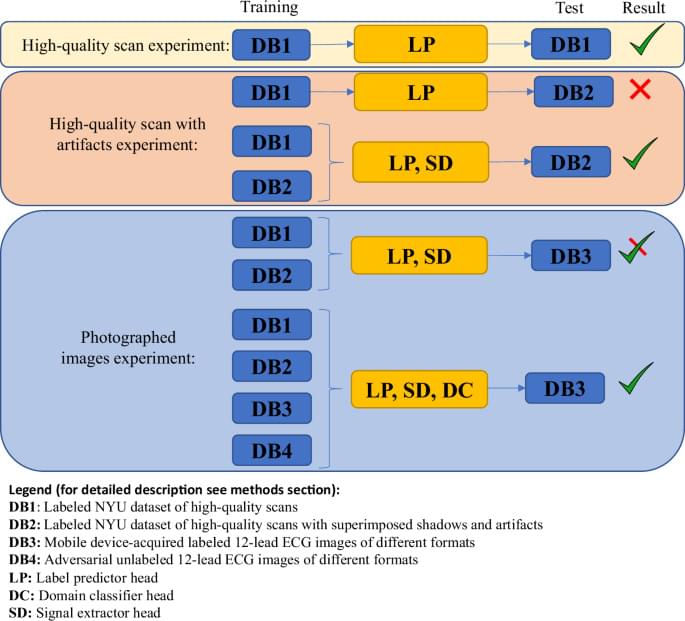

Gliner, V., Levy, I., Tsutsui, K. et al. Clinically meaningful interpretability of an AI model for ECG classification. npj Digit. Med. 8, 109 (2025). https://doi.org/10.1038/s41746-025-01467-8