Criegee intermediates (CIs) are highly reactive species formed when ozone reacts with alkenes in the atmosphere. They play a crucial role in generating hydroxyl radicals (the atmosphere's "cleansing agents") and aerosols that affect climate and air quality. Among these intermediates, syn-CH3CHOO is particularly important, accounting for 25%–79% of all CIs depending on the season.

Until now, scientists believed that syn-CH3CHOO was removed mainly through unimolecular decomposition. However, in a study published in Nature Chemistry, a team led by Profs. Yang Xueming, Zhang Donghui, Dong Wenrui and Fu Bina from the Dalian Institute of Chemical Physics (DICP) of the Chinese Academy of Sciences found that the reaction of syn-CH3CHOO with atmospheric water vapor is approximately 100 times faster than theoretical models had predicted, making it a surprisingly significant removal pathway.

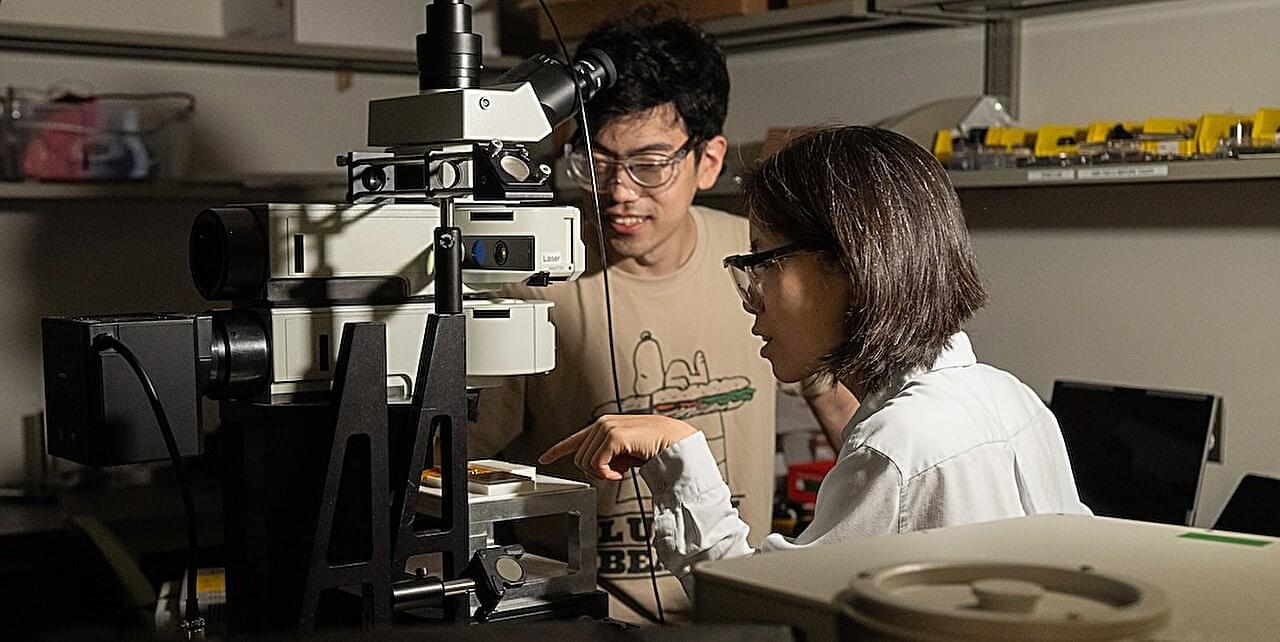

Using advanced laser techniques, the researchers measured the rate of the reaction between syn-CH3CHOO and water vapor and found it to be far faster than predicted. To uncover the reason for this acceleration, they constructed a high-accuracy, full-dimensional (27D) potential energy surface using the fundamental invariant-neural network (FI-NN) approach and performed full-dimensional dynamics calculations.