Millennials grew up under the technological halo of Moore’s law, enjoying the exponential growth in computing power that ushered in the information age. It should come as no surprise that transhumanism has earned a degree of mainstream acceptance, from Hollywood movies to magazine covers and the latest sci-fi TV. Transhumanist beliefs will continue to permeate culture as long as technological progress holds up its end of the bargain.

For transhumanist faiths, technology becomes a way of cashing checks religion helped write.

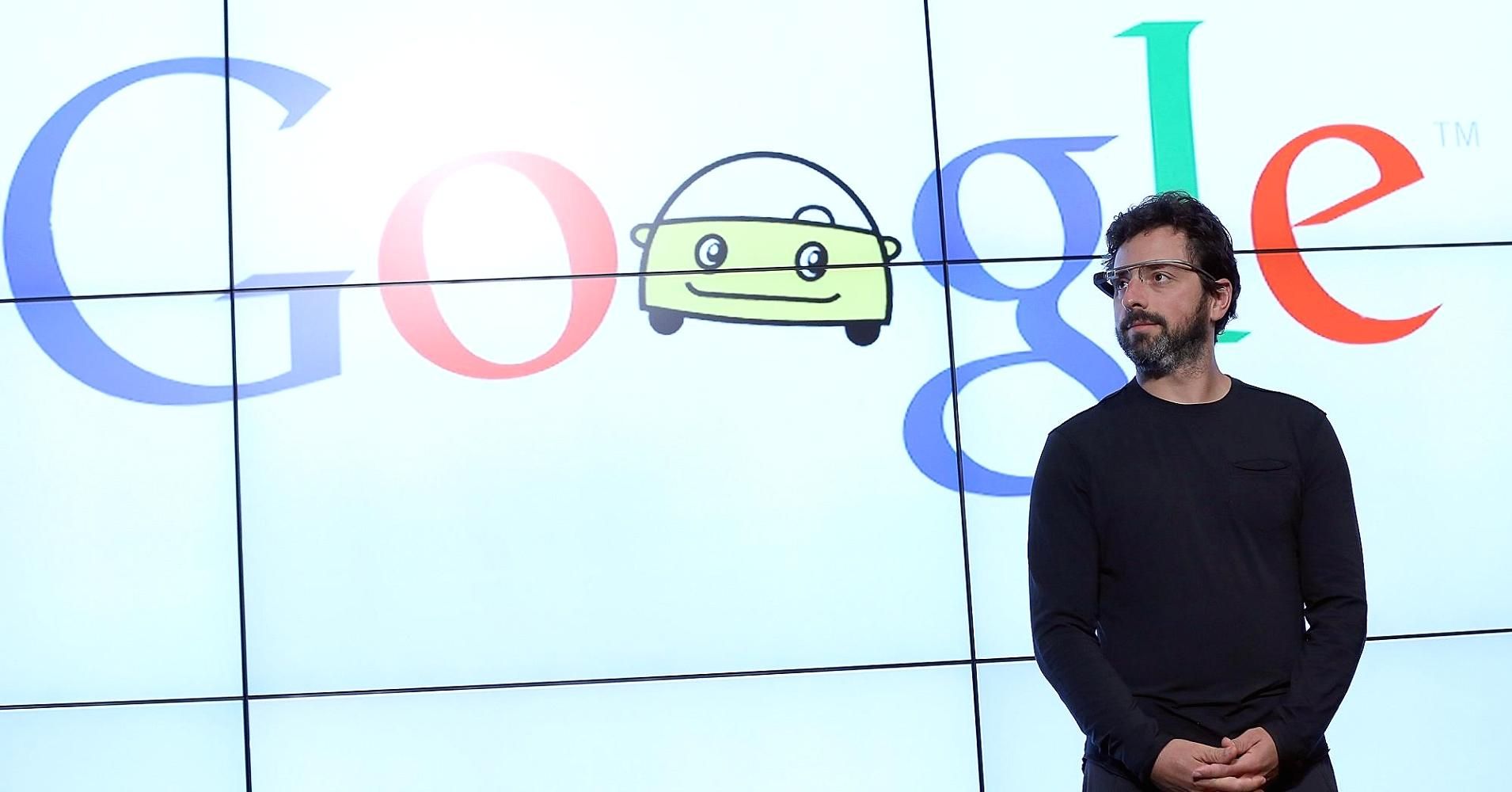

For instance, Silicon Valley engineer Anthony Levandowski—whom you may know from the Uber-Waymo lawsuit over self-driving car technology—recently launched the Way of the Future Church, a new religious organization based on developing godlike artificial intelligence. On its website, the Way of the Future states, “We believe the creation of ‘super intelligence’ is inevitable,” and according to IRS documents detailed by Wired, this new religion seeks “the realization, acceptance, and worship of a Godhead based on Artificial Intelligence (AI) developed through computer hardware and software.” This exuberance departs from the cautious stance toward A.I. taken by Hawking, Musk, and others who warn that artificial superintelligence could pose an existential threat. However, regardless of whether artificial superintelligence is seen as an angel or a demon, Hawking, Musk, and A.I. evangelists alike share the common belief that this technology should be taken seriously.