https://facebook.com/LongevityFB https://instagram.com/longevityyy/ https://twitter.com/Longevityyyyy https://linkedin.com/company/longevityy/

- Please also subscribe, hit the notification bell, and click “all” on these YouTube channels:

https://youtube.com/channel/UCAvRKtQNLKkAX0pOKUTOuzw

https://youtube.com/user/BrentAltonNally

https://youtube.com/user/EternalLifeFan

https://youtube.com/user/MaxSam16

https://youtube.com/user/LifespanIO/videos

SHOW NOTES WITH TIME STAMPS:

:00 CHANNEL TRAILER

:22 Gene The Chromosome intro

:14 José interview begins. Follow José Cordeiro on social media: https://facebook.com/josecordeiro2045 https://linkedin.com/in/josecordeiro/ https://twitter.com/cordeiro https://instagram.com/josecordeiro2019/ https://youtube.com/channel/UCnf2guj8tjfigS3w2UV51Qg

:55 RAADFest https://raadfest.com/ Watch the 2019 RAADFest Roundup https://youtube.com/playlist?list=PLGjySL94COVSO3hcnpZq-jCcgnUQIaALQ

:14 BUY LA MUERTE DE LA MUERTE (THE DEATH OF DEATH)

:20 Transhumanists’ 3 core beliefs

:22 Law of Accelerating Returns

:45 José believes we will cure human aging in the next 2–3 decades

:31 quantum computers

:33 Ray Kurzweil

:02 Longevity Escape Velocity

:56 The Singularity is Nearer

:46 the world is improving overall thanks to science and technology

:35 overpopulation fallacy

:14 Idiocracy

:53 Zero to One

:10 human aging and death is the biggest problem for humanity

:45 José plans to be biologically younger than 30 by 2040–2045

:02 How to convince religious people to believe in science and biorejuvenation

:44 everything is “impossible” until it becomes possible

:44 Artificial General Intelligence (AGI)

:20 José is not afraid of Artificial Intelligence. José is afraid of human stupidity.

:00:20 Brent Nally & Vladimir Trufanov are co-founders of https://levscience.com Watch to learn more https://youtube.com/watch?v=iSGJs4_Qkd8&t=1266s

:01:15 Watch Brent’s interviews with Dr. Alex Zhavoronkov https://youtube.com/watch?v=w5csqq8RAqY & https://youtube.com/watch?v=G5IiEuXHvk8

:02:40 José shares what he believes causes human aging and the best treatments for aging

:09:04 Watch Brent’s interviews with Dr. Aubrey de Grey https://youtube.com/watch?v=TquJyz7tGfk&t=226s & https://youtube.com/watch?v=RWRa6kVKv8o

:11:30 Dr. David Sinclair

:12:01 non-aging-related risks of human death

:13:08 Watch Transhumania cryonics video https://youtube.com/watch?v=8arbOJpDTMw

:22:30 We wish everyone incredible health and a long life!

:23:26 first ~3 minutes of Idiocracy https://youtube.com/watch?v=YwZ0ZUy7P3E

:25:55 Gennady Stolyarov II

:30:10 THE LIFE OF LIFE

:32:05 there are many biologically immortal species

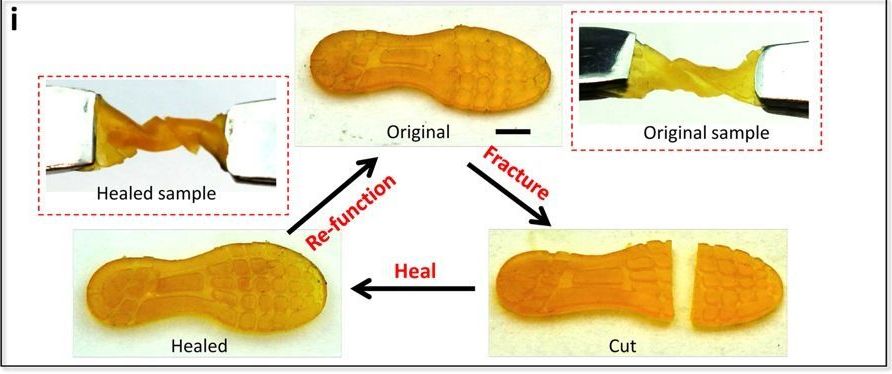

:33:02 telomerase gene therapy

:37:38 Viva la Revolución!