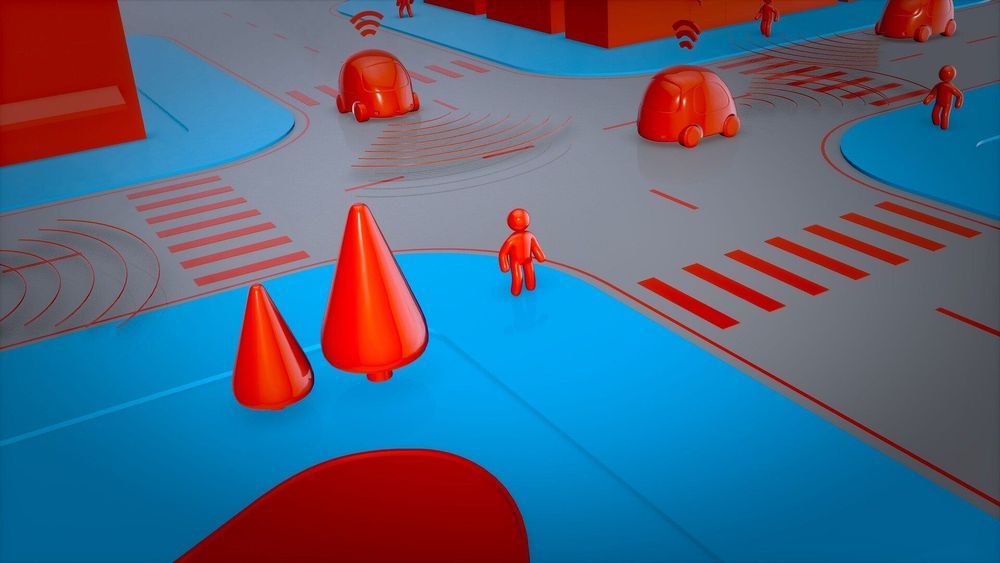

Lidar can be the third eye of your future automated car and an essential component for safe driving. That is the word from Bosch. The company wants the world to know that two is not ideal company; three is better. Cameras and radar alone don’t cut it.

CES is just around the corner, and Bosch wants to make some noise there about its new lidar system, which will make its debut at the event. Bosch describes it as a long-range lidar sensor suitable for automotive use.

The company is posing a question with only one sensible answer: do you want safety, or do you want the highest level of safety? Bosch wants you to know two things. First, the sensor works in both highway and city driving; as the company release puts it, “Bosch sensor will cover both long and close ranges—on highways and in the city.” Second, it will work in concert with cameras and radar.