MIT engineers have designed a “brain-on-a-chip,” smaller than a piece of confetti, that is made from tens of thousands of artificial brain synapses known as memristors—silicon-based components that mimic the information-transmitting synapses in the human brain.

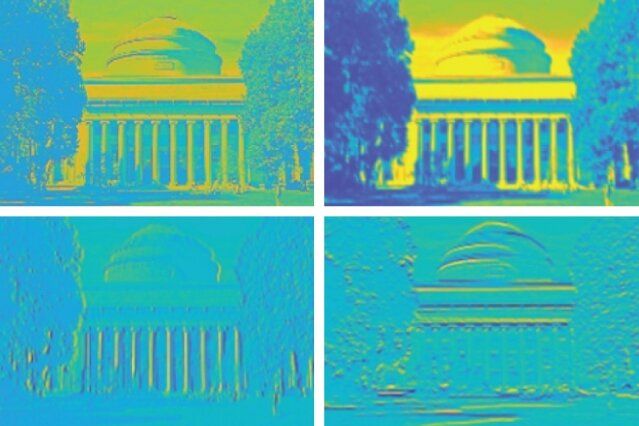

The researchers borrowed principles from metallurgy to fabricate each memristor from alloys of silver and copper, along with silicon. When they ran the chip through several visual tasks, it was able to “remember” stored images and reproduce them many times over, in versions that were crisper and cleaner than those produced by existing memristor designs made with unalloyed elements.

Their results, published today in the journal Nature Nanotechnology, demonstrate a promising new memristor design for neuromorphic devices, electronics based on a new type of circuit that processes information in a way that mimics the brain’s neural architecture. Such brain-inspired circuits could be built into small, portable devices and could carry out complex computational tasks that today only supercomputers can handle.