Researchers tested a four-legged robot on sand, oil, rocks and other tricky surfaces.

Category: robotics/AI

DARPA FENCE seeks to develop smart camera tech that mimics human brain

The US Defense Advanced Research Projects Agency (DARPA) has selected three teams of researchers, led by Raytheon, BAE Systems, and Northrop Grumman, to develop event-based infrared (IR) camera technologies under the Fast Event-based Neuromorphic Camera and Electronics (FENCE) program. The program is designed to make computer vision cameras more efficient by mimicking how the human brain processes information. FENCE aims to develop a new class of low-latency, low-power, event-based infrared focal plane arrays (FPAs), along with digital signal processing (DSP) and machine learning (ML) algorithms. These neuromorphic camera technologies will enable intelligent sensors that can handle more dynamic scenes and support future military applications.
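To make "event-based" concrete, here is a minimal sketch of the general idea, not DARPA's or any FENCE team's design. It assumes a simplified model in which a pixel reports an event only when its log-intensity changes by more than a threshold between two frames. Real event sensors work asynchronously per pixel rather than by comparing whole frames, and the function name `frame_to_events` and the `threshold` value are hypothetical choices for illustration.

```python
import math
from typing import List, Tuple

# An event is (x, y, polarity): +1 if the pixel got brighter, -1 if darker.
Event = Tuple[int, int, int]


def frame_to_events(prev: List[List[float]], curr: List[List[float]],
                    threshold: float = 0.2) -> List[Event]:
    """Emit an event for each pixel whose log-intensity changed by more than threshold.

    Simplified, frame-differencing model of an event sensor (an assumption for
    illustration); unchanged pixels produce no output at all.
    """
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # Log intensity models the contrast sensitivity of event pixels;
            # the small epsilon avoids log(0) on completely dark pixels.
            delta = math.log(c + 1e-6) - math.log(p + 1e-6)
            if delta > threshold:
                events.append((x, y, +1))
            elif delta < -threshold:
                events.append((x, y, -1))
    return events


if __name__ == "__main__":
    prev = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
    curr = [[1.0, 1.0, 1.0], [1.0, 2.0, 1.0], [1.0, 1.0, 0.5]]
    print(frame_to_events(prev, curr))  # [(1, 1, 1), (2, 2, -1)]
```

In this toy 3×3 scene only two pixels change, so only two events are produced and the seven static pixels generate nothing. Skipping unchanged pixels is the intuition behind the low-power, low-latency goal described above.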

New intelligent event-based (or neuromorphic) cameras can handle more dynamic scenes.

Meet PetBot, a DIY robot that turns plastic bottles into filaments

PetBot, a new DIY robot, is designed to help recycle ordinary plastic bottles. The process is not yet fully automated, and the device is not intended for commercial use, but the benefits are obvious. Built by JRT3D, PetBot automates the plastic recycling process by cutting PET bottles into tape and then turning that tape into filament. The robot combines several mechanisms, each performing its own part of the task, and it carries out both processes simultaneously using a single stepper motor.

The machine automates the plastic recycling process by cutting PET bottles and turning them into filament.

This TikTok Lawsuit Is Highlighting How AI Is Screwing Over Voice Actors

AI taking over voice acting is just the beginning. Why can't AI render and generate the game world all by itself? Why can't it generate the models and actors for the game from scratch, use deepfake-style animation to animate the characters, and then write an original script for the story? These things are all possible now. It's only a matter of time before someone puts it all together.

Voice actors are rallying behind Bev Standing, who is alleging that TikTok acquired and replicated her voice using AI without her knowledge.

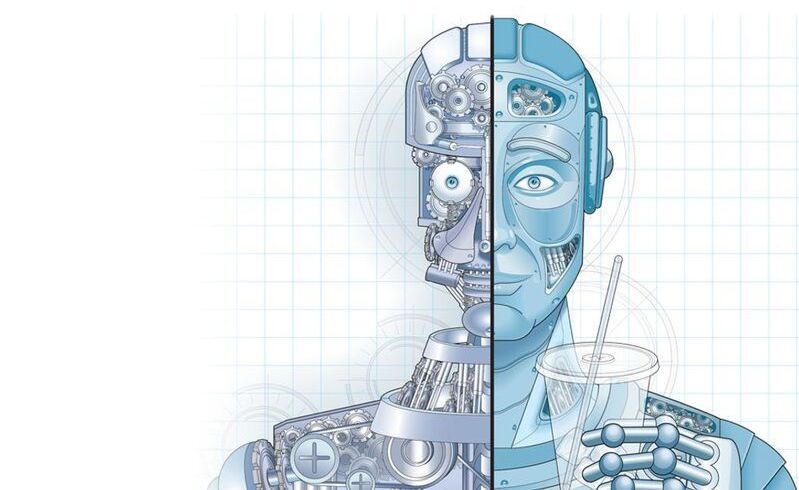

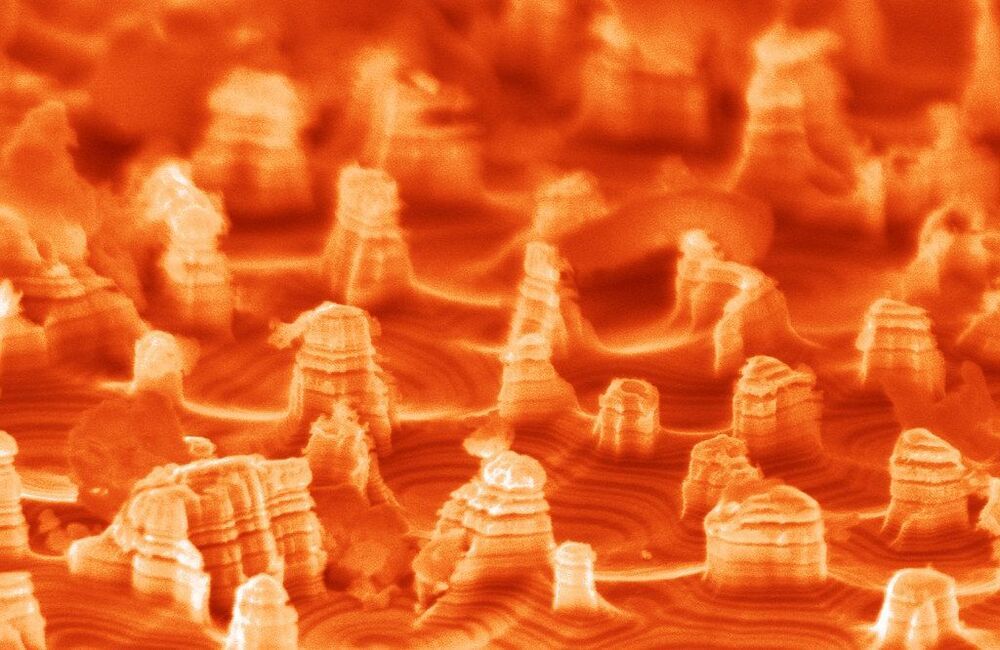

Nanorobotics: what it is, what it can do, and how it can become reality

They're tiny machines that work on the nanoscale, up to 100,000 times smaller than the width of a human hair. These machines, known as nanorobots, are set to augment the human race in unforeseen ways.
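As a quick sanity check on the "100,000 times smaller" figure, the arithmetic below assumes a human hair is roughly 80,000 to 100,000 nm (80 to 100 micrometres) wide. That width is an assumed typical value, not a number from the article, and the helper `scale_in_nm` is hypothetical.

```python
# Assumption: a typical human hair is about 100,000 nm (100 micrometres) wide.
HAIR_WIDTH_NM = 100_000


def scale_in_nm(times_smaller: float, reference_nm: float = HAIR_WIDTH_NM) -> float:
    """Return the size in nanometres of something `times_smaller` than the reference."""
    return reference_nm / times_smaller


print(scale_in_nm(100_000))  # 1.0
```

Under that assumption, "100,000 times smaller" works out to about 1 nm, the lower end of the commonly cited 1 to 100 nm nanoscale range.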

However, this microscopic technology has remained in the prototype phase for the past two decades and has yet to live up to its promise, held back by difficult manufacturing processes, a lack of standardization, and few reviews of the available literature.

Picture a scenario where you’re ill and need to see your doctor. However, instead of giving you a pill or a shot, your doctor injects you with a swarm of tiny robots.

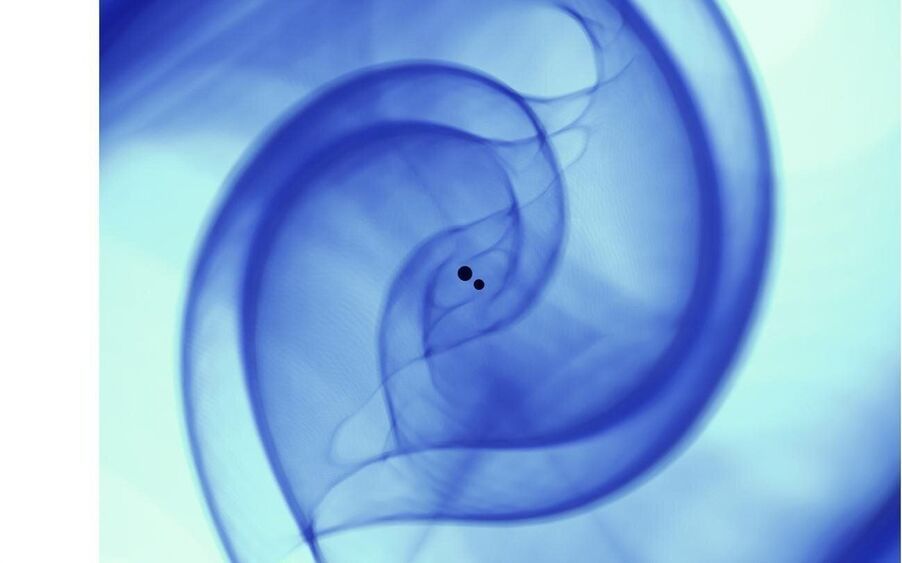

Scientists use artificial intelligence to detect gravitational waves

When gravitational waves were first detected in 2015 by the Advanced Laser Interferometer Gravitational-Wave Observatory (LIGO), they sent a ripple through the scientific community: they confirmed another prediction of Einstein's general theory of relativity and marked the birth of gravitational wave astronomy. Five years later, numerous gravitational wave sources have been detected, including the first observation of two colliding neutron stars in both gravitational and electromagnetic waves.

As LIGO and its international partners continue to upgrade their detectors' sensitivity to gravitational waves, they will be able to probe a larger volume of the universe, making the detection of gravitational wave sources a daily occurrence. This discovery deluge will usher in an era of precision astronomy that takes into account extrasolar messenger phenomena, including electromagnetic radiation, gravitational waves, neutrinos and cosmic rays. Realizing this goal, however, will require a radical rethinking of existing methods used to search for and find gravitational waves.

Recently, computational scientist and lead for translational artificial intelligence (AI) Eliu Huerta of the U.S. Department of Energy’s (DOE) Argonne National Laboratory, in conjunction with collaborators from Argonne, the University of Chicago, the University of Illinois at Urbana-Champaign, NVIDIA and IBM, has developed a new production-scale AI framework that allows for accelerated, scalable and reproducible detection of gravitational waves.