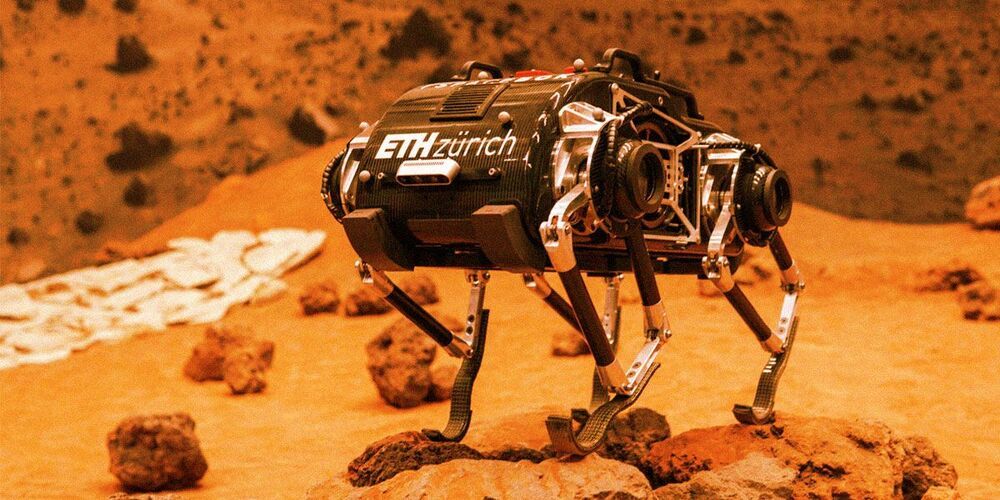

I think there is actually a company that makes something similar to this.

The self-balancing bike is a reminder of the incredibly creative projects that students and recent engineering graduates can come up with. Another recent example is an all-electric monowheel built by a group of Duke University students.

In principle, Zhi Jui Jun’s self-balancing bike should also work with someone riding it, though no one is shown riding it in Jui Jun’s video. Watching the bicycle steer and keep its balance with the added top-heavy weight of a person would be a sight to behold. Stay tuned for updates on any “piloted” tests in the future.