Empathy, the ability to understand what others are feeling and emotionally connect with their experiences, can be highly advantageous for humans, as it allows them to strengthen relationships and thrive in some professional settings. Developing tools that reliably measure people’s empathy has thus been a key objective of many past psychology studies.

Most existing methods for measuring empathy rely on self-reports and questionnaires, such as the Interpersonal Reactivity Index (IRI), the Empathy Quotient (EQ) test and the Toronto Empathy Questionnaire (TEQ). Over the past few years, however, some scientists have been trying to develop alternative techniques for measuring empathy, several of which rely on machine learning algorithms or other computational models.

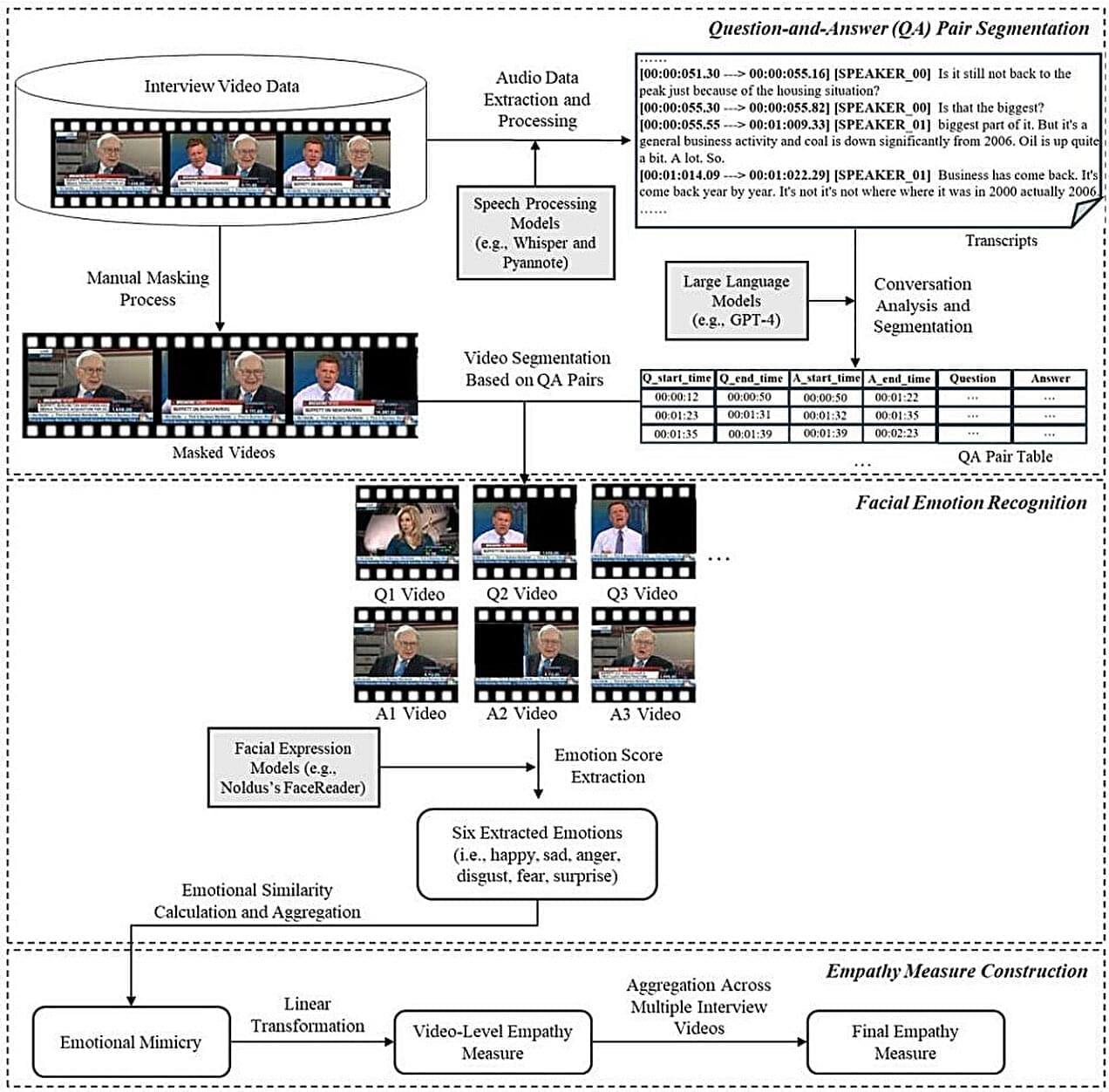

Researchers at Hong Kong Polytechnic University have recently introduced a new machine learning-based video analytics framework that could be used to predict the empathy of people captured in video footage. Their framework, described in a preprint paper posted on SSRN, could prove to be a valuable tool for conducting organizational psychology research, as well as other empathy-related studies.