Leipzig University just got the world’s largest brain-inspired supercomputer. Could this be the future of drug discovery?

Microsoft has introduced Copilot Mode, an experimental feature designed to transform Microsoft Edge into a web browser powered by artificial intelligence (AI).

As the company explained on Monday, this new mode transforms Edge’s interface, with new tabs showing a single input box that combines chat, search, and web navigation functions.

Once Copilot Mode is enabled, the AI assistant will be able to analyze all open browser tabs with the user’s permission, comparing information and assisting with various tasks, such as researching vacation rentals.

Apple Inc. has lost its fourth AI researcher in a month to Meta Platforms Inc., marking the latest setback to the iPhone maker’s artificial intelligence efforts.

Bowen Zhang, a key multimodal AI researcher at Apple, left the company on Friday and is set to join Meta’s recently formed superintelligence team, according to people familiar with the matter. Zhang was part of the Apple foundation models group, or AFM, which built the core technology behind the company’s AI platform.

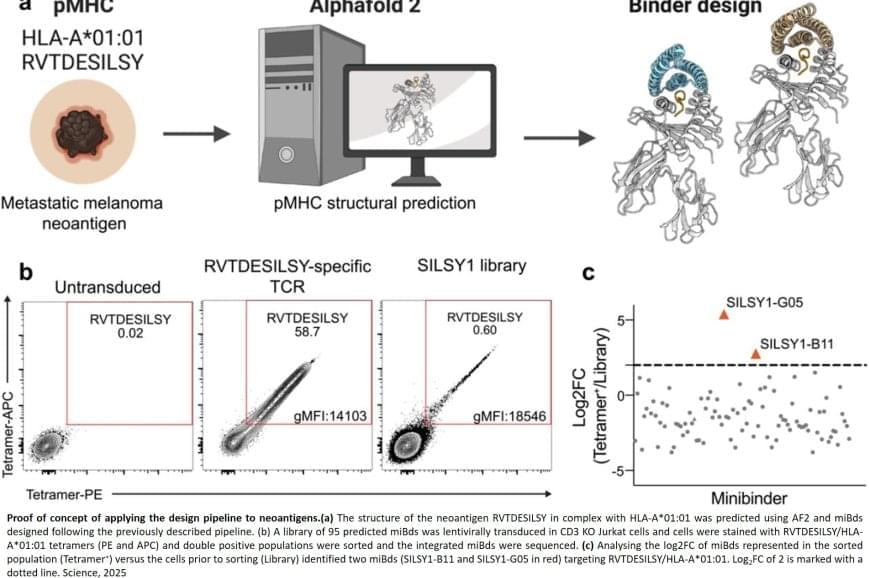

T cells naturally identify cancer cells by recognizing specific protein fragments, known as peptides, presented on the cell surface by molecules called pMHCs. Using this knowledge for therapy is slow and difficult, often because the variation in the body’s own T-cell receptors makes it hard to create a personalized treatment.

In the study, the researchers tested the strength of the AI platform on a well-known cancer target, NY-ESO-1, which is found in a wide range of cancers. The team succeeded in designing a minibinder that bound tightly to the NY-ESO-1 pMHC molecules. Inserting the designed protein into T cells created a new cell product, which the researchers named ‘IMPAC-T’ cells, and effectively guided the T cells to kill cancer cells in laboratory experiments.

“It was incredibly exciting to take these minibinders, which were created entirely on a computer, and see them work so effectively in the laboratory,” says a co-author of the study.

The researchers also applied the pipeline to design binders for a cancer target identified in a metastatic melanoma patient, successfully generating binders for this target as well. This showed that the method can also be used for tailored immunotherapy against novel cancer targets.

A crucial step in the researchers’ innovation was the development of a ‘virtual safety check’. The team used AI to screen their designed minibinders and assess them in relation to pMHC molecules found on healthy cells. This method enabled them to filter out minibinders that could cause dangerous side effects before any experiments were carried out.

Precision cancer treatment on a larger scale is moving closer now that researchers have developed an AI platform that can tailor protein components and arm the patient’s immune cells to fight cancer. The new method, published in the scientific journal Science, demonstrates for the first time that it is possible to design proteins on a computer that redirect immune cells to target cancer cells through pMHC molecules.