Two teams have shown how quantum approaches can solve problems faster than classical computers, bringing physics and computer science closer together.

Quantum computing is still rare enough that merely installing a system in a country is a breakthrough, and IBM is taking advantage of that novelty. The company has forged a partnership with the Canadian province of Quebec to install what it says is Canada’s first universal quantum computer. The five-year deal will see IBM install a Quantum System One as part of a Quebec-IBM Discovery Accelerator project tackling scientific and commercial challenges.

The team-up will see IBM and the Quebec government foster microelectronics work, including progress in chip packaging thanks to an existing IBM facility in the province. The two also plan to show how quantum and classical computers can work together to address scientific challenges, and expect quantum-powered AI to help discover new medicines and materials.

IBM didn’t say exactly when it would install the quantum computer. However, it will be only the fifth Quantum System One installation planned by 2023, following similar partnerships in Germany, Japan, South Korea and the US. Canada is joining a relatively exclusive club, then.

Speech is more than just a form of communication. A person’s voice conveys emotions and personality and is a unique trait we can recognize. Our use of speech as a primary means of communication is a key reason for the development of voice assistants in smart devices and technology. Typically, virtual assistants analyze speech and respond to queries by converting the received speech signals into a model they can understand and process to generate a valid response. However, they often have difficulty capturing and incorporating the complexities of human speech and end up sounding very unnatural.

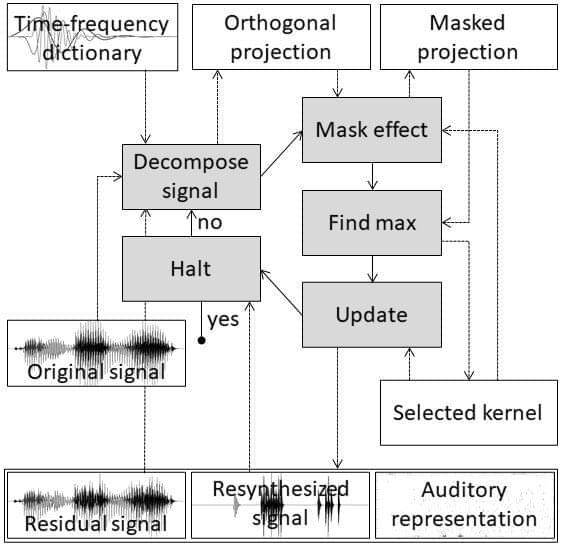

Now, in a study published in the journal IEEE Access, Professor Masashi Unoki of the Japan Advanced Institute of Science and Technology (JAIST) and Dung Kim Tran, a doctoral student at JAIST, have developed a system that captures the information in speech signals in a way similar to how humans perceive speech.

“In humans, the auditory periphery converts the information contained in input speech signals into neural activity patterns (NAPs) that the brain can identify. To emulate this function, we used a matching pursuit algorithm to obtain sparse representations of speech signals, or signal representations with the minimum possible significant coefficients,” explains Prof. Unoki. “We then used psychoacoustic principles, such as the equivalent rectangular bandwidth scale, gammachirp function, and masking effects to ensure that the auditory sparse representations are similar to that of the NAPs.”
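The matching pursuit step Prof. Unoki mentions can be sketched in a few lines of Python. This is a generic greedy matching pursuit, not the study's implementation. It assumes a toy dictionary of unit-norm atoms (the four standard basis vectors plus one flat atom), chosen purely for illustration. The study's auditory dictionary and its psychoacoustic refinements (ERB scale, gammachirp function, masking) are not reproduced here. The helper names `dot`, `normalize` and `matching_pursuit` are hypothetical.

```python
import math


def dot(x, y):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, y))


def normalize(v):
    """Scale a vector to unit Euclidean norm (matching pursuit assumes unit-norm atoms)."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def matching_pursuit(signal, dictionary, n_atoms, tol=1e-9):
    """Greedy matching pursuit (hypothetical helper, not the study's code).

    At each step, pick the unit-norm atom most correlated with the current
    residual, record its coefficient, and subtract its contribution.
    Returns (coefficients keyed by atom index, final residual).
    """
    residual = list(signal)
    coeffs = {}
    for _ in range(n_atoms):
        # Correlate the residual with every atom; keep the largest |<r, d_k>|.
        best_k, best_c = max(
            ((k, dot(residual, atom)) for k, atom in enumerate(dictionary)),
            key=lambda kc: abs(kc[1]),
        )
        if abs(best_c) < tol:  # residual is (numerically) orthogonal to every atom
            break
        coeffs[best_k] = coeffs.get(best_k, 0.0) + best_c
        atom = dictionary[best_k]
        residual = [r - best_c * a for r, a in zip(residual, atom)]
    return coeffs, residual


# Toy dictionary (an assumption): four standard basis vectors plus one flat atom.
N = 4
dictionary = [[1.0 if i == k else 0.0 for i in range(N)] for k in range(N)]
dictionary.append(normalize([1.0] * N))

coeffs, residual = matching_pursuit([3.0, 3.0, 3.0, 3.0], dictionary, n_atoms=3)
print(coeffs)    # {4: 6.0}
print(residual)  # [0.0, 0.0, 0.0, 0.0]
```

Here a single atom explains the whole flat signal, so the representation has one nonzero coefficient instead of four. That is the sense in which the representation is "sparse": as few significant coefficients as possible for the given dictionary.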

When the human brain learns something new, it adapts. But when artificial intelligence learns something new, it tends to forget information it already learned.

As companies use more and more data to improve how AI recognizes images, learns languages and carries out other complex tasks, a paper publishing in Science this week shows a way that computer chips could dynamically rewire themselves to take in new data like the brain does, helping AI to keep learning over time.

“The brains of living beings can continuously learn throughout their lifespan. We have now created an artificial platform for machines to learn throughout their lifespan,” said Shriram Ramanathan, a professor in Purdue University’s School of Materials Engineering who specializes in discovering how materials could mimic the brain to improve computing.

On this land taken from Indigenous Peoples, a new nation was eventually born, largely built by those whose ancestries traced back to the Old World via immigration and slavery.

As the country grew, inventions like the telephone, airplane, and Internet helped usher in today’s interconnected world. But the inexorable march of technological progress has come at great cost to the health of the planet, particularly because of global dependence on fossil fuels. The United Nations declared in 2017 that a Decade of Ocean Science for Sustainable Development would be held from 2021 to 2030. This Ocean Decade calls for a worldwide effort to reverse the oceans’ degradation.

The dawn of this decade, 2020, also marked the 400th anniversary of the Mayflower’s journey. Plymouth 400, a cultural nonprofit, has been working for more than a decade to commemorate the anniversary in ways that honor all aspects of this history, said spokesperson Brian Logan. Events began in 2020, but one of the most innovative launches is still waiting in the wings—a newfangled nautical craft, the Mayflower Autonomous Ship, or MAS.