Russian scientists have proposed a new algorithm for automatically decoding brain activity and interpreting the resulting decoder weights. The algorithm can be used both in brain-computer interfaces and in fundamental research. The results of the study were published in the Journal of Neural Engineering.

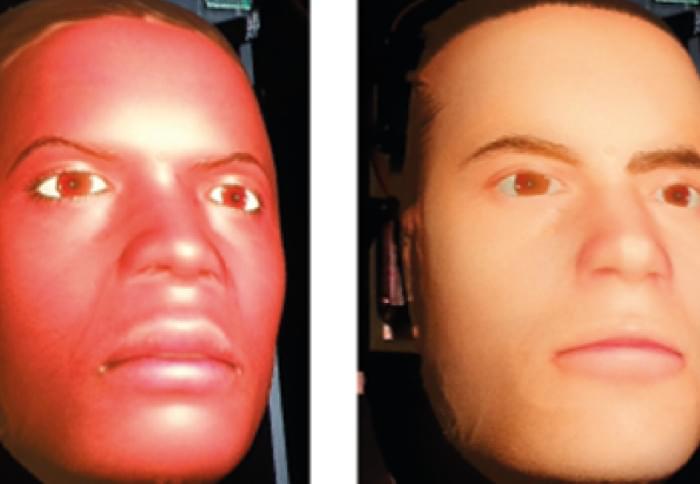

Brain-computer interfaces are needed to create robotic prostheses, neuroimplants, rehabilitation simulators, and devices that can be controlled by thought alone. These devices help people who have suffered a stroke or a physical injury to move (with a robotic wheelchair or prostheses), communicate, use a computer, and operate household appliances. In addition, when combined with machine learning methods, neural interfaces help researchers understand how the human brain works.

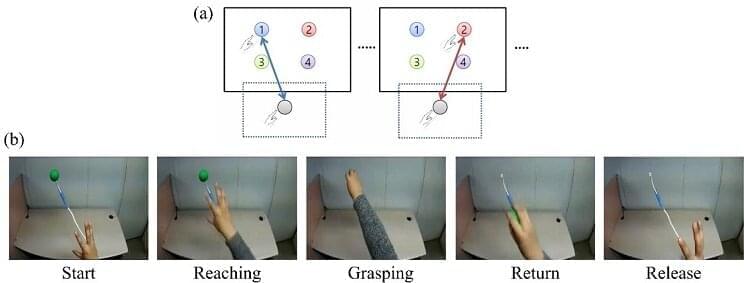

Most brain-computer interfaces rely on the electrical activity of neurons, measured, for example, with electro- or magnetoencephalography. However, a decoder is needed to translate neuronal signals into commands. Traditional signal-processing methods require painstaking work to identify informative features: signal characteristics that, from a researcher's point of view, appear to be most important for the decoding task.
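To illustrate what "hand-crafted features" means in practice, here is a minimal sketch of a traditional pipeline. It is a generic textbook example, not the authors' algorithm. The researcher decides in advance that power in the 8–12 Hz band is informative, computes that log band power for each channel, and fits a simple least-squares linear decoder on top. The function names, the choice of band, and the synthetic data are all illustrative assumptions.

```python
import numpy as np


def band_power_features(epochs, fs, band):
    """Hand-crafted feature: log power in a chosen frequency band, per channel.

    epochs: array of shape (n_trials, n_channels, n_samples)
    fs: sampling rate in Hz
    band: (low, high) in Hz, picked by the researcher (an assumption here)
    """
    n_samples = epochs.shape[-1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    spectrum = np.abs(np.fft.rfft(epochs, axis=-1)) ** 2
    mask = (freqs >= band[0]) & (freqs <= band[1])
    # Average the power over the selected bins; result is (n_trials, n_channels)
    return np.log(spectrum[..., mask].mean(axis=-1))


def fit_linear_decoder(X, y):
    """Least-squares linear decoder y ~ X @ w + b, with labels in {-1, +1}."""
    X1 = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef[:-1], coef[-1]


def predict(X, w, b):
    """Map decoder output to a class label (the 'command')."""
    return np.sign(X @ w + b)


# Synthetic demo (hypothetical data): class +1 carries an extra
# 10 Hz rhythm on channel 0; all channels carry white noise.
rng = np.random.default_rng(0)
fs, n_trials, n_channels, n_samples = 250, 200, 4, 500
t = np.arange(n_samples) / fs
y = np.where(np.arange(n_trials) % 2 == 0, 1.0, -1.0)
epochs = rng.standard_normal((n_trials, n_channels, n_samples))
epochs[y > 0, 0, :] += 2.0 * np.sin(2 * np.pi * 10 * t)

X = band_power_features(epochs, fs, band=(8, 12))
w, b = fit_linear_decoder(X, y)
accuracy = np.mean(predict(X, w, b) == y)
print(f"training accuracy: {accuracy:.2f}")
print("decoder weights per channel:", np.round(w, 3))
```

The decoder works here only because the chosen band happens to match the signal; with a wrong band the features carry no information. That dependence on prior expert choices is the manual feature-engineering step described above.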