Category: robotics/AI – Page 1,717

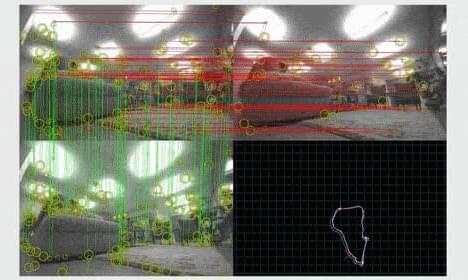

Understand what’s happening on your construction sites with effective reality capture data

See the robotic solution in action with Spot and Trimble Buildings!

Amazon’s Jianbo Ye and Arnie Sen explain that, to adapt to constant changes in customers’ homes, Astro uses a deep-learning model to produce invariant representations of visual data, estimates sensor reliability to guide the fusion of sensor data, and prunes and compresses map representations: https://amzn.to/36qQUZo

Quantifying Human Consciousness With the Help of AI

Summary: A new deep learning algorithm is able to quantify arousal and awareness in humans at the same time.

Source: CORDIS

New research supported by the EU-funded HBP SGA3 and DoCMA projects is giving scientists new insight into human consciousness.

Led by Korea University and project partner the University of Liège (Belgium), the research team has developed an explainable consciousness indicator (ECI) to explore different components of consciousness.

Spot robot dog can smell airborne gas or chemical hazards in real time

Teledyne FLIR Defense has announced a partnership with MFE Inspection Solutions to integrate the FLIR MUVE C360 multi-gas detector on Boston Dynamics’ Spot robot and commercial unmanned aerial systems (UAS). The integrated solutions will enable remote monitoring of chemical threats in industrial and public safety applications.

The compact multi-gas detector can detect and classify airborne gas or chemical hazards, allowing inspection personnel to perform their job more safely and efficiently with integrated remote sensing capabilities from both the air and ground.

The MUVE C360 is designed to operate on Boston Dynamics’ Spot mobile robot, which can autonomously inspect dangerous, inaccessible, or remote environments. It is also compatible with common commercial UAS, which allow operators to fly the C360 into a scene to perform hazard assessments in real time.