China is building a new hydroelectric dam using AI and 3D printing. No human workers will be involved, according to a new study.

Papers referenced in the video:

A Physiology Clock for Human Aging (preprint)

https://www.biorxiv.org/content/10.1101/2022.04.14.488358v1

Predicting Age by Mining Electronic Medical Records with Deep Learning Characterizes Differences between Chronological and Physiological Age

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5716867/

Spirometry Reference Equations for Central European Populations from School Age to Old Age

https://pubmed.ncbi.nlm.nih.gov/23320075/

An Integrated, Multimodal, Digital Health Solution for Chronic Obstructive Pulmonary Disease: Prospective Observational Pilot Study

https://pubmed.ncbi.nlm.nih.gov/35142291/

Ken Otwell: I thought the claim WAS fraud by Twitter? Twitter fraudulently under-reporting the bot numbers.

Mike Lorrey: Misrepresenting real user numbers is, actually, fraud, so he gets out of the billion-dollar fee.

Smart cities are slowly becoming more than just a futuristic concept, and one such location is already being built in Japan by none other than Toyota.

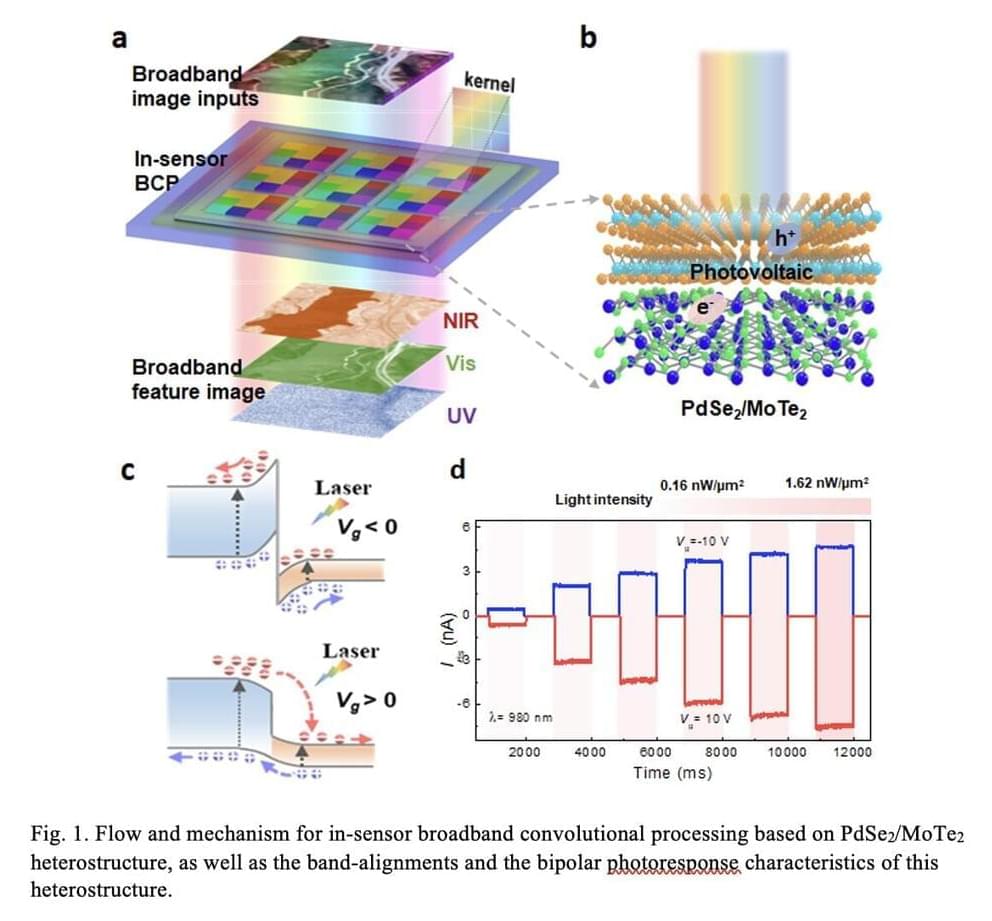

Efficiently processing broadband signals using convolutional neural networks (CNNs) could enhance the performance of machine learning tools for a wide range of real-time applications, including image recognition, remote sensing and environmental monitoring. However, past studies suggest that performing broadband convolutional processing directly in sensors is challenging, particularly with conventional complementary metal-oxide-semiconductor (CMOS) technology, on which most of today's integrated circuits are built.
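For readers unfamiliar with the operation itself: in a convolutional layer, each output value is a weighted sum of a small neighborhood of input values, with the same weights (the kernel) reused across the whole input. The minimal Python sketch below illustrates that generic computation in software. It is not the researchers' in-sensor method, whose hardware mapping is not described here, and the function name `conv2d_valid` and the example kernel are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the
    weighted sums. Like most CNN libraries, this computes a cross-correlation,
    i.e. the kernel is not flipped."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    if kh > ih or kw > iw:
        raise ValueError("kernel must not be larger than the image")
    out: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Multiply each pixel in the window by its kernel weight and sum.
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    # A 4x4 "image" with a vertical edge between columns 1 and 2.
    img = [
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    # Hypothetical 2x2 vertical-edge detector kernel.
    edge = [
        [-1, 1],
        [-1, 1],
    ]
    for row in conv2d_valid(img, edge):
        print(row)  # [0.0, 2.0, 0.0] on each of the three rows
```

The kernel responds only where its window straddles the edge, which is the kind of local feature a CNN's first layers extract. Stride 1 and no padding are simplifying choices for the sketch.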

Researchers at Huazhong University of Science and Technology and Nanjing University have recently investigated the convolutional processing of broadband signals on an alternative platform: van der Waals heterostructures. Their paper, published in Nature Electronics, could ultimately inform the development of better-performing image recognition algorithms.

“Our paper was inspired by some of our previous research works,” Tianyou Zhai, Xing Zhou and Feng Miao, three of the researchers who carried out the study, told TechXplore. “In studies published in Advanced Materials and Advanced Functional Materials, we realized type-III and type-II band alignments in different heterostructures. Furthermore, we published a paper in Science Advances, where we realized a reconfigurable neural network vision sensor based on WSe2.”

Researchers have developed an algorithm that can identify users' basic needs from the text and images they share on social networks. The experts hope this tool will help psychologists diagnose possible mental health problems. The study suggests that, when feeling depressed, Spanish-speaking users are more likely than English speakers to mention relationship problems.

We spend a substantial amount of our time sharing images, videos or thoughts on social networks such as Instagram, Facebook and Twitter. Now, a group of researchers from the Universitat Oberta de Catalunya (UOC) has developed an algorithm that aims to help psychologists diagnose possible mental health problems through the content people post on these platforms.

According to William Glasser’s Choice Theory, there are five basic needs that are central to all human behavior: Survival, Power, Freedom, Belonging and Fun. These needs even have an influence on the images we choose to upload to our Instagram page. “How we present ourselves on social media can provide useful information about behaviors, personalities, perspectives, motives and needs,” explained Mohammad Mahdi Dehshibi, who led this study within the AI for Human Well-being (AIWELL) group, which belongs to the Faculty of Computer Science, Multimedia and Telecommunications at the UOC.

Modern life can be full of baffling encounters with artificial intelligence: think misunderstandings with customer service chatbots or algorithmically misplaced hair metal in your Spotify playlist. These AI systems can’t work effectively with people because they have no idea that humans can behave in seemingly irrational ways, says Mustafa Mert Çelikok. He’s a Ph.D. student studying human-AI interaction, with the goal of weighing the strengths and weaknesses of both sides and blending them into a superior decision-maker.

In the AI world, one example of such a hybrid is a “centaur.” It’s not a mythological horse–human, but a human-AI team. Centaurs appeared in chess in the late 1990s, when artificial intelligence systems became advanced enough to beat human champions. In place of a “human versus machine” matchup, centaur or cyborg chess involves one or more computer chess programs and human players on each side.

“This is the Formula 1 of chess,” says Çelikok. “Grandmasters have been defeated. Super AIs have been defeated. And grandmasters playing with powerful AIs have also lost.” As it turns out, novice players paired with AIs are the most successful. “Novices don’t have strong opinions” and can form effective decision-making partnerships with their AI teammates, while “grandmasters think they know better than AIs and override them when they disagree—that’s their downfall,” observes Çelikok.

This article is based on the research paper ‘GraphWorld: Fake Graphs Bring Real Insights for GNNs’. A graph is a structure consisting of a set of items in which some pairings of the objects are in some […].
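The GraphWorld title refers to generating synthetic ("fake") graphs for studying graph neural networks (GNNs). As a hedged illustration of what such a generator can look like, the Python sketch below samples a graph from a basic stochastic block model, in which nodes belong to planted communities and the chance of an edge depends on whether two nodes share a community. This is an assumption for illustration only: it is not GraphWorld's code or API, and the function name and parameters (`sizes`, `p_in`, `p_out`) are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple


def stochastic_block_model(
    sizes: List[int], p_in: float, p_out: float, seed: int = 0
) -> Tuple[Dict[int, Set[int]], List[int]]:
    """Sample an undirected graph with planted communities (hypothetical helper).

    Nodes in the same block are linked with probability p_in, nodes in
    different blocks with probability p_out. Returns (adjacency, labels).
    """
    if not (0.0 <= p_in <= 1.0 and 0.0 <= p_out <= 1.0):
        raise ValueError("probabilities must lie in [0, 1]")
    rng = random.Random(seed)  # local RNG so results are reproducible
    labels = [block for block, size in enumerate(sizes) for _ in range(size)]
    n = len(labels)
    adj: Dict[int, Set[int]] = {v: set() for v in range(n)}
    for u in range(n):
        for v in range(u + 1, n):  # each unordered pair once, no self-loops
            p = p_in if labels[u] == labels[v] else p_out
            if rng.random() < p:
                adj[u].add(v)
                adj[v].add(u)
    return adj, labels


if __name__ == "__main__":
    adj, labels = stochastic_block_model([5, 5], p_in=0.8, p_out=0.05, seed=42)
    n_edges = sum(len(nbrs) for nbrs in adj.values()) // 2
    print(f"{len(adj)} nodes, {n_edges} edges, labels={labels}")
```

Because the community labels are known by construction, graphs like this can serve as node-classification test cases; sweeping `p_in` and `p_out` moves from clearly separated to barely distinguishable communities.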

Please describe the mindset needed to be a roboticist.

In order to be a roboticist, you need to have an open mindset and an insatiable sense of curiosity. You need to be able to understand the needs of people and how they accomplish tasks before you can develop robots that can meet these requirements. That’s how we can maximise usefulness in the robots of tomorrow.

When you walk through our office, you will also notice that roboticists come from all walks of life and many different disciplines. So we encourage anyone who is interested in robots to apply for a job with us.

Future quantum computers are expected not only to solve particularly tricky computing tasks, but also to be connected to a network for the secure exchange of data. In principle, quantum gates could be used for these purposes. But until now, it has not been possible to realize them with sufficient efficiency. By a sophisticated combination of several techniques, researchers at the Max Planck Institute of Quantum Optics (MPQ) have now taken a major step towards overcoming this hurdle.

For decades, computers have been getting faster and more powerful with each new generation. This development makes it possible to constantly open up new applications, for example in systems with artificial intelligence. But further progress is becoming increasingly difficult to achieve with established computer technology. For this reason, researchers are now setting their sights on alternative, completely new concepts that could be used in the future for some particularly difficult computing tasks. These concepts include quantum computers.

Their function is not based on combinations of digital zeros and ones, the classical bits, as is the case with conventional microelectronic computers. Instead, a quantum computer uses quantum bits, or qubits for short, as the basic units for encoding and processing information. They are the counterparts of bits in the quantum world but differ from them in one crucial feature: a qubit is not limited to one of two fixed states such as zero or one; it can also be in a superposition of both, a weighted combination whose weights determine how likely each outcome is when the qubit is measured. In principle, this allows a quantum computer to work on many combinations of values at once instead of processing one logical operation after the other, although only certain algorithms can turn this into a real speed-up.
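Superposition can be made concrete with the standard textbook state-vector description: a qubit's state is a pair of complex amplitudes for |0> and |1>, and measuring it yields each outcome with probability equal to the squared magnitude of its amplitude. The minimal Python sketch below simulates this classically, including a Hadamard gate (which creates an equal superposition) and a two-qubit CNOT gate (which, combined with it, produces an entangled state). It is a generic illustration, not the MPQ team's technique, and the helper names are hypothetical.

```python
import math
from typing import List

# Amplitudes over the computational basis states, e.g. [a0, a1] for one qubit.
State = List[complex]


def apply_hadamard(state: State) -> State:
    """Apply the Hadamard gate to a single-qubit state [a0, a1]."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return [s * (a0 + a1), s * (a0 - a1)]


def tensor(a: State, b: State) -> State:
    """Combine two one-qubit states into a joint state ordered |00>, |01>, |10>, |11>."""
    return [x * y for x in a for y in b]


def apply_cnot(state: State) -> State:
    """Apply CNOT to a two-qubit state (first qubit is the control):
    flipping the target when the control is 1 swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state: State) -> List[float]:
    """Born rule: outcome k is observed with probability |a_k|^2."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    zero: State = [1 + 0j, 0j]
    plus = apply_hadamard(zero)  # equal superposition of |0> and |1>
    print([round(p, 3) for p in probabilities(plus)])  # [0.5, 0.5]

    bell = apply_cnot(tensor(plus, zero))  # (|00> + |11>) / sqrt(2)
    print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]
```

A classical simulation like this must store 2^n amplitudes for n qubits, which is one reason simulating large quantum systems on conventional computers quickly becomes impractical.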