I believe there have been real advances in AI thanks to gains in processing performance; however, as I highlighted earlier, many of the principles, such as complex algorithms and the pattern and predictive analysis of large volumes of information, haven’t changed much since my own early work with AI. Where I do have concerns is the foundational infrastructure that “connected” AI resides on. The ongoing hacking and attacks we see today could cause AI adoption to fall well short of expectations and, in the long run, make AI look pretty bad.

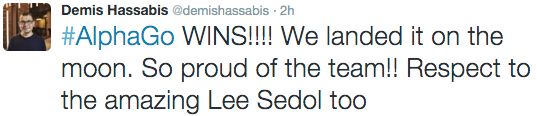

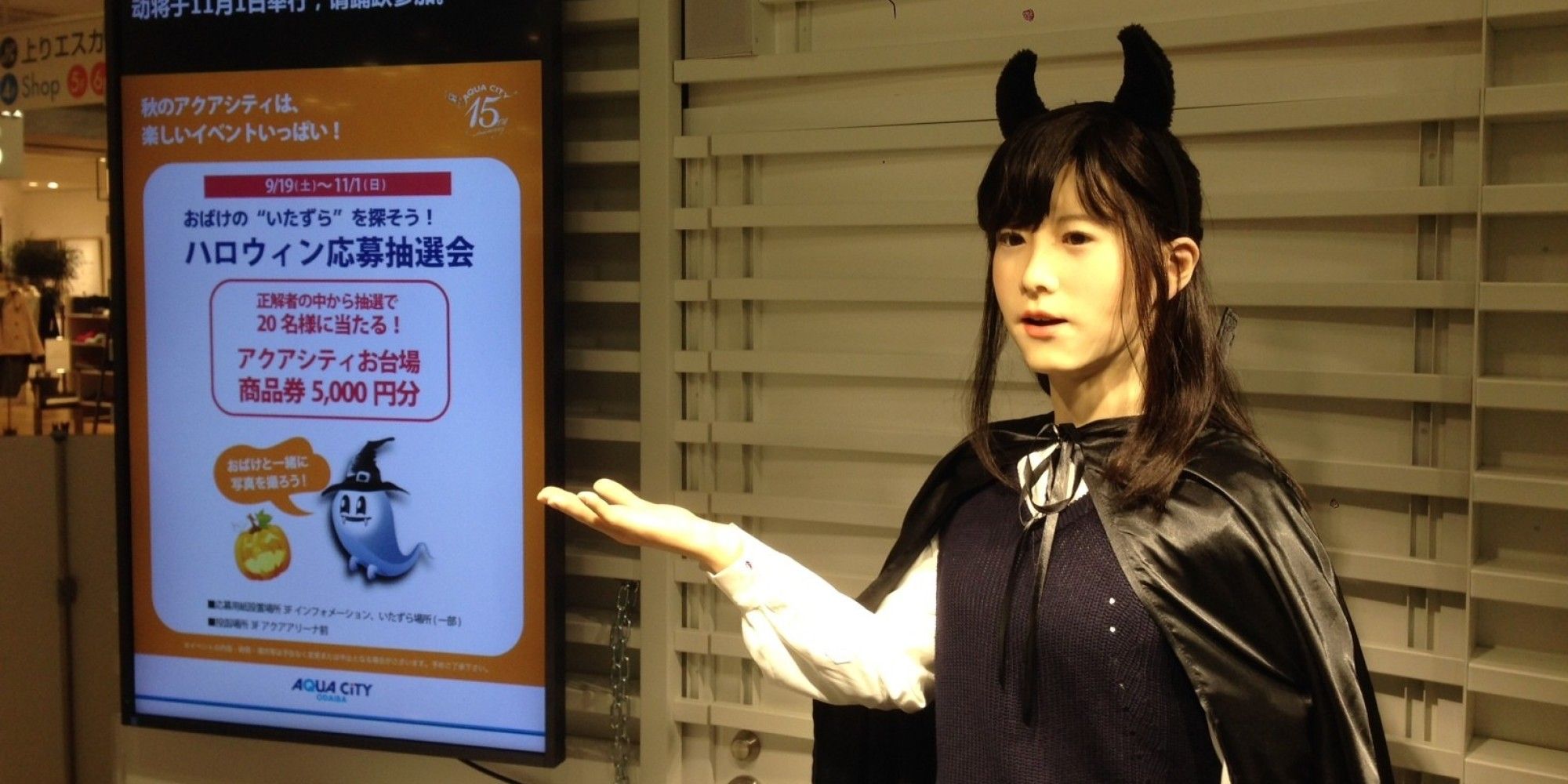

A debate in New York tries to settle the question.

By Larry Greenemeier on March 10, 2016.