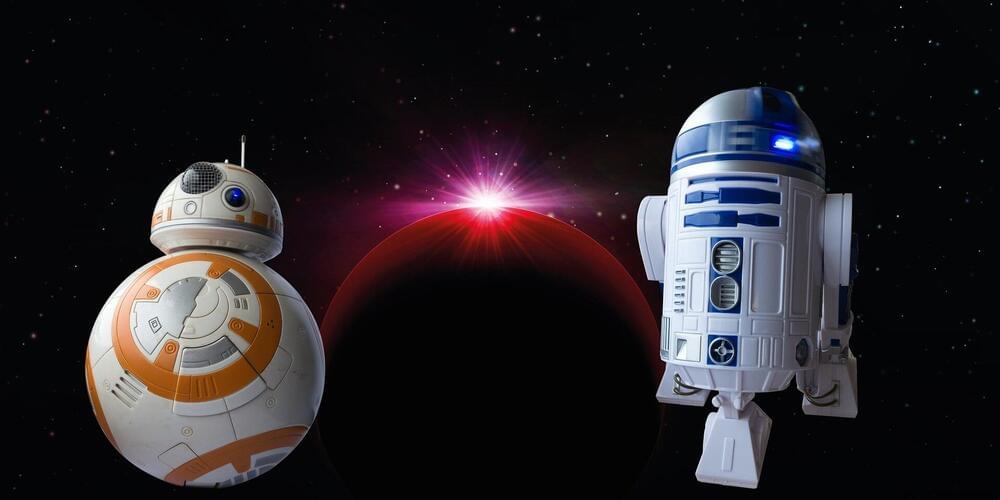

The robotic explorer GLIMPSE, created at ETH Zurich and the University of Zurich, has made it into the final round of a competition for prospecting resources in space. The long-term goal is for the robot to explore the south polar region of the moon.

The south polar region of the moon is believed to contain many resources that would be useful for lunar base operations, such as metals, water in the form of ice, and oxygen stored in rocks. But finding them requires an explorer robot that can withstand the extreme conditions in this part of the moon. Numerous craters make moving around difficult, while the low angle of the sunlight and thick layers of dust impede the use of light-based measuring instruments. Strong fluctuations in temperature pose a further challenge.

The European Space Agency (ESA) and the European Space Resources Innovation Center (ESRIC) called on European and Canadian engineering teams to develop robots and tools capable of mapping and prospecting the shadowy south polar region of the moon, between the Shoemaker and Faustini craters. To do this, the researchers had to adapt terrestrial exploration technologies to the harsh conditions on the moon.