
In 1903, the Wright brothers flew the first successful powered airplane. By 1914, just over a decade after that first flight, aircraft were being used in combat in World War I for reconnaissance, bombing and aerial combat. Most historians regard this as a revolution in military affairs. The battlefield, previously confined to land and sea, now included the sky, permanently altering the way wars are fought. With the new technology came new strategy, policy, tactics, procedures and formations.

Twenty years ago, unmanned aircraft systems (UASs) were much less prevalent and capable. Today, their threat potential and risk profile have increased significantly. UASs are becoming increasingly affordable and capable, with improved optics, greater speed, longer range and increased lethality.

The U.S. has long been a proponent of using unmanned aircraft systems: the MQ-9 Reaper and MQ-1 Predator have excelled in combat operations, and smaller squad-level UASs such as the RQ-11 Raven and the Switchblade are being fielded. While optimizing friendly UAS capability can yield great results on the battlefield, adversary use of unmanned aircraft systems can be devastating.

In the early 2010s, neuroscientists developed the first computational models of the primate visual system built on deep neural networks such as AlexNet and its successors. The union looked promising: When monkeys and artificial neural nets were shown the same images, for example, the activity of the real neurons and the artificial neurons showed an intriguing correspondence. Artificial models of hearing and odor detection followed.

But as the field progressed, researchers realized the limitations of supervised training. For instance, in 2017, Leon Gatys, a computer scientist then at the University of Tübingen in Germany, and his colleagues took an image of a Ford Model T, then overlaid a leopard skin pattern across the photo, generating a bizarre but easily recognizable image. A leading artificial neural network correctly classified the original image as a Model T, but considered the modified image a leopard. It had fixated on the texture and had no understanding of the shape of a car (or a leopard, for that matter).

Self-supervised learning strategies are designed to avoid such problems. In this approach, humans don’t label the data. Rather, “the labels come from the data itself,” said Friedemann Zenke, a computational neuroscientist at the Friedrich Miescher Institute for Biomedical Research in Basel, Switzerland. Self-supervised algorithms essentially create gaps in the data and ask the neural network to fill in the blanks. In a so-called large language model, for instance, the training algorithm will show the neural network the first few words of a sentence and ask it to predict the next word. When trained with a massive corpus of text gleaned from the internet, the model appears to learn the syntactic structure of the language, demonstrating impressive linguistic ability — all without external labels or supervision.
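To make "the labels come from the data itself" concrete, here is a minimal sketch of how raw text can be turned into training pairs with no human annotation. It shows both variants described above: hiding a word and asking for it back ("fill in the blanks"), and predicting the next word from the words before it. The function names and the `[MASK]` placeholder are hypothetical, and splitting on whitespace is a simplification; real language models use subword tokenizers and train a neural network on millions of such pairs.

```python
from typing import List, Tuple

MASK = "[MASK]"  # hypothetical placeholder token marking a hidden word


def next_word_examples(text: str) -> List[Tuple[List[str], str]]:
    """Turn raw text into (context, next word) training pairs.

    No human labels are needed: each target is simply the word that
    follows its context in the original text.
    """
    words = text.split()  # whitespace split; real models use subword tokenizers
    return [(words[:i], words[i]) for i in range(1, len(words))]


def fill_in_blank_examples(text: str) -> List[Tuple[str, str]]:
    """Hide one word at a time; the hidden word becomes the target label."""
    words = text.split()
    examples = []
    for i, word in enumerate(words):
        gapped = words[:i] + [MASK] + words[i + 1:]
        examples.append((" ".join(gapped), word))
    return examples


for context, target in next_word_examples("the cat sat on the mat"):
    print(" ".join(context), "->", target)
```

Every sentence in a corpus yields several examples this way, which is why a large body of unlabeled internet text is enough to train such a model.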

In this award-winning AutoML conference paper, Amazon Web Services and ETH Zürich scientists present a new way to decide when to terminate Bayesian optimization.

Bayesian optimization (BO) is a widely popular approach for hyperparameter optimization (HPO) in machine learning. At its core, BO iteratively evaluates promising configurations until a user-defined budget, such as wall-clock time or number of iterations, is exhausted. While the final performance after tuning heavily depends on the provided budget, it is hard to specify an optimal value in advance. In this work, we propose an effective and intuitive termination criterion for BO that automatically stops the procedure if it is sufficiently close to the global optimum. Our key insight is that the discrepancy between the true objective (predictive performance on test data) and the computable target (validation performance) suggests stopping once the sub-optimality in optimizing the target is dominated by the statistical estimation error. Across an extensive range of real-world HPO problems and baselines, we show that our termination criterion achieves a better trade-off between test performance and optimization time. Additionally, we find that overfitting may occur in the context of HPO, which is arguably an overlooked problem in the literature, and show how our termination criterion helps to mitigate this phenomenon on both small and large datasets.
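The key insight can be sketched as a simple comparison: stop once a bound on how far the search is from the best achievable validation loss falls below the noise in the validation estimate itself. The sketch below is a hedged illustration under stated assumptions, not the paper's exact formulation. It assumes a minimization problem, assumes the BO surrogate model supplies upper and lower confidence bounds (passed in here as plain numbers), and uses the sample standard deviation of per-fold cross-validation losses as a stand-in for the statistical estimation error. The helper names `regret_upper_bound`, `estimation_error` and `should_stop` are hypothetical, and the numbers are made up.

```python
import statistics
from typing import Sequence

# Hypothetical helpers for a BO stopping rule on a *minimization* target
# (e.g., validation loss). Confidence bounds are assumed to come from the
# BO surrogate model; here they are supplied as plain numbers.


def regret_upper_bound(ucb_evaluated: Sequence[float],
                       lcb_candidates: Sequence[float]) -> float:
    """Bound on how far the incumbent may be from the target's optimum.

    The best evaluated configuration is no worse than the smallest upper
    confidence bound among evaluated points, and the optimum is no better
    than the smallest lower confidence bound over all candidates
    (the candidate set should include the evaluated points).
    """
    return min(ucb_evaluated) - min(lcb_candidates)


def estimation_error(fold_losses: Sequence[float]) -> float:
    """Assumed proxy for statistical error: sample std. dev. across CV folds."""
    return statistics.stdev(fold_losses)


def should_stop(ucb_evaluated: Sequence[float],
                lcb_candidates: Sequence[float],
                fold_losses: Sequence[float]) -> bool:
    """Stop once the remaining optimization gap is dominated by estimation noise."""
    return regret_upper_bound(ucb_evaluated, lcb_candidates) < estimation_error(fold_losses)


folds = [0.18, 0.22, 0.20, 0.19, 0.21]  # made-up validation loss per fold
print(should_stop([0.21, 0.19], [0.18, 0.17, 0.185], folds))   # → False (gap 0.02 vs ~0.016)
print(should_stop([0.21, 0.19], [0.185, 0.18, 0.188], folds))  # → True (gap 0.01 vs ~0.016)
```

The design choice this illustrates: further tuning is pointless once any remaining improvement on the validation target would be smaller than the noise in measuring that target, since such gains need not carry over to test data.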

Boston Dynamics gets into AI.

SEOUL/CAMBRIDGE, MA, August 12, 2022 – Hyundai Motor Group (the Group) today announced the launch of Boston Dynamics AI Institute (the Institute), with the goal of making fundamental advances in artificial intelligence (AI), robotics and intelligent machines. The Group and Boston Dynamics will make an initial investment of more than $400 million in the new Institute, which will be led by Marc Raibert, founder of Boston Dynamics.

As a research-first organization, the Institute will work on solving the most important and difficult challenges facing the creation of advanced robots. Elite talent across AI, robotics, computing, machine learning and engineering will develop technology for robots and use it to advance their capabilities and usefulness. The Institute’s culture is designed to combine the best features of university research labs with those of corporate development labs while working in four core technical areas: cognitive AI, athletic AI, organic hardware design, and ethics and policy.

“Our mission is to create future generations of advanced robots and intelligent machines that are smarter, more agile, perceptive and safer than anything that exists today,” said Marc Raibert, executive director of the Boston Dynamics AI Institute. “The unique structure of the Institute (top talent focused on fundamental solutions with sustained funding and excellent technical support) will help us create robots that are easier to use, more productive, able to perform a wider variety of tasks, and that are safer working with people.”


Controversy over Google’s AI program is raising questions about just how powerful it is. Is it even safe? By Amelia Tait.

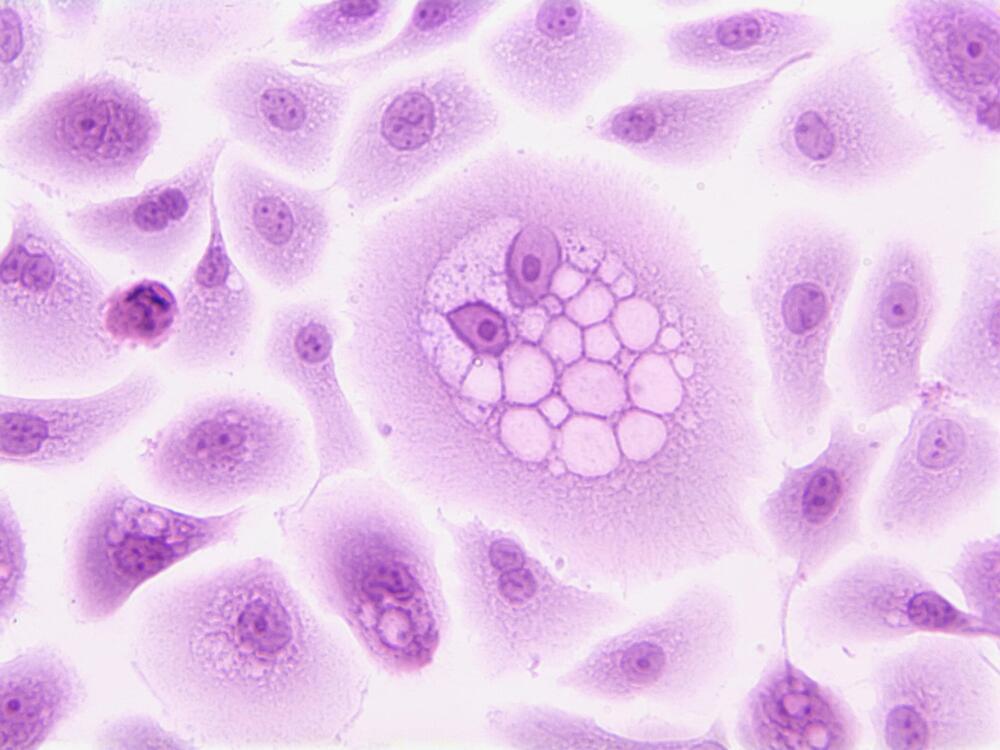

Vanderbilt researchers have developed an active machine learning approach to predict the effects of tumor variants of unknown significance, or VUS, on sensitivity to chemotherapy. VUS, mutated bits of DNA with unknown impacts on cancer risk, are constantly being identified. The growing number of rare VUS makes it imperative for scientists to analyze them and determine the kind of cancer risk they impart.

Traditional prediction methods display limited power and accuracy for rare VUS. Even machine learning, an artificial intelligence tool that leverages data to “learn” and boost performance, falls short when classifying some VUS. Recent work by the lab of Walter Chazin, Chancellor’s Chair in Medicine and professor of biochemistry and chemistry, led by co-first authors and postdoctoral fellows Alexandra Blee and Bian Li, featured an active machine learning technique.

Active machine learning trains an algorithm on existing data, as in conventional machine learning, but also feeds it new information between rounds of training. Chazin and his lab identified the VUS for which predictions were least certain, performed biochemical experiments on those VUS and incorporated the resulting data into subsequent rounds of training. This allowed the model to continuously improve its VUS classification.
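The loop described above (predict, find the least certain cases, run experiments on them, retrain) is commonly called uncertainty sampling. The sketch below is a toy illustration of that loop, not the Chazin lab's model: it assumes a one-dimensional feature, a deliberately simple threshold classifier, and a hypothetical `true_label` function standing in for the costly biochemical experiments. All function names and parameters (`fit_threshold`, `prob_positive`, `active_learning`, `scale`, `batch`) are illustrative assumptions.

```python
import math
from typing import Callable, List, Tuple

Labeled = List[Tuple[float, int]]


def fit_threshold(labeled: Labeled) -> float:
    """Toy 1-D classifier: decision boundary halfway between the class means."""
    neg = [x for x, y in labeled if y == 0]
    pos = [x for x, y in labeled if y == 1]
    return (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2


def prob_positive(x: float, threshold: float, scale: float = 0.05) -> float:
    """Logistic score; values near 0.5 mean the model is least certain."""
    return 1.0 / (1.0 + math.exp(-(x - threshold) / scale))


def active_learning(labeled: Labeled, pool: List[float],
                    oracle: Callable[[float], int],
                    rounds: int, batch: int) -> Tuple[float, Labeled]:
    labeled, pool = list(labeled), list(pool)
    threshold = fit_threshold(labeled)
    for _ in range(rounds):
        if not pool:
            break
        # Uncertainty sampling: query the points whose prediction is closest to 0.5.
        pool.sort(key=lambda x: abs(prob_positive(x, threshold) - 0.5))
        queried, pool = pool[:batch], pool[batch:]
        # The oracle stands in for running an experiment on each queried case.
        labeled.extend((x, oracle(x)) for x in queried)
        # Retrain on the enlarged labeled set before the next round.
        threshold = fit_threshold(labeled)
    return threshold, labeled


def true_label(x: float) -> int:
    """Hypothetical ground truth with its decision boundary at 0.62."""
    return int(x > 0.62)


start = [(0.0, 0), (1.0, 1)]
pool = [i / 100 for i in range(1, 100)]
threshold, labeled = active_learning(start, pool, true_label, rounds=5, batch=2)
print(round(threshold, 3), len(labeled))
```

With only ten queried points, the learned boundary moves from its initial 0.5 to near the true value, because each round spends its "experiments" on exactly the cases the model is unsure about rather than on cases it already classifies confidently.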

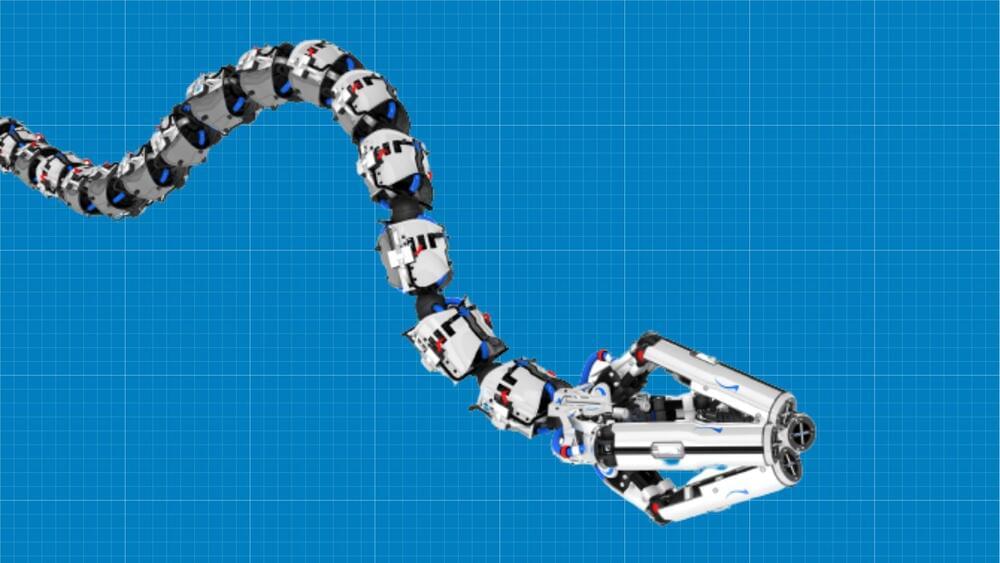

University of Toronto researchers are working on advanced snake-like robots with many useful applications.

Slender, flexible, and extensible robots

A team led by Jessica Burgner-Kahrs, director of the Continuum Robotics Lab at the University of Toronto Mississauga, is building very slender, flexible, and extensible robots that could help doctors save lives by reaching difficult-to-access places, according to a press release from the university.