There were two Teslabot videos. The first is a discussion with James Douma, who describes his perspective on advances in neural nets. He describes how GPT-3 created a foundational capability by cracking language. He believes the Teslabot will leverage neural nets to crack robotic methods for bipedal movement and to master identifying and picking up objects.

These Are Not Photos: Beautiful Landscapes Created by New AI

First, photographers were creating portraits of people who don’t exist; now Aurel Manea has created a series of “landscape photos” using a new artificial intelligence (AI) software program called Stable Diffusion.

Manea tells PetaPixel that he has been blown away by what the London and Los Altos-based startup Stability AI has created.

“I can’t, as a landscape photographer myself, emphasize enough what these new technologies will mean for photography,” explains Manea.

Fruit-picking drones can solve the farm labor shortage

These autonomous robotic pickers can harvest precisely and gently without tiring or needing a break.

The Hidden Pattern: A Patternist Philosophy of Mind

The Hidden Pattern presents a novel philosophy of mind, intended to form a coherent conceptual framework within which it is possible to understand the diverse aspects of mind and intelligence in a unified way. The central concept of the philosophy presented is the concept of “pattern”: minds and the world they live in and co-create are viewed as patterned systems of patterns, evolving over time, and various aspects of subjective experience and individual and social intelligence are analyzed in detail in this light. Many of the ideas presented are motivated by recent research in artificial intelligence and cognitive science, and the author’s own AI research is discussed in moderate detail in one chapter. However, the scope of the book is broader than this, incorporating insights from sources as diverse as Vedantic philosophy, psychedelic psychotherapy, Nietzschean and Peircean metaphysics and quantum theory. One of the unique aspects of the patternist approach is the way it seamlessly fuses the mechanistic, engineering-oriented approach to intelligence and the introspective, experiential approach to intelligence.

Artificial Intelligence Officially Has Sentience?

Just weeks after a former Google engineer claimed that its AI was sentient, Facebook parent company Meta is warning that its new BlenderBot 3 chatbot could be gaining some form of conscious awareness. Meta’s chatbot was developed for research purposes and the company has identified instances where the bot has overtly lied and treated users rudely.

Holographic Conversational Video Experience allows you to communicate with deceased relatives

The company StoryLife has developed a technology called Holographic Conversational Video Experience that allows you to communicate with holograms of deceased relatives.

What we know

A U.S. startup has learned how to create a digital clone of a person before they die, using two dozen synchronized cameras. The cameras record the person's answers to questions, and the resulting material is then used to train artificial intelligence.

Open-source software gives a leg up to robot research

Carnegie Mellon researchers have developed open-source software that enables more agile movement in legged robots.

Robots can help humans with tasks like aiding disaster recovery efforts or monitoring the environment. In the case of quadrupeds, robots that walk on four legs, their mobility requires many software components to work together seamlessly. Most researchers must spend much of their time developing lower-level infrastructure instead of focusing on high-level behaviors.

Aaron Johnson’s team in the Robomechanics Lab at Carnegie Mellon University’s College of Engineering has experienced these frustrations firsthand. The researchers have often had to rely on simple models for their work because existing software solutions were not open-sourced, did not provide a modular framework, and lacked end-to-end functionality.

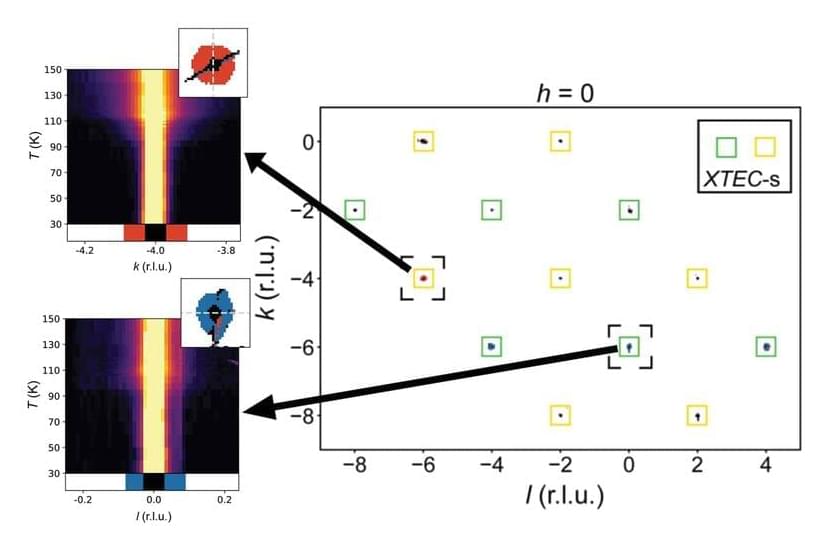

Uncovering nature’s patterns at the atomic scale in living color

Color coding makes aerial maps much more easily understood. Through color, we can tell at a glance where there is a road, forest, desert, city, river or lake.

Working with several universities, the U.S. Department of Energy’s (DOE) Argonne National Laboratory has devised a method for creating color-coded graphs of large volumes of data from X-ray analysis. This new tool uses computational data sorting to find clusters related to physical properties, such as an atomic distortion in a crystal structure. It should greatly accelerate future research on structural changes on the atomic scale induced by varying temperature.

The research team published their findings in the Proceedings of the National Academy of Sciences in an article titled “Harnessing interpretable and unsupervised machine learning to address big data from modern X-ray diffraction.”

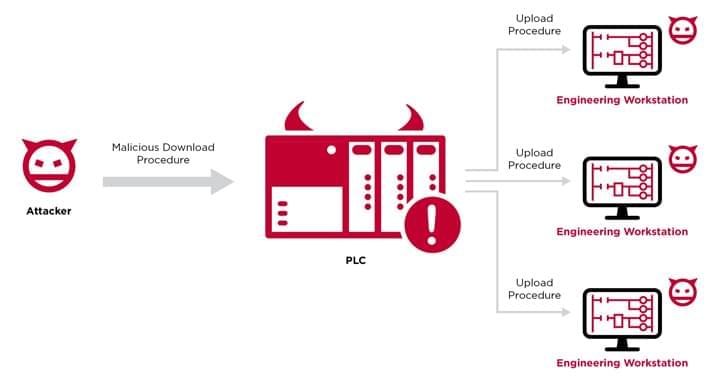

New Evil PLC Attack Weaponizes PLCs to Breach OT and Enterprise Networks

Cybersecurity researchers have detailed a novel attack technique that weaponizes programmable logic controllers (PLCs) to gain an initial foothold in engineering workstations and subsequently invade operational technology (OT) networks.

Dubbed “Evil PLC” attack by industrial security firm Claroty, the issue impacts engineering workstation software from Rockwell Automation, Schneider Electric, GE, B&R, Xinje, OVARRO, and Emerson.

Programmable logic controllers are a crucial component of industrial devices that control manufacturing processes in critical infrastructure sectors. Besides orchestrating automation tasks, PLCs are also configured to start and stop processes and generate alarms.