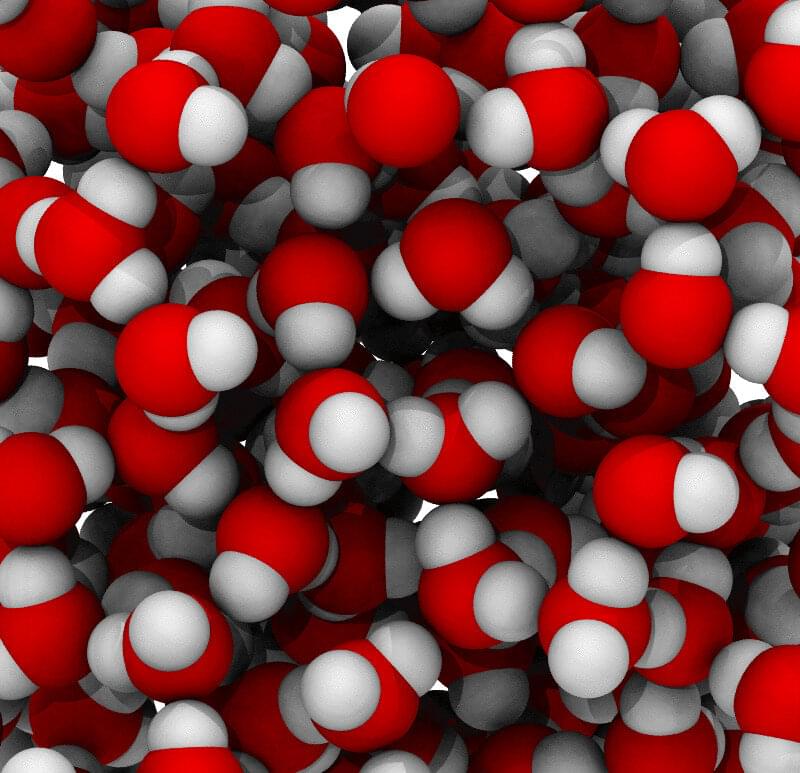

Water has puzzled scientists for decades. For the last 30 years or so, they have theorized that when cooled to a very low temperature, such as -100°C, water might separate into two liquid phases of different densities. Like oil and water, these phases don’t mix, and they may help explain some of water’s other strange behavior, like how it becomes less dense as it cools.

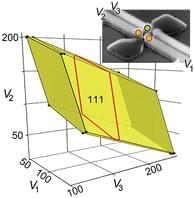

It’s almost impossible to study this phenomenon in a lab, though, because water crystallizes into ice so quickly at such low temperatures. Now, new research from the Georgia Institute of Technology uses machine learning models to better understand water’s phase changes, opening more avenues for a better theoretical understanding of various substances. With this technique, the researchers found strong computational evidence in support of water’s liquid-liquid transition, a result that can be applied to real-world systems that use water to operate.

“We are doing this with very detailed quantum chemistry calculations that are trying to be as close as possible to the real physics and physical chemistry of water,” said Thomas Gartner, an assistant professor in the School of Chemical and Biomolecular Engineering at Georgia Tech. “This is the first time anyone has been able to study this transition with this level of accuracy.”