ENGINEERING POINT OF VIEW

Will AI take your job? ‘No one is safe from this’ — Alan Thompson

Alan Thompson, AI Consultant and Former Chairman of Mensa International, examines the latest trends in artificial intelligence, as well as its applications to finance, professional services, and the military. He discusses the possibility that AI could become sentient and even dangerous with David Lin, Anchor and Producer at Kitco News.

Alan Thompson’s website: https://lifearchitect.ai/about-alan/

Follow David Lin on Twitter: @davidlin_TV (https://twitter.com/davidlin_TV)

Follow Kitco News on Twitter: @KitcoNewsNOW (https://twitter.com/KitcoNewsNOW)

0:00 - Intro

1:57 - Overview of AI

8:07 - ChatGPT

11:51 - Human similarities

15:53 - Microsoft and Google

19:55 - AI replacing workers

21:54 - Applications of AI

24:26 - Technological singularity

26:05 - Dangers of AI

28:52 - Transhumanism

#technology #ai #chatgpt

Kitco NEWS is a global news network based in Montreal, with bureaus in New York, Hong Kong, New Mexico, London and Vancouver. Since 2009, our journalists have helped investors make informed decisions through in-depth reporting, daily market updates, and interviews with key industry figures. We aim to accurately and impartially cover the economy, stock markets, commodities, cryptocurrencies, mining and metals.

LEAKED: Elon’s Secret AI Lab, Tesla Mexico, Toyota Doomed

Join Patreon: https://www.patreon.com/solvingthemoneyproblem

➡ FREE One Year Supply of Vitamin D + 5 AG1 Travel Packs https://athleticgreens.com/SMR

➡ Oura Ring https://ouraring.com/smr (Track your sleep, readiness & activity)

My Tesla Stock Price Targets: https://www.patreon.com/posts/tesla-stock-bull-57460691/

Merch: https://solving-the-money-problem.creator-spring.com/

Twitter: https://twitter.com/stevenmarkryan

AFFILIATE DISCLOSURE: I earn a commission on every purchase made through my links.

🚀 SUPPORT THE CHANNEL ON PATREON

◆ Patreon https://www.patreon.com/solvingthemoneyproblem.

BECOME A MEMBER OF THE CHANNEL

◆ Join https://www.youtube.com/channel/UCagiBBx1prefrlsDzDxuA9A/join.

CHANNEL MERCH

◆ Merch store https://solving-the-money-problem.creator-spring.com/

MERCH LINKS

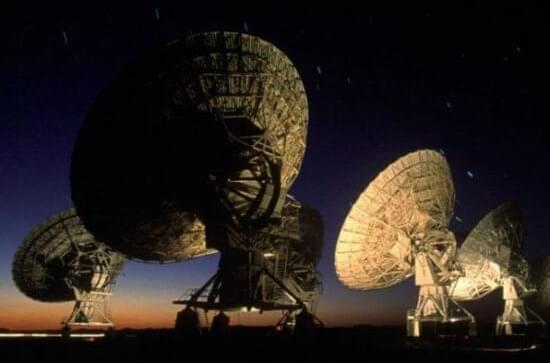

Will AI Make First Contact with Extra-Terrestrial Intelligence?

Will a machine learning AI be the way we find out we are not alone in the Universe?

In a paper published in Nature Astronomy in January 2023, a collaboration of authors from universities in Toronto, Canada; Berkeley, California; Manchester in the United Kingdom; Malta; Queensland and Western Australia; and the SETI Institute created a machine learning algorithm based on a variational autoencoder, a type of neural network that learns through the unsupervised study of unlabelled data. They used it to search for technosignatures in 150 terabytes of radio data from 820 nearby stars. The data came from the Green Bank Telescope in West Virginia, the world’s largest fully steerable radio telescope. This data had previously been searched in 2017 using traditional techniques.

Radio signals are abundant throughout the Universe, and they represent the most effective way for us to find out whether we are a solo act or one of many technical civilizations. Our own contribution to radio traffic has been going on for more than a century, which means an alien civilization within a hundred light-years of us, with technology similar to ours, could now detect us.

SETI (the Search for Extraterrestrial Intelligence) has used radio telescopes and receiving antennae since 1960 to look for signals from space with patterns similar to those we produce. So far, however, this has proven harder than finding the proverbial needle in a haystack. That’s why the application of AI to the vast amounts of radio data coming from space is seen as a step up in our efforts to detect alien intelligence. It seems ironic that an AI may be the way we first discover alien intelligence originating from distant solar systems. And it may be that those alien species are using their own AIs to do the same.

“Sorry in advance!” Snapchat warns of hallucinations with new AI conversation bot

On Monday, Snapchat announced an experimental AI-powered conversational chatbot called “My AI,” powered by ChatGPT-style technology from OpenAI. My AI will be available for $3.99 a month for Snapchat+ subscribers and is rolling out “this week,” according to a news post from Snap, Inc.

But like its GPT-powered cousins, ChatGPT and Bing Chat, Snap says that My AI is prone to “hallucinations,” which are unexpected falsehoods generated by an AI model. On this point, Snap includes a rather lengthy disclaimer in its My AI announcement post:

Talend Data Fabric adds data observability features, connector updates

Data management and integration veteran Talend today debuted the winter ‘23 release of its core platform, providing enhanced observability, automation and connectivity for enterprises’ data assets. The update comes over a month after the company announced it is being acquired by Qlik in a transaction set to close in the first half of 2023.

Talend started in 2004 as a data integrator, but gradually expanded to offer Talend Data Fabric, a unified solution that works across any cloud, hybrid or multicloud environment. The solution combines enterprise-grade data discovery, integration, quality (automatic cleaning and profiling) and governance capabilities. It is intended to reduce the effort involved in working with data, while providing teams with clean and uncompromised information for decision-making.

After losing billions of dollars on the metaverse, Mark Zuckerberg’s launching a ‘top-level’ team at Meta to develop AI products for WhatsApp, Messenger, and Instagram

Looks like Meta is also getting into the AI action.

The tech giant will be creating a new “top-level product group” focused on generative AI — AI that can create content — to focus on “building delightful experiences around this technology into all of our different products,” CEO Mark Zuckerberg said in a Facebook post on Monday.

“Over the longer term, we’ll focus on developing AI personas that can help people in a variety of ways,” he said, adding Meta is exploring AI experiences with text in WhatsApp and Messenger, and with images in Instagram.

Zuckerberg didn’t specify in the post what top-level meant.

Elon Musk recruits team to develop OpenAI’s ChatGPT rival

The war is on. 😃

Elon Musk has approached AI researchers in recent weeks about forming a new research lab to develop an alternative to OpenAI’s ChatGPT, The Information reported on Monday, citing people with direct knowledge of the effort.

Tesla and Twitter chief Musk has been recruiting Igor Babuschkin, a researcher who recently left Alphabet’s DeepMind AI unit, the report said.

The report comes after ChatGPT, a text-based chatbot developed by OpenAI that can draft prose, poetry or even computer code on command, gained widespread attention in Silicon Valley.