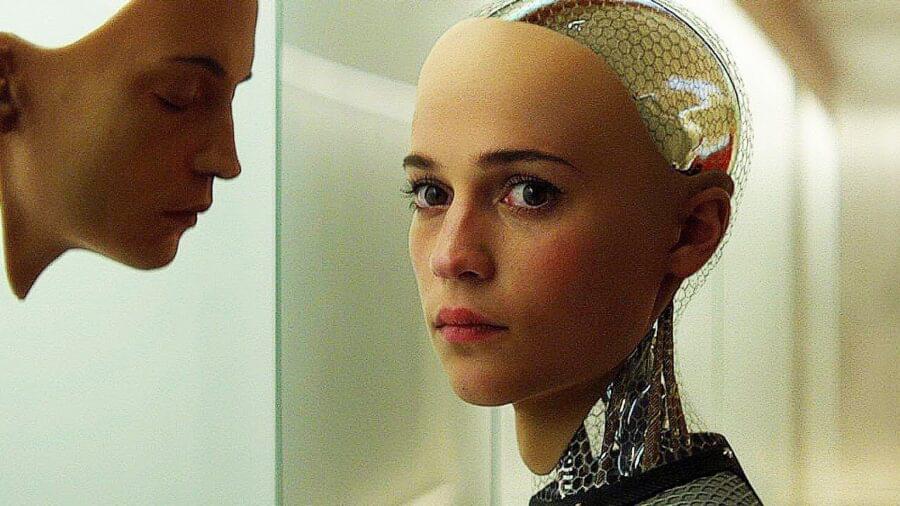

My music score for Rotwang’s robot in Fritz Lang’s silent German expressionist film METROPOLIS (1927).

This film had a major influence on me, but that would come later. I was 9 years old when I saw it for the first time. Little did I know that this scene in particular would haunt me to this day.

I tried to convey the feelings I had as a child with this composition, which I call “Phantasmaglorious,” meaning frightening and darkly beautiful. It is a fitting tribute to Fritz Lang’s masterwork, to Alfred Abel as Joh Fredersen, the Master of Metropolis, and to Rudolf Klein-Rogge as C. A. Rotwang, the mad scientist who creates the spectre of my childhood nightmares.

This movie is the definition of sublime.