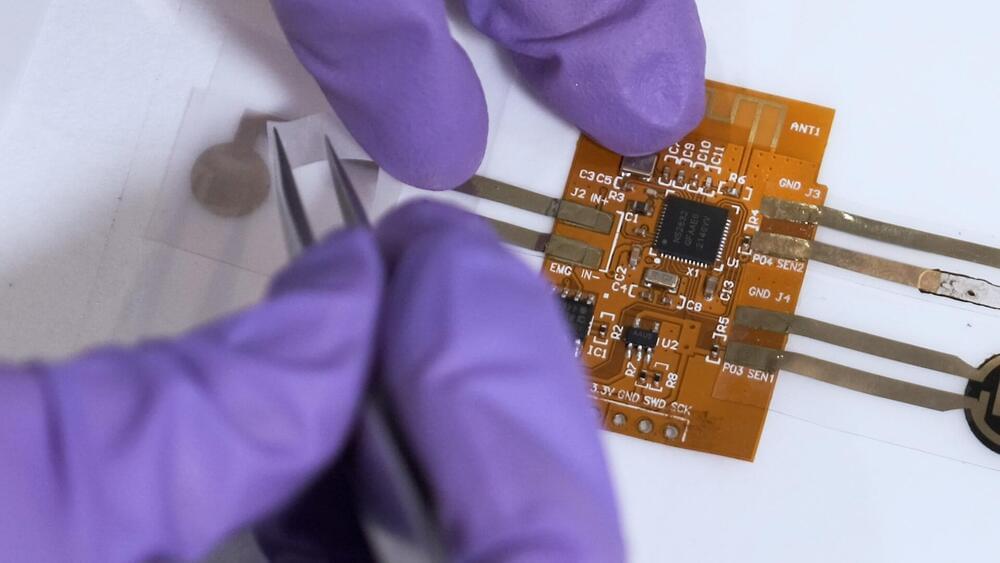

For centuries, the town of Carrara’s prosperity has depended on artists. Its famed Tuscan marble quarries supplied artists like Michelangelo, Canova and Bernini with the finest material for their sculptures. Today, robots are being used to create modern-day works. Chris Livesay has more.

#news #marble #technology

Each weekday morning, “CBS Mornings” co-hosts Gayle King, Tony Dokoupil and Nate Burleson bring you the latest breaking news, smart conversation and in-depth feature reporting. “CBS Mornings” airs weekdays at 7 a.m. on CBS and streams at 8 a.m. ET on the CBS News app.

Watch CBS News: http://cbsn.ws/1PlLpZ7c.

Download the CBS News app: http://cbsn.ws/1Xb1WC8

Follow “CBS Mornings” on Instagram: https://bit.ly/3A13OqA

Like “CBS Mornings” on Facebook: https://bit.ly/3tpOx00

Follow “CBS Mornings” on Twitter: https://bit.ly/38QQp8B

Try Paramount+ free: https://bit.ly/2OiW1kZ

For video licensing inquiries, contact: [email protected]