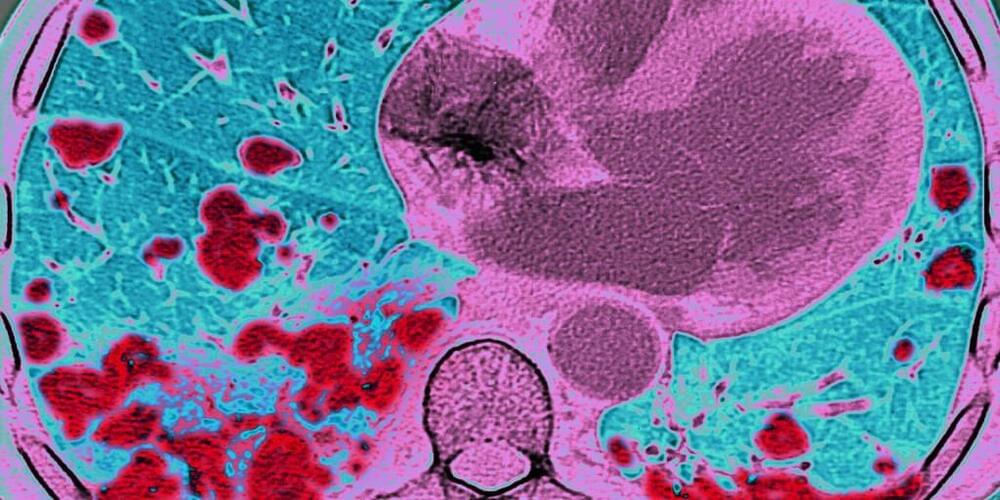

Researchers in Boston are on the verge of what they say is a major advancement in lung cancer screening: artificial intelligence that can detect early signs of the disease years before doctors would find it on a CT scan.

The new AI tool, called Sybil, was developed by scientists at the Mass General Cancer Center and the Massachusetts Institute of Technology in Cambridge. In one study, it accurately predicted whether a person would develop lung cancer within the next year 86% to 94% of the time.

The Centers for Disease Control and Prevention currently recommends that adults at risk for lung cancer get an annual low-dose CT scan to screen for the disease.