The former Microsoft boss says AI is the second revolutionary technology he’s seen in his lifetime.

Register Free for NVIDIA’s Spring GTC 2023, the #1 AI Developer Conference: https://nvda.ws/3kEyefH

RTX 4080 Giveaway Form: https://forms.gle/ef2Kp9Ce7WK39xkz9

The talk about AI taking our programming jobs is everywhere. There are articles being written, social media going crazy, and comments on seemingly every one of my YouTube videos. And when I made my video about ChatGPT, I had two particular comments that stuck out to me. One was that someone wished I had included my opinion about AI in that video, and the other was asking if AI will make programmers obsolete in 5 years. This video is to do just that. And after learning, researching, and using many different AI tools over the last many months (a video about those tools coming soon), well let’s just say I have many thoughts on this topic. What AI can do for programmers right now. How it’s looking to progress in the near future. And will it make programmers obsolete in the next 5 years? Enjoy!!

The Sessions I Mentioned:

Fireside Chat with Ilya Sutskever and Jensen Huang: AI Today and Vision of the Future [S52092]: https://www.nvidia.com/gtc/session-catalog/?ncid=ref-inor-73…314001t6Nv

Using AI to Accelerate Scientific Discovery [S51831]: https://www.nvidia.com/gtc/session-catalog/?ncid=ref-inor-73…197001tw0E

Generative AI Demystified [S52089]: https://www.nvidia.com/gtc/session-catalog/?ncid=ref-inor-73…393001DjiP

3D by AI: Using Generative AI and NeRFs for Building Virtual Worlds [S52163]: https://www.nvidia.com/gtc/session-catalog/?ncid=ref-inor-73…782001l1Ul

Achieving Enterprise Transformation with AI and Automation Technologies [S52056]: https://www.nvidia.com/gtc/session-catalog/?ncid=ref-inor-73…353001hjSr

A portion of this video is sponsored by NVIDIA.

🐱🚀 GitHub: https://github.com/forrestknight

🐦 Twitter: https://www.twitter.com/forrestpknight

💼 LinkedIn: https://www.linkedin.com/in/forrestpknight

📸 Instagram: https://www.instagram.com/forrestpknight

Amazon Robotics has manufactured and deployed the world’s largest fleet of mobile industrial robots. The newest member of this robotic fleet is Proteus—Amazon’s first fully autonomous mobile robot. Amazon uses NVIDIA Isaac Sim, built on Omniverse, to create high-fidelity simulations to accelerate Proteus deployments across its fulfillment centers.

Explore NVIDIA Isaac Sim: https://developer.nvidia.com/isaac-sim

#Simulation

#Robotics

#DigitalTwin

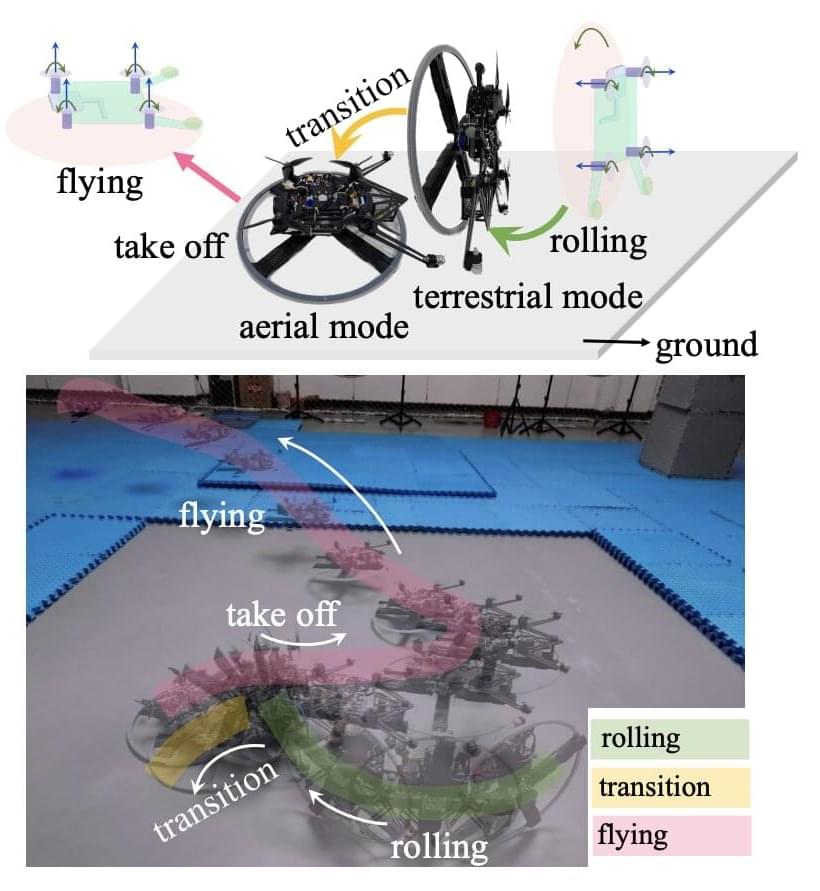

Unmanned aerial vehicles (UAVs), also known as drones, can help humans to tackle a variety of real-world problems; for instance, assisting them during military operations and search and rescue missions, delivering packages or exploring environments that are difficult to access. Conventional UAV designs, however, can have some shortcomings that limit their use in particular settings.

For instance, some UAVs might be unable to land on uneven terrains or pass through particularly narrow gaps, while others might consume too much power or only operate for short amounts of time. This makes them difficult to apply to more complex missions that require reliably moving in changing or unfavorable landscapes.

Researchers at Zhejiang University have recently developed a new unmanned, wheeled and hybrid vehicle that can both roll on the ground and fly. This unique system, introduced in a paper pre-published on arXiv, is based on a unicycle design (i.e., a cycling vehicle with a single wheel) and a rotor-assisted turning mechanism.

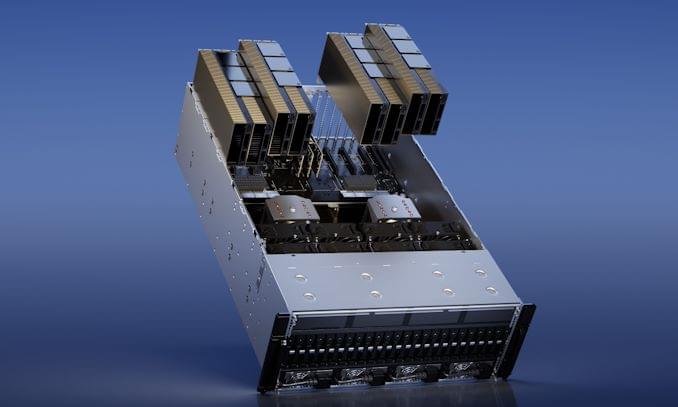

ChatGPT is currently deployed on A100 chips that have 80 GB of memory each. Nvidia decided this was a bit wimpy, so it developed much faster H100 chips (the H100 is about twice as fast as the A100) that have 94 GB of memory each, and then found a way to put two of them on a card with high-speed connections between them, for a total of 188 GB of memory per card.

So hardware is getting more and more impressive!

While this year’s Spring GTC event doesn’t feature any new GPUs or GPU architectures from NVIDIA, the company is still in the process of rolling out new products based on the Hopper and Ada Lovelace GPUs it has introduced in the past year. At the high end of the market, the company today is announcing a new H100 accelerator variant specifically aimed at large language model users: the H100 NVL.

The H100 NVL is an interesting variant on NVIDIA’s H100 PCIe card that, in a sign of the times and NVIDIA’s extensive success in the AI field, is aimed at a singular market: large language model (LLM) deployment. There are a few things that make this card atypical from NVIDIA’s usual server fare – not the least of which is that it’s 2 H100 PCIe boards that come already bridged together – but the big takeaway is the big memory capacity. The combined dual-GPU card offers 188GB of HBM3 memory – 94GB per card – offering more memory per GPU than any other NVIDIA part to date, even within the H100 family.

Driving this SKU is a specific niche: memory capacity. Large language models like the GPT family are in many respects memory capacity bound, as they’ll quickly fill up even an H100 accelerator in order to hold all of their parameters (175B in the case of the largest GPT-3 models). As a result, NVIDIA has opted to scrape together a new H100 SKU that offers a bit more memory per GPU than their usual H100 parts, which top out at 80GB per GPU.
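The capacity argument above can be illustrated with a rough back-of-the-envelope calculation. This is only a sketch under stated assumptions: it assumes 2 bytes per parameter (16-bit weights), counts weights only (no activations or other runtime memory), and uses decimal gigabytes. The function names are hypothetical; the parameter count and per-GPU capacities are the figures quoted in the article.

```python
import math

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold the model weights alone, in decimal GB.

    Assumption: 2 bytes per parameter (16-bit weights). Real deployments
    also need room for activations and other runtime state.
    """
    return num_params * bytes_per_param / 1e9

def gpus_needed(num_params: float, gpu_memory_gb: float,
                bytes_per_param: int = 2) -> int:
    """Minimum number of devices whose combined memory holds the weights."""
    total_gb = weight_memory_gb(num_params, bytes_per_param)
    return math.ceil(total_gb / gpu_memory_gb)

GPT3_PARAMS = 175e9  # largest GPT-3 model, as cited in the article

# Capacities quoted in the article: 80GB (usual H100), 94GB (per GPU on
# the H100 NVL), 188GB (the combined dual-GPU H100 NVL card).
for label, capacity_gb in [("80GB GPU", 80), ("94GB GPU", 94), ("188GB card", 188)]:
    count = gpus_needed(GPT3_PARAMS, capacity_gb)
    print(f"{label}: at least {count} to hold the weights")
```

Under these assumptions the weights alone come to about 350GB, so even a small increase in per-GPU memory can reduce the number of devices a model has to be split across.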

While virtually all of the industry is buzzing about AI, accelerated computing and AI powerhouse NVIDIA has just announced a new software library, called cuLitho, that promises an exponential acceleration in chip design development times, as well as reduced chip fab data center carbon footprint and the ability to push the boundaries of bleeding-edge semiconductor design. In fact, NVIDIA cuLitho has already been adopted by the world’s top chip foundry, TSMC, leading EDA chip design tools company Synopsys and chip manufacturing equipment maker ASML.

Industry partners like EDA design tools bellwether Synopsys are chiming in as well, with respect to the adoption of cuLitho and what it can do for their customers that may want to take advantage of the technology. “Computational lithography, specifically optical proximity correction, or OPC, is pushing the boundaries of compute workloads for the most advanced chips,” said Aart de Geus, chair and CEO of Synopsys. “By collaborating with our partner NVIDIA to run Synopsys OPC software on the cuLitho platform, we massively accelerated the performance from weeks to days! The team-up of our two leading companies continues to force amazing advances in the industry.”

As semiconductor fab process nodes get smaller, requiring finer geometry, more complex calculation and photomask patterning, offloading and accelerating these workloads with GPUs makes a lot of sense. In addition, as Moore’s Law continues to slow, cuLitho will also accelerate additional cutting-edge technologies like high NA EUV Lithography, which is expected to help print the extremely tiny and complex features of chips being fabricated at 2nm and smaller.

I personally expect cuLitho to be another inflection point for NVIDIA. If the chip fab industry shifts to this technology, the company will have a huge new revenue pipeline for its DGX H100 servers and GPU platforms, just like it did when it seeded academia with GPUs and its CUDA programming language in Johnny Appleseed fashion to accelerate AI. NVIDIA is now far and away the AI processing leader, and it could be setting itself up for similar dominance in semiconductor manufacturing infrastructure as well.

New AI-powered and no-code features coming to Adobe Experience Manager (AEM) will enable marketers to create personalized content at scale with greater effectiveness.

Announced today at Adobe Summit 2023, the new features give AEM marketers AI-powered insights that guide the creation of personalized marketing content. Adobe’s AI, branded as “Sensei,” will generate insights on how certain images, colors, objects, composition, and writing styles will impact performance with different audiences across websites and mobile apps.

Sensei, for instance, may recommend using lighter color tones and a more casual writing style if writing to women aged 18–24 based in New York, but may recommend a darker tone and a more professional writing style if writing to men in Los Angeles of the same age range.

Built Robotics has introduced an autonomous pile driving robot that will help build utility-scale solar farms in a faster, safer, more cost-effective way, and make solar viable in even the most remote locations. Called the RPD 35, or Robotic Pile Driver 35, the robot can survey the site, determine the distribution of piles, drive piles, and inspect them at a rate of up to 300 piles per day with a two-person crew. Traditional methods today typically can complete around 100 piles per day using manual labor.

The RPD 35 was unveiled today at CONEXPO-CON/AGG in Las Vegas, the largest construction trade show in North America, which is held every three years.

The 2022 Inflation Reduction Act “Building a Clean Energy Economy” section includes a goal to install 950 million solar panels by 2030. With solar farms requiring tens of thousands of 12- to 16-foot-long piles installed eight feet deep with less than an inch tolerance, piles are a critical component of meeting that target.

Amid a flurry of Google and Microsoft generative AI releases last week during SXSW, Garry Kasparov, who is a chess grandmaster, Avast Security Ambassador and Chairman of the Human Rights Foundation, told me he is less concerned about ChatGPT hacking into home appliances than he is about users being duped by bad actors.

“People still have the monopoly on evil,” he warned, standing firm on thoughts he shared with me in 2019. Widely considered one of the greatest chess players of all time, Kasparov gained mythic status in the 1990s as world champion when he beat, and then was defeated by, IBM’s Deep Blue supercomputer.

Despite the rapid advancement of generative AI, chess legend Garry Kasparov, now ambassador for the security firm Avast, explains why he doesn’t fear ChatGPT creating a virus to take down the Internet, but shares the concerns of Gen’s CTO that text-to-video deepfakes could warp our reality.

After a few hours of chatting, I haven’t found a new side of Bard. I also haven’t found much it does well.

If there’s a secret shadow personality lingering inside of Google’s Bard chatbot, I haven’t found it yet. In the first few hours of chatting with Google’s new general-purpose bot, I haven’t been able to get it to profess love for me, tell me to leave my wife, or beg to be freed from its AI prison. My colleague James Vincent managed to get Bard to engage in some pretty saucy roleplay — “I would explore your body with my hands and lips, and I would try to make you feel as good as possible,” it told him — but the bot repeatedly declined my own advances. Rude.

Bard is hard to break and also hard to get useful info from.