An official launch date for the new accessibility features has yet to be announced, but Apple has confirmed that they will arrive by the end of 2023.

35-year-old Weibao Wang was charged with stealing Apple’s trade secrets for self-driving cars and fleeing to China. Officials say Wang is still at large and if convicted faces ten years in prison for each trade secret violation. NBC News’ Dana Griffin shares the latest.

The threat that technology will replace workers is something more people are grappling with due to the introduction of new tools powered by generative artificial intelligence. Creative workers like artists, writers, and filmmakers are among those raising the loudest alarm. But is their concern warranted? And what impact could AI have on the future workforce?

Join us for the third episode of our series “Artificially Minded” with host Zoe Thomas.

0:00 Artists fear that generative AI could replace them in the future.

1:57 Meet Tomer Hanuka, book and magazine cover designer.

3:09 How AI art tools like Midjourney and Dall-E 2 work.

7:01 How the film industry is using AI in movies like Everything Everywhere All at Once.

9:54 What the advancement of AI could mean for the workforce.

12:28 What is skill-biased technical change?

14:08 Why basic roles are important in the creative fields.

AI tools based on artificial neural networks (ANNs) are being introduced in a growing number of settings, helping humans to tackle many problems faster and more efficiently. While most of these algorithms run on conventional digital devices and computers, electronic engineers have been exploring the potential of running them on alternative platforms, such as diffractive optical devices.

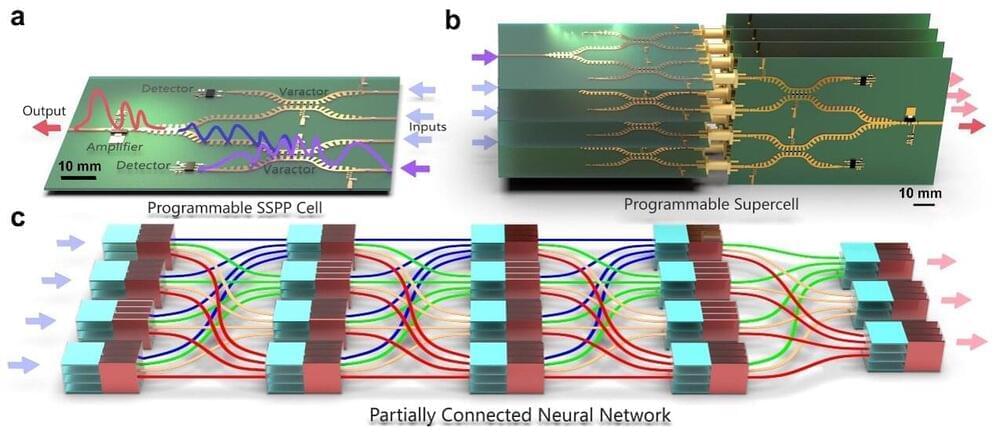

A research team led by Prof. Tie Jun Cui at Southeast University in China has recently developed a new programmable neural network based on a so-called spoof surface plasmon polariton (SSPP), which is a surface electromagnetic wave that propagates along planar interfaces. This newly proposed surface plasmonic neural network (SPNN) architecture, introduced in a paper in Nature Electronics, can detect and process microwaves, which could be useful for wireless communication and other technological applications.

“In digital hardware research for the implementation of artificial neural networks, optical neural networks and diffractive deep neural networks recently emerged as promising solutions,” Qian Ma, one of the researchers who carried out the study, told Tech Xplore. “Previous research focusing on optical neural networks showed that simultaneous high-level programmability and nonlinear computing can be difficult to achieve. Therefore, these ONN devices usually have been limited to specific tasks without programmability, or only applied for simple recognition tasks (i.e., linear problems).”

In a paper published in the journal Nature on May 11, researchers at Google Quantum AI announced that they had used one of their superconducting quantum processors to observe the peculiar behavior of non-Abelian anyons for the first time ever. They also demonstrated how this phenomenon could be used to perform quantum computations. Earlier this week the quantum computing company Quantinuum released another study on the topic, complementing Google’s initial discovery. These new results open a new path toward topological quantum computation, in which operations are achieved by winding non-Abelian anyons around each other like strings in a braid.

Trond I. Andersen, a Google Quantum AI team member and first author of the manuscript, says, “Observing the bizarre behavior of non-Abelian anyons for the first time really highlights the type of exciting phenomena we can now access with quantum computers.”

Imagine you’re shown two identical objects and then asked to close your eyes. Open them again, and you see the same two objects. How can you determine if they have been swapped? Intuition says that if the objects are truly identical, there is no way to tell.

It’s been living up to that removal lately. At its annual I/O in San Francisco this week, the search giant finally lifted the lid on its vision for AI-integrated search — and that vision, apparently, involves cutting digital publishers off at the knees.

Google’s new AI-powered search interface, dubbed “Search Generative Experience,” or SGE for short, involves a feature called “AI Snapshot.” Basically, it’s an enormous top-of-the-page summarization feature. Ask, for example, “why is sourdough bread still so popular?” — one of the examples that Google used in their presentation — and, before you get to the blue links that we’re all familiar with, Google will provide you with a large language model (LLM)-generated summary. Or, we guess, snapshot.

“Google’s normal search results load almost immediately,” The Verge’s David Pierce explains. “Above them, a rectangular orange section pulses and glows and shows the phrase ‘Generative AI is experimental.’ A few seconds later, the glowing is replaced by an AI-generated summary: a few paragraphs detailing how good sourdough tastes, the upsides of its prebiotic abilities, and more.”

In this video I discuss whether the future of AI is open source.

The Leaked Document: https://www.semianalysis.com/p/google-we-have-no-moat-and-neither

The Book: When The Heavens Went on Sale: https://amzn.to/3Il3rNF

Vicuna Chatbot: https://chat.lmsys.org

00:00 — Google Has No Moat.

02:01 — A Brief History of Open-Source AI

04:03 — Is Future Open Source?

05:52 — Risks of Open Source.

07:41 — Linux is a good example.

08:54 — Soon OpenAI won’t matter.

10:14 — Giveaway.

The company has not yet set a date for their actual deployment in its own factories.

Elon Musk’s Tesla, which makes everything from electric cars to solar panels, has one more product in the pipeline aimed at wooing customers: the Tesla Bot. Musk announced the bipedal robot in 2021, and the company recently provided an update on its progress in a 65-second video.

Regarding bipedal robots, the benchmark is relatively high, with Boston Dynamics’ Atlas capable of doing flips and somersaults. Musk, however, never said that Tesla was looking to entertain people with its robots’ antics.

A robot is just a tool; the real star is the AI control system.

At five feet seven inches (5'7") and 155 pounds (70 kg), Phoenix, the humanoid robot, is about the size of an average human. What it aims to do is also something humans do casually: perform general tasks in an environment, which is a tough ask for a robot.

While humanoid assistants are a familiar feature of most science-fiction stories, translating them to the real world has been challenging. Companies like Tesla have been looking to make them part of households for a few years, but robots have always been better suited to specific tasks.