Timnit Gebru co-authored a research paper while she worked at Google, which identified the biases of machine learning.

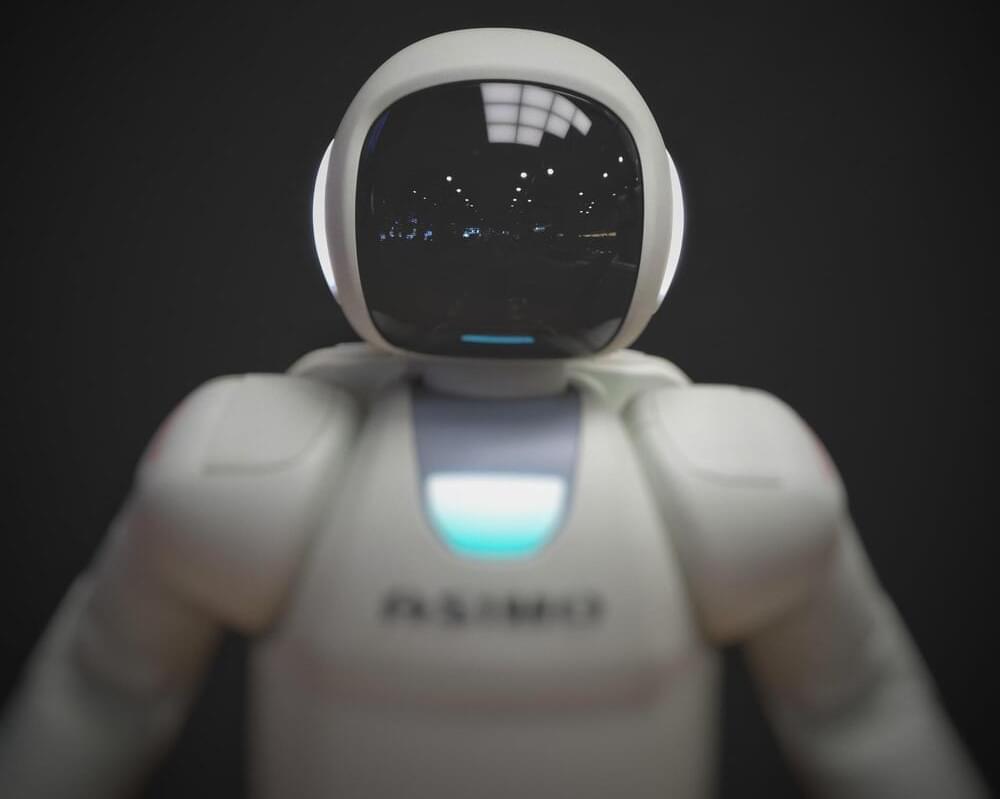

Increasingly, social robots are being used for support in educational contexts. But does the sound of a social robot affect how well it performs, especially when dealing with teams of humans? Teamwork is a key factor in human creativity, boosting collaboration and new ideas. Danish scientists set out to understand whether robots using a voice designed to sound charismatic would be more successful as team creativity facilitators.

“We had a robot instruct teams of students in a creativity task. The robot either used a confident, passionate—ie charismatic—tone of voice or a normal, matter-of-fact tone of voice,” said Dr. Kerstin Fischer of the University of Southern Denmark, corresponding author of the study in Frontiers in Communication. “We found that when the robot spoke in a charismatic speaking style, students’ ideas were more original and more elaborate.”

We know that social robots acting as facilitators can boost creativity, and that the success of facilitators is at least partly dependent on charisma: people respond to charismatic speech by becoming more confident and engaged. Fischer and her colleagues aimed to see if this effect could be reproduced with the voices of social robots by using a text-to-speech function engineered for characteristics associated with charismatic speaking, such as a specific pitch range and way of stressing words. Two voices were developed, one charismatic and one less expressive, based on a range of parameters which correlate with perceived speaker charisma.

In the company’s quarterly earnings call earlier this month, CEO Tim Cook said Apple is planning to “weave” AI into its products, per The Independent. But he also cautioned about the future of the technology.

“I do think it’s very important to be deliberate and thoughtful in how you approach these things,” he said, per Inc. “And there’s a number of issues that need to be sorted as is being talked about in a number of different places, but the potential is certainly very interesting.”

Apple is also telling some employees to limit their use of ChatGPT and other external AI tools, according to an internal document seen by the Journal. That includes the automated coding tool Copilot, from the Microsoft-owned GitHub.

A team of researchers from Stanford University and Google let 25 AI-powered bots loose inside a virtual town — and they acted a lot more like humans than you might expect.

As detailed in a recent, yet-to-be-peer-reviewed study, the researchers trained 25 different “generative agents,” using OpenAI’s GPT-3.5 large language model, to “simulate believable human behavior” such as cooking up breakfast, going to work, or practicing a specific profession like painting or writing.

A virtual town called “Smallville” allowed these agents to hop from school to a cafe, or head to a bar after work.

AI-powered photo editing tool.

The research team noted in its paper that regenerating the edited parts of an image can add new details that benefit the result. “Our approach can hallucinate occluded content, like the teeth inside a lion’s mouth, and can deform following the object’s rigidity, like the bending of a horse leg.”

There are many brands that are attempting to offer editing options for generative AI content. However, most do not go as far as allowing for the actual editing of images, but rather for aspects such as editing around images. For example, Microsoft’s Designer app allows you to generate AI images from a text prompt, and you can select your favorite from three results, then take it to the design studio where you can create a host of creativity and productivity-based projects, such as social media posts, invitations, digital postcards, or graphics with the image as the focal point. However, you cannot edit the AI-generated image.

With the DragGAN tool still being a demo for now, there is no telling what the quality of a readily available technology would be, or if it would even be possible, especially since the demos are based on low-resolution videos. However, it is an interesting example of how quickly AI continues to develop.

John Yang:

Artificial intelligence is finding its place in all sorts of scientific fields, and perhaps none holds more life-saving promise than healthcare. Programs are learning to answer patients’ medical questions and diagnose illnesses. But there’s still some problems to be worked out. Earlier, I spoke with Dr. Isaac Kohane, the editor-in-chief of the New England Journal of Medicine AI, and the chair of Harvard’s Department of Biomedical Informatics. I asked him about AI’s potential in medicine.

Dr. Isaac Kohane, Editor-in-Chief, New England Journal of Medicine AI: Doctors can definitely use AI as an augmentation, so they’ll remember or be reminded of all the things that they should know about their patient, their specific patient, and all other similar patients like them.

The AI “arms race” commences. Silicon Valley is looking to capitalize on AI’s big moment, and every tech Goliath worth its salt is feverishly looking to churn out a new product to keep pace with ChatGPT’s 100 million users. Microsoft kicked things off nicely earlier this month with its integration of ChatGPT into Bing, with Microsoft CEO Satya Nadella proclaiming, “The race starts today.” The OG tech giant says it wants to use the chatbot to “empower people to unlock the joy of discovery,” whatever that means. Not to be outdone, Google announced that it would be launching its own AI search integration, dubbed “Bard” (Google’s tool already made a mistake upon launch, which sent the company’s stock into a slump). In China, meanwhile, the tech giants Alibaba (basically the Chinese version of Amazon) and Baidu (Chinese Google) recently announced that they would also be pursuing their own respective AI tools.

Do the people actually want an AI “revolution”? It’s not totally clear, but whether they want it or not, it’s pretty clear that the tech industry is going to give it to them. The robots are coming. Prep accordingly!

Tech startup Sanctuary AI has unveiled a general-purpose robot designed to perform many workplace tasks currently handled by people — working with humans or without them.

The challenge: Robots have worked alongside people for decades, and traditionally, they’ve been incredibly specialized. A bot on a General Motors assembly line, for example, might move pieces of metal from one place to another over and over again.

This has meant business owners would need to purchase multiple (usually expensive) robots if they wanted to automate multiple tasks.