Years after shutting down its robotics division, OpenAI is back in the game after backing a funding round for Norwegian robotics company 1X.

Proteins are made from chains of amino acids that fold into three-dimensional shapes, which in turn dictate protein function. Those shapes evolved over billions of years and are varied and complex, but also limited in number. With a better understanding of how existing proteins fold, researchers have begun to design folding patterns not produced in nature.

But a major challenge, says Kim, has been to imagine folds that are both possible and functional. “It’s been very hard to predict which folds will be real and work in a protein structure,” says Kim, who is also a professor in the departments of molecular genetics and computer science at U of T. “By combining biophysics-based representations of protein structure with diffusion methods from the image generation space, we can begin to address this problem.”

The new system, which the researchers call ProteinSGM, draws on a large set of image-like representations of existing proteins that accurately encode their structure. The researchers feed these images into a generative diffusion model. A forward process gradually adds noise until each image is pure noise, and the model learns to run that process in reverse, turning random pixels into clear images that correspond to entirely novel proteins.
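
The noising step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not ProteinSGM's code. The "image" here is a plain pairwise distance map between residue coordinates, and the noise follows a DDPM-style linear schedule. Both choices are assumptions made for illustration, and the helper names `distance_map`, `alpha_bar` and `noise_image` are hypothetical. The sketch shows only the forward process; the learned reverse (denoising) network is omitted.

```python
import math
import random

def distance_map(coords):
    """Build an N x N 'image' of pairwise distances between residue coordinates.

    Assumption: a simple distance map stands in for ProteinSGM's richer
    image-like structure representation.
    """
    return [[math.dist(a, b) for b in coords] for a in coords]

def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of signal variance left after t noising steps.

    Assumption: DDPM-style linear beta schedule from beta_start to beta_end.
    """
    prod = 1.0
    for i in range(t):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        prod *= 1.0 - beta
    return prod

def noise_image(image, t, num_steps, rng):
    """Sample the noised image x_t = sqrt(abar) * x_0 + sqrt(1 - abar) * eps."""
    abar = alpha_bar(t, num_steps)
    signal, noise_scale = math.sqrt(abar), math.sqrt(1.0 - abar)
    return [[signal * px + noise_scale * rng.gauss(0.0, 1.0) for px in row]
            for row in image]

# Toy 4-residue chain, consecutive residues 3.8 units apart (roughly the
# C-alpha spacing in angstroms), laid out along the x-axis.
coords = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
x0 = distance_map(coords)
rng = random.Random(0)
for t in (0, 250, 1000):
    xt = noise_image(x0, t, 1000, rng)
    print(t, round(alpha_bar(t, 1000), 4), [round(v, 2) for v in xt[0]])
```

By the final step the factor multiplying the original image is tiny, so the result is essentially Gaussian noise. A trained model would start from noise like this and denoise it step by step into a new structure.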

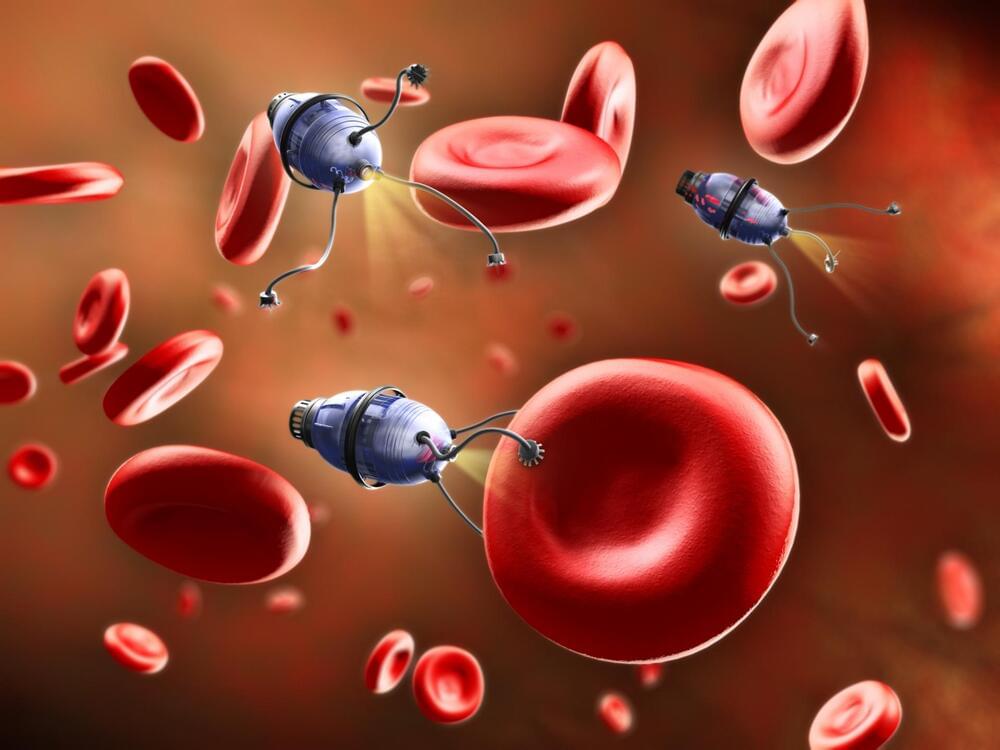

The xenobot has been predicted to become a valuable tool in medicine and other fields. It is expected not only to help treat cancer but also to help keep bodies of water clean.

Ever imagined a world where we could harness living cells to carry out specific tasks? Just as robots help us in many aspects of our lives, scientists at US universities have created a living robot known as the xenobot.

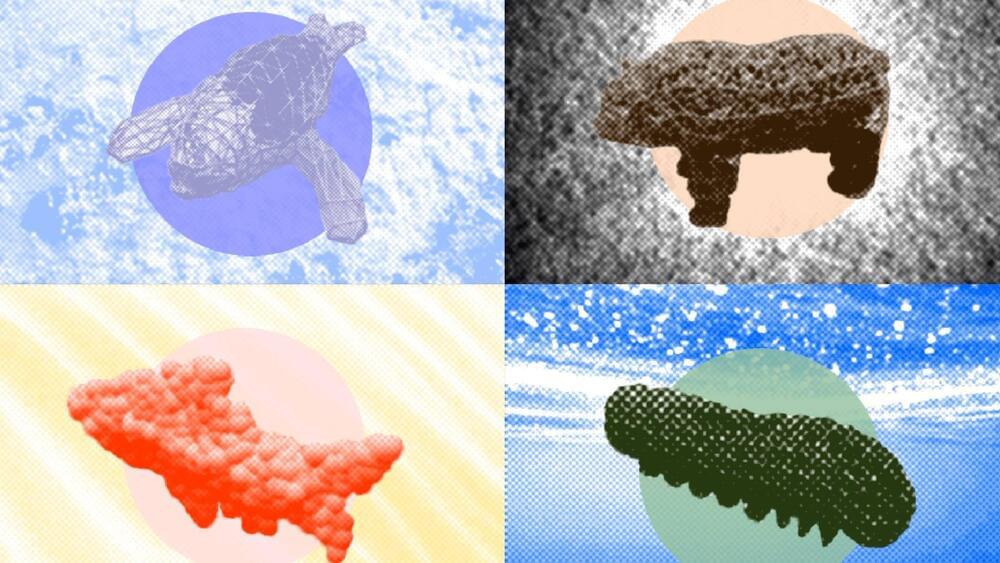

A team of MIT researchers has developed SoftZoo, a bio-inspired platform that lets engineers study soft robot co-design, the joint design of a robot's body and its control. The platform is named for the animal-like robots that inspired it.

This is according to a report by the institution published on Tuesday.

The platform includes 3D models of animals such as pandas, fish, sharks, and caterpillars.

“Our robots are born from sculptors for sculpture,” says the artist.

A new startup called Robotor aims to transform how sculptures are made by simplifying the sculpting process with robotics and artificial intelligence. Founded by Filippo Tincolini and Giacomo Massari, the company wants to make these works of art faster, easier, and more sustainable to produce.

The technology makes it possible to create structures once considered inconceivable, according to a TNW report published on Friday.

“It’s not perfect, but it’s still pretty magical at the same time. I think it dramatically transforms what Khan Academy is going to become.”

Khan Academy, a pioneer of digital education and a champion of free learning for the less privileged, has set its sights on turning artificial intelligence into a guide for students.

When Sam Altman and Greg Brockman, OpenAI's CEO and president, respectively, gave Khan Academy founder Sal Khan a private demo of their GPT software, Khan was impressed by the program's ability to answer academic questions intelligently, Fast Company reports.

A fast-food chain struggling to hire staff is using AI to help fill the gaps at the drive-thru.

For more Local News from KPHO: https://www.azfamily.com/

Code Interpreter is arguably OpenAI's most interesting ChatGPT plugin, and it gives the chatbot entirely new capabilities.

At the end of March, OpenAI introduced a major new feature for ChatGPT: plugins. One of them is Code Interpreter, which lets the language model not only generate code but also execute it on its own.
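
The generate-then-execute idea can be illustrated with a minimal Python sketch. It is not how OpenAI implements Code Interpreter. The model call is replaced by a hypothetical `fake_model_generate` function that returns canned code. The generated code then runs in a separate Python process via `subprocess.run` with a timeout. A separate process is not a security sandbox, and a production system would need real isolation.

```python
import subprocess
import sys

def fake_model_generate(prompt: str) -> str:
    """Hypothetical stand-in for a language model: returns canned Python source."""
    # A real system would send `prompt` to a model; this fixed snippet keeps the sketch offline.
    return "numbers = [3, 1, 4, 1, 5]\nprint(sum(numbers))"

def execute(code: str, timeout: float = 10.0) -> str:
    """Run generated code in a separate Python process and return its stdout."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,  # raises subprocess.TimeoutExpired if the code runs too long
    )
    if result.returncode != 0:
        # Surface errors so the caller (or the model) can react to them.
        return "Error:\n" + result.stderr
    return result.stdout

code = fake_model_generate("Sum the numbers 3, 1, 4, 1, 5")
print(execute(code))  # prints 14
```

Returning stderr instead of raising is one possible design choice. It would let a system pass the error back to the model so the model can try to correct its own code.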

As with Auto-GPT, the active developer community found compelling use cases for the technology within a very short time. The tool appears to open up new possibilities especially for data journalism and similar data analysis. This is partly because users can upload and download files of up to 100 MB.
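
To make the data-journalism use case concrete, here is a minimal sketch of the kind of code such a tool might write and run for a question like "which region has the most incidents?". Several elements are assumptions for illustration only:

- the sample data
- the helper names `check_upload_size` and `count_by_column`
- reading "100 MB" as 100 * 1024 * 1024 bytes

```python
import csv
import io
from collections import Counter

# Assumption: "100 MB" read as 100 * 1024 * 1024 bytes; the actual limit may be defined differently.
MAX_UPLOAD_BYTES = 100 * 1024 * 1024

def check_upload_size(num_bytes: int) -> bool:
    """Return True if a file of num_bytes fits within the assumed upload limit."""
    return 0 <= num_bytes <= MAX_UPLOAD_BYTES

def count_by_column(csv_text: str, column: str) -> Counter:
    """Count rows per distinct value of `column`, a typical first step in a data story."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)

# Hypothetical sample data, standing in for an uploaded CSV file.
sample = "region,category\nnorth,theft\nsouth,theft\nnorth,fire\n"
print(check_upload_size(len(sample.encode("utf-8"))))  # True
print(count_by_column(sample, "region").most_common(1))  # [('north', 2)]
```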