Year 2021. This bit of DNA could, in principle, be synthesized to regrow humans after a critical injury, much like Wolverine from the Marvel movies.

Two species of sea slugs can pop off their heads and regrow their entire bodies from the noggin down, scientists in Japan recently discovered. This incredible feat of regeneration can be achieved in just a couple of weeks and is absolutely mind-blowing.

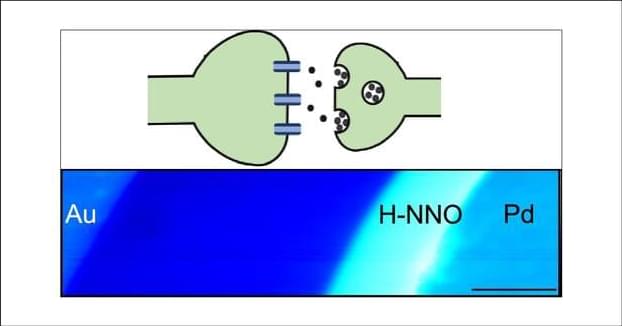

Most cases of animal regeneration, in which damaged or lost body parts are replaced with identical copies, occur when arms, legs or tails are lost to predators and must be regrown. But these sea slugs, which belong to a group called sacoglossans, take it to the next level by regrowing an entirely new body from just their heads, which they seem to be able to detach from their original bodies on purpose.

If that weren’t strange enough, the slugs’ heads can survive autonomously for weeks thanks in part to their unusual ability to photosynthesize like plants, an ability they hijack from the algae they eat. And if that’s still not bizarre enough, the original decapitated body can also go on living for days or even months without its head.